Northwestern Engineering

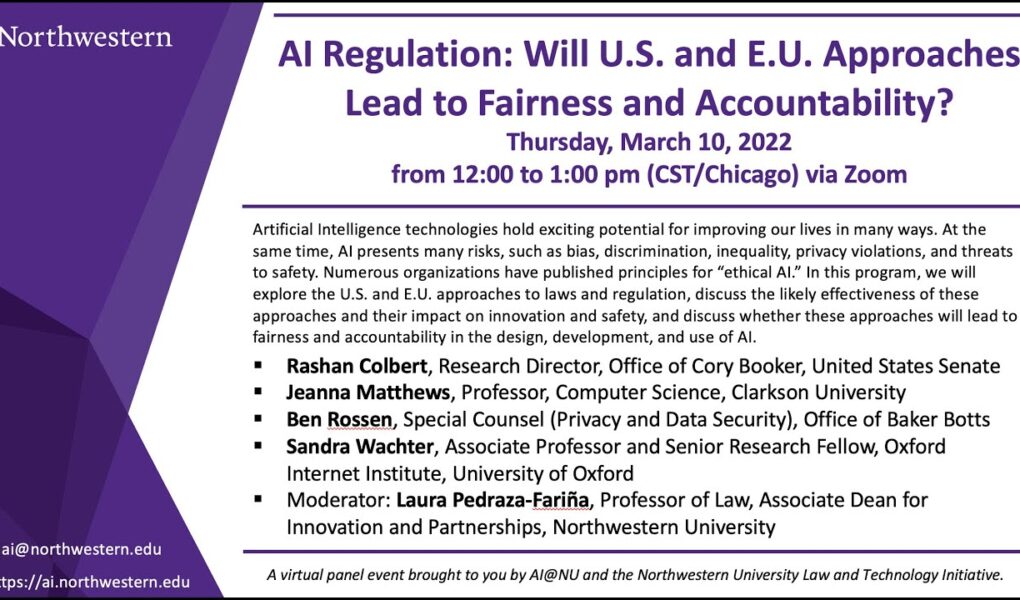

Artificial intelligence (AI) technologies hold exciting potential for improving our lives in many ways. At the same time, AI presents many risks, such as bias, discrimination, inequality, privacy violations, and threats to safety. Numerous organizations have published principles for “ethical AI.” There is growing recognition, however, that principles are not enough, and many have begun to call for enforceable laws and regulations. In the United States, the Algorithmic Accountability Act (AAA) was introduced in 2019 and again in February 2022. The AAA would require covered entities to perform impact assessments. In the European Union, a broadly applicable risk-based approach has been proposed, with stringent regulation for high-risk uses and less regulation for minimal-risk systems.

This program explores the U.S. and E.U. approaches, discusses their likely effectiveness and their impact on innovation and safety, and considers whether they will lead to fairness and accountability in the design, development, and use of AI.

The conversation featured Rashan Colbert, Jeanna Matthews, Ben Rossen, and Sandra Wachter in a virtual panel moderated by Laura Pedraza-Fariña.

This virtual event was hosted by AI@NU and the Northwestern Law and Technology Initiative.
