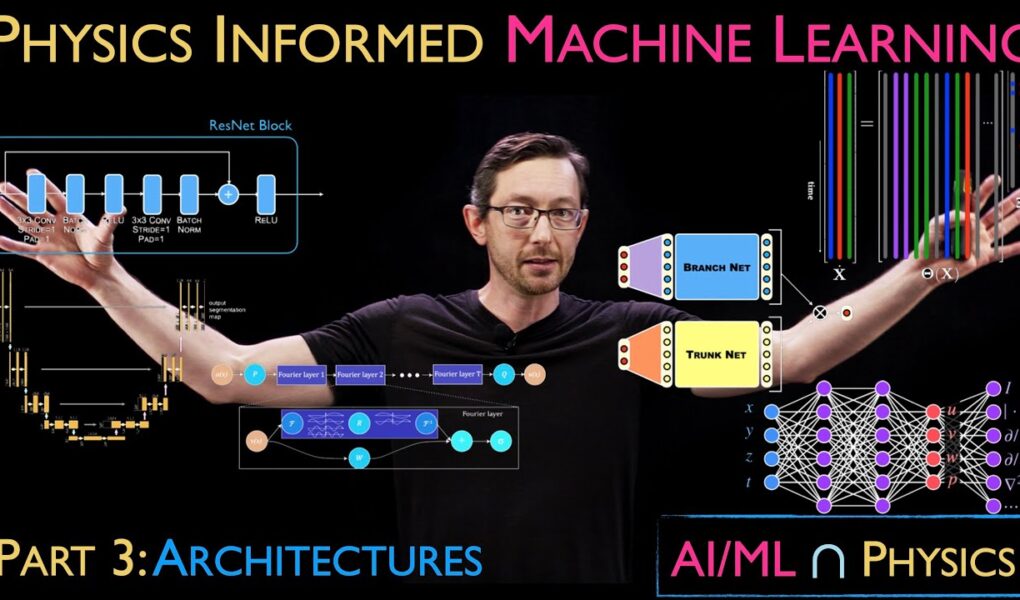

Steve Brunton

This video discusses the third stage of the machine learning process: choosing an architecture with which to represent the model. This is one of the most exciting stages, since it covers the many new architectures, such as UNets, ResNets, SINDy, PINNs, operator networks, and many more. There are opportunities to incorporate physics into this stage of the process, for example by building known symmetries into the model through custom equivariant layers.

This video was produced at the University of Washington, and we acknowledge funding support from the Boeing Company.

%%% CHAPTERS %%%

00:00 Intro

01:03 The Architecture Zoo/Architectures Overview

06:29 What is Physics?

12:38 Case Study: Pendulum

17:10 Defining a Function Space

20:51 Case Studies: Physics Informed Architectures

23:36 ResNets

24:26 UNets

25:15 Physics Informed Neural Networks

26:50 Lagrangian Neural Networks

27:24 Deep Operator Networks

27:49 Fourier Neural Operators

28:23 Graph Neural Networks

30:02 Invariance and Equivariance

35:59 Outro

Turkish please

Steven, I think that, on top of references to Judea Pearl and his study on causality, with the language/algebra he codified to compute around it, you'd want to consider Sherri Rose's and Mark van der Laan's Targeted Learning and their discussion about which data is actually useful to estimate a causally interpreted coefficient, amongst other things 🙂

Nice video on machine learning 😊😊

Nicee

but can we do some actual coding though

I can't believe this is free

Studying PINNs at the moment, your videos are helping a lot.

Thank you….

Just discovered the channel and I feel that I just found a treasure

Nice Dr. Brunton, Looking forward to those videos.

Happy to have some problem-solving and predictive tools that fit everywhere in electrical engineering.

Thank you

Whoa! I am blown away by your insights here, thank you for sharing them and making an effort to spread knowledge.

Another exceptional lecture as expected! I'm curious if you could consider delivering a lecture on LSTMs. There's some research highlighting their application in designing virtual sensors for wind energy applications. Thank you!

Thank you so much for this series ❤

Just one question – how does one develop an intuition to exactly know what kind of neural architecture they need and how to code it?

Great video and I learned a lot! One question open for anyone, what do you think about the prevalence of foundation models in vision and language modeling? Nowadays the state-of-the-art is to take a foundation model and fine-tune it to an application, which involves no problem-specific choice in architecture. Do you think there will be a large physics foundation model or that the choice of architecture is fundamentally application-specific? Cheers from someone working on vision.

I am starting my Master's thesis work using PINNs, and this channel has been a great start and mind opener. Thanks, Prof. Steve

32:34 Invariance vs. equivariance in a neural network architecture (NNA): would the transformation g be in situ or a post-process?

By that I mean: A) is the transformation g after f() applied IN the NNA itself, or B) does "the output of my neural network is also run through" mean that the NNA's OUTPUT has a process run on it AFTER it leaves the NNA?

If it's A, does that mean transformations themselves can be identified/labeled through equivariant NNAs separately from the content? (e.g., this dog is facing down a hill, this isn't a number one, it's a dash character, etc.)

If it can't label a transformation, what's the point of the NNA transforming its subject internally before output if the original wasn't transformed?

If it's B, where transformations have to be done afterwards, why bother mentioning it, or doing it afterwards, if the subject isn't transformed in the first place?

The explanation of the motivation for reducing the data needed helps a lot in choosing approaches, by using an equivariant architecture (due to symmetry groups, aka Lie groups), and makes a lot of sense. I'm just missing some intuition on what happens when: transformations happening internally seem like a waste of processing, or a source of hallucinations, if you're not just trying to generate data.
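On the invariance vs. equivariance question above, a small numerical sketch may help. It is not taken from the video; it assumes a toy 1D setting where the symmetry group is circular shifts, and the names `circular_conv`, `invariant_readout`, and `shift` are hypothetical helpers made up for illustration. The point it tries to show is that the transformation g is not a step the network performs. Equivariance is a property of the layer: applying g to the input and then the layer gives the same result as applying the layer and then g. Invariance means the output does not change at all when g is applied to the input.

```python
import numpy as np

def shift(x, k):
    """The group action g: circularly shift a 1D signal by k samples."""
    return np.roll(x, k)

def circular_conv(x, kernel):
    """Toy shift-equivariant layer: circular cross-correlation with a small kernel."""
    n = len(x)
    return np.array([
        sum(kernel[j] * x[(i + j) % n] for j in range(len(kernel)))
        for i in range(n)
    ])

def invariant_readout(x, kernel):
    """Toy shift-invariant model: equivariant layer followed by global sum pooling."""
    return circular_conv(x, kernel).sum()

rng = np.random.default_rng(0)
x = rng.normal(size=8)
kernel = np.array([0.5, -1.0, 0.25])
W = rng.normal(size=(8, 8))  # a generic dense layer with no built-in symmetry

# Equivariance: f(g x) == g f(x)
equivariant_ok = np.allclose(circular_conv(shift(x, 3), kernel),
                             shift(circular_conv(x, kernel), 3))

# Invariance: f(g x) == f(x)
invariant_ok = np.isclose(invariant_readout(shift(x, 3), kernel),
                          invariant_readout(x, kernel))

# A generic dense layer will typically satisfy neither property
dense_ok = np.allclose(W @ shift(x, 3), shift(W @ x, 3))

print(equivariant_ok, invariant_ok, dense_ok)
```

In this sketch nothing inside the layer ever shifts the data. The shift only appears in the check, which is why an equivariant architecture adds no extra processing at inference time: the weight sharing in the convolution guarantees the property for every shifted input without the model having to see shifted examples during training.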

Steve Brunton keeps mentioning hours and hours of material, but I don't see it linked anywhere. Does anyone know how we can access the mentioned material, or if it is still being made?

Fantastic. I'm a newbie to the physics side but I have to say, this makes me wanna get involved. Thank you Steve!

2:25 This is blatantly wrong. Brains don't inspire ANNs. CS people famously reject them. Spiking Neural Networks, which are VERY different, are what neuroscientists use. The last time that kind of inspiration occurred was Lapicque's 1907 paper, which came up with the ANNs, and it was brief because biological neurons don't act like ANNs.

I love this lecture series so far. Is there a text version or some published "book" that would help describe PINNs? I have seen lots of disparate articles, but not someone's seminal work on the topic. Just too new, I suppose.