FHIOxford

Winter Intelligence 2012, Oxford University: http://www.winterintelligence.org/

Video thanks to Adam Ford, http://www.youtube.com/user/TheRationalFuture

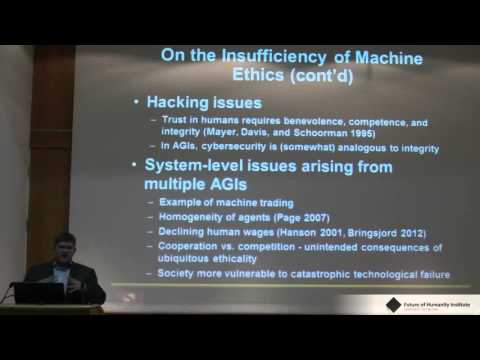

Extended Abstract: The gradually increasing sophistication of semi-autonomous and autonomous robots and virtual agents has led some scholars to propose constraining these systems’ behaviors with programmed ethical principles (“machine ethics”). While impressive machine ethics theories and prototypes have been developed for narrow domains, several factors will likely prevent machine ethics from ensuring positive outcomes from advanced, cross-domain autonomous systems. This paper critically reviews existing approaches to machine ethics in general and Friendly AI in particular (an approach to constraining the actions of future self-improving AI systems favored by the Singularity Institute for Artificial Intelligence). It finds that while such approaches may be useful for guiding the behavior of some semi-autonomous and autonomous systems in some contexts, they cannot guarantee ethical behavior and may inadvertently introduce new risks. Moreover, while some form of machine ethics may be necessary for ensuring positive social outcomes from artificial intelligence and robotics, it will not be sufficient: other social and technical measures will also be critically important for realizing positive outcomes from these emerging technologies.

Building an ethical autonomous machine requires the system designer to decide which ethical framework to implement. Unfortunately, there are currently no fully articulated moral theories that can plausibly be realized in an autonomous system, in part because the moral intuitions that ethicists attempt to systematize are not, in fact, consistent across all domains. Existing unified ethical theories are either too vague to be computationally tractable, vulnerable to compelling counterexamples, or both. [1,2] Recent neuroscience research suggests that, depending on the context of a given decision, we rely to varying extents on an intuitive, roughly deontological (means-based) moral system and on a more reflective, roughly consequentialist (ends-based) moral system, which partly explains the tensions in moral philosophy noted above. [3] While the normative significance of conflicting moral intuitions can be disputed, these findings at least bear on the viability of building a machine whose moral system would be acceptable to most humans across all domains, particularly given the need to ensure the internal consistency of a system’s programming. Should an unanticipated situation arise, or should the system be used outside its prescribed domain, negative consequences would likely result from the inherent fragility of rule-based systems.

Moreover, the complex and uncertain relationship between actions and consequences in the world means that an autonomous system (or, indeed, a human) with an ethical framework that is at least partially consequentialist cannot be relied upon with full confidence in any non-trivial task domain. This suggests a practical need for context-appropriate heuristics, and for great caution in ensuring that moral decision-making in society does not become overly centralized.[4] The intrinsic complexity and uncertainty of the world, along with other constraints such as the inability to gather the necessary data, also doom approaches (such as Friendly AI) that seek to derive a system’s utility function by extrapolating humans’ preferences. There is also a risk that the logical implications of the premises of a given ethical system may not be what the humans working on machine ethics principles believe them to be (one of the categories of machine ethics risk highlighted in Isaac Asimov’s work[5]). In other words, machine ethicists are caught in a double bind: either they depend on rigid principles for addressing particular ethical issues, and thus risk catastrophic outcomes when those rules should in fact be broken[6], or they allow an autonomous system to reason from first principles or to derive its utility function in an evolutionary fashion, and thereby risk its arriving at conclusions to which the designer would not initially have consented. Lastly, even breakthroughs in normative ethics would not ensure positive outcomes from the deployment of explicitly ethical autonomous systems.
The paper also briefly describes several factors besides machine ethics proper and suggests them as areas for further research in light of the intrinsic limitations of machine ethics: ensuring that autonomous systems are robust against hacking; developing appropriate social norms and policies to ensure ethical behavior by those who develop and use autonomous systems; and mitigating the systemic risks that could arise from dependence on ubiquitous intelligent machines.
