Foundational Research Institute

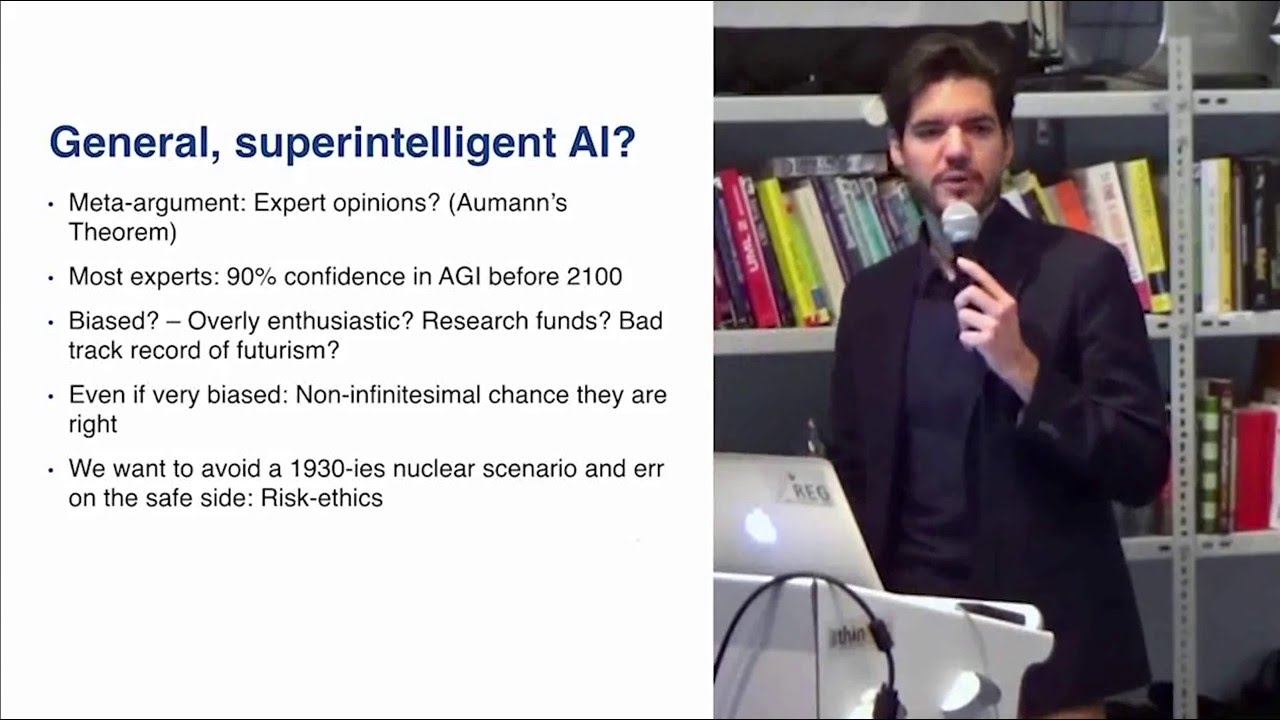

Why is AI likely to confront us with an overwhelmingly important ethical challenge? Is AI “just a tool”, or a potentially powerful and dangerous “agent”? Does controlling AI amount to “slavery”, or is it important “humane education”? Will superintelligent AIs consider humans uninteresting and leave us alone, as we do (or, in fact, don’t do) with non-human animals? What’s the worst that could happen? Would “paperclip maximisers” lead to an inanimate future, or would they also create a lot of suffering, much like (semi-)controlled AIs that lack a sufficient preference against suffering?

—

The Foundational Research Institute (FRI) explores strategies for alleviating the suffering of sentient beings in the near and far future. We publish essays and academic articles, and advise individuals and policymakers. Our current focus is on worst case scenarios and dystopian futures, such as risks of astronomical future suffering from artificial intelligence (AI). We are researching effective, sustainable and cooperative strategies to avoid dystopian outcomes. Our scope ranges from foundational questions about consciousness and ethics, such as which entities can suffer and how we should act under uncertainty, to policy implications for animal advocacy, global cooperation or AI safety.

contact@foundational-research.org

Great talk! I agree that paperclippers would be worse than most people assume, although I think it remains an open question whether paperclippers would cause more suffering than a human-controlled future, since humans might create many more human-like minds than a paperclipper would.

Thinking about the (IMHO very remote) risk that AGI emerges in the next 15 years is indeed motivating, but it might bias people toward preferring a human-controlled outcome (so that we personally don't get killed), rather than evaluating the arguments on both sides from a purely altruistic standpoint.