Santa Fe Institute

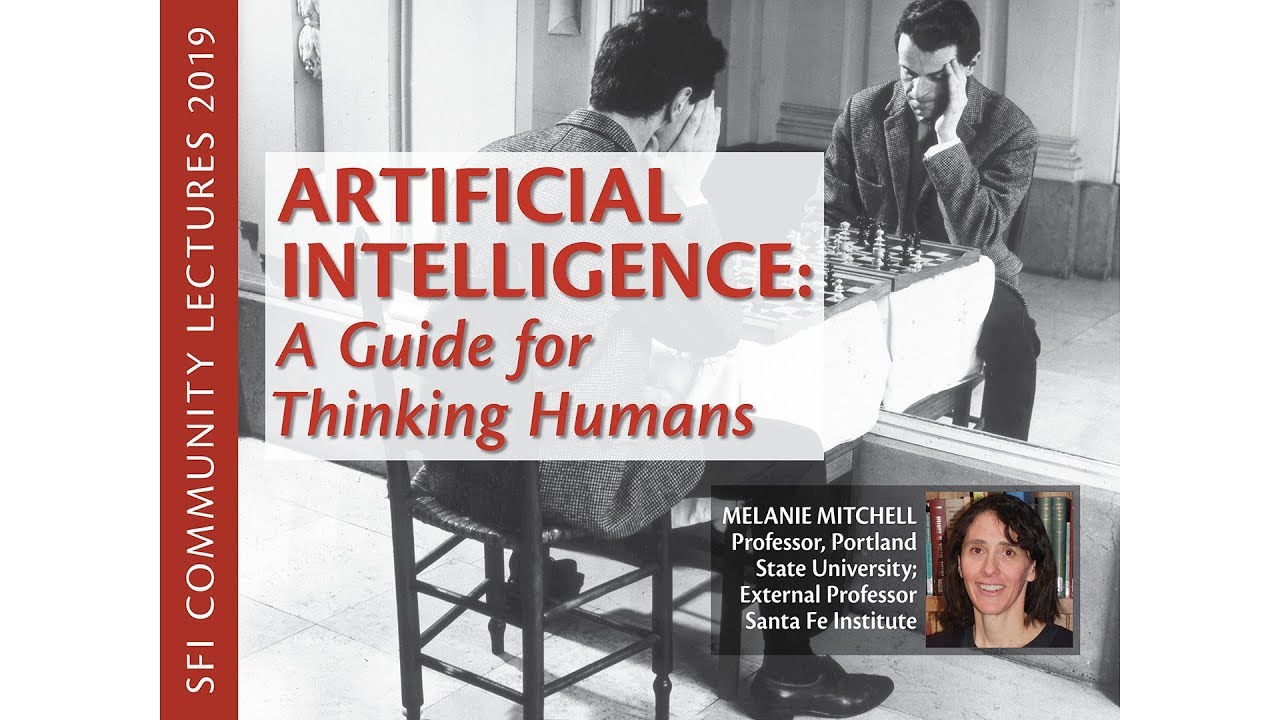

Melanie Mitchell

Portland State University, Santa Fe Institute

Artificial intelligence has been described as “the new electricity,” poised to revolutionize human life and benefit society even more than electricity did 100 years ago. AI has also been described as “our biggest existential threat,” a technology that could “spell the end of the human race.” Should we welcome intelligent machines or fear them? Or perhaps question whether they are actually intelligent at all? In this talk, researcher and award-winning author Melanie Mitchell describes the current state of AI, highlighting the field’s recent stunning achievements and its surprising failures. She considers the ethical issues surrounding the increasing deployment of AI systems in all aspects of our society, and closely examines the prospects for imbuing computers with humanlike qualities.

Melanie Mitchell is Professor at Portland State University and External Professor at the Santa Fe Institute. She is widely known for her research and teaching in artificial intelligence and complex systems. Her general-audience book, Complexity: A Guided Tour, won the 2010 Phi Beta Kappa Science Book Award and was named by Amazon as one of the ten best science books of 2009. Her newest book, Artificial Intelligence: A Guide for Thinking Humans, was recently published by Farrar, Straus, and Giroux.


Lecture begins at 3:50

Excellent prez!

my ears thank you.

So if a Tesla is on Autopilot on the highway and a vehicle in front is stationary, the Tesla has not been trained to recognise that and stop?

How come the Tesla system did not have a parallel channel processing distance information from its sensors, thereby detecting the danger and overriding to stop before it was too late?

There are cars with no autopilot, but with radar-controlled cruise control, that can do that. And my own car, even though it does not have that and is pretty dumb, can still recognise an imminent collision and begin braking for it.

So why would an intelligent car do worse?

Computers took over the world decades ago and we have been diligently serving them ever since. The fact that they are dumb has not been an impediment. They just leave the clever stuff to us menial humans as there are enough of us to do the trivial clever stuff for them. We build them, repair them, power them, program them, obey them, and can't live without them. A true symbiosis. Without them, the human population of 8 billion could not be supported, so there is no way back. Besides, no one wants to go back.

So the problem is how to manage ourselves so that we do not suffer too much from the dumbness of our machines. This becomes especially important with, e.g., autonomous cars (with their long tail of edge cases that defy categorisation and rely on deep human knowledge) and with things such as the stupidity of the 737 MAX 8 fiasco, in which the humans forgot to do their part (i.e. applying intelligence), and so on.

Software and hardware are now ideological. This affects decisions when buying equipment and the software and firmware it runs. Huawei is just the tip of the iceberg. It is sad that something so beneficial ends up being perverted by politics and power plays between competing ideological blocs. It is sad that the internet has provided silos and echo chambers that have destroyed democracy. It is sad that the internet puts all our eggs in one basket, making us prone to the same malware affecting millions of us, especially when our infrastructure is controlled and managed via the internet. This is all very sad for us engineers who have built this stuff with the aim of improving the lot of humankind.

The way to do self-driving cars is to simplify the environments in which they operate. Just like we do with trains and planes and boats. They need their own areas that are off-limits to pedestrians, cyclists, etc. We accept the fact that trains cannot stop and anyone who gets in the way of them is doomed. We may have to similarly accept this with autonomous vehicles. In the USA, there is more opportunity to separate vehicles and people. Not so in Europe. But if vehicles can be allowed to move at speed only on routes from which pedestrians and cyclists are forbidden, that would be a start.

Thanks, nice tutorial. I learnt something.

I subscribed.


Elephant in the room: subjective conscious qualia. Whether or not you believe in qualia, if the concept is real (if the Dennett/Hofstadter camp turns out to be wrong and the Chalmers/Penrose camp right), and if humans and other sentient minds think partly in the form of non-physical qualia, then we might face a big problem achieving genuine AGI, because there is no evidence that a computation based on deterministic processes can end up having subjective mental qualia. I know Jackson turned his back on his epistemological argument, but it still holds water, as do all the arguments in favour of fundamental subjective qualia made over the years by Chalmers. This is not to say machines cannot get as close to human-level skill as the brute-force compute resources we can muster allow; just that there could be basic obstacles, fundamental limits to any in-principle level of machine intelligence, assuming human intelligence is not completely mechanical. No proofs, just sayin'. Certainly, I am not against trying to achieve AGI. It would make our lives immeasurably easier, provided workers, not the oligarchs, get to control the AI. Our oligarchs are the existential threat, not the machines.

Is the question at 1:04:00 a bit dumb? You do not need to worry too much about Chinese investment in AI. They borrowed "intellectual property" from the USA; the USA can "borrow" it from them. Once tech is put out into the world, people can reverse-engineer it. No one engineer or firm has a monopoly on ideas and the math behind them. That's one reason why "intellectual property" is a bullsh*t term, made up by lawyers to help capitalists profit from the work of engineers. At best, protecting IP with patents gives a 2- or 3-year advantage. But patents on software should be illegal: it is akin to patenting a mathematical theorem (q.v. Richard Stallman and the four software freedoms). No one can stop me thinking. If I reinvent software someone has already patented, then f__k them if they want to keep it secret; if I thought of the algorithm myself, it is my property, and I will share it free (libre) and open source.

About image recognition: the man in the photo with the Herbert Simon quote (09:30) is actually Dick Raaijmakers. Very interesting guy too 🙂

Would today's AI recognize an actor who is playing a role?

Today I learned that Elon Musk is a liar because he's trying to speed up the process with AI.

One can foresee a time when an advanced AGI will determine which babies to cull at birth because their anticipated IQ will never exceed 100, and then send them to labor nurseries.