3Blue1Brown

Help fund future projects: https://www.patreon.com/3blue1brown

An equally valuable form of support is to simply share some of the videos.

Special thanks to these supporters: http://3b1b.co/nn3-thanks

Written/interactive form of this series: https://www.3blue1brown.com/topics/neural-networks

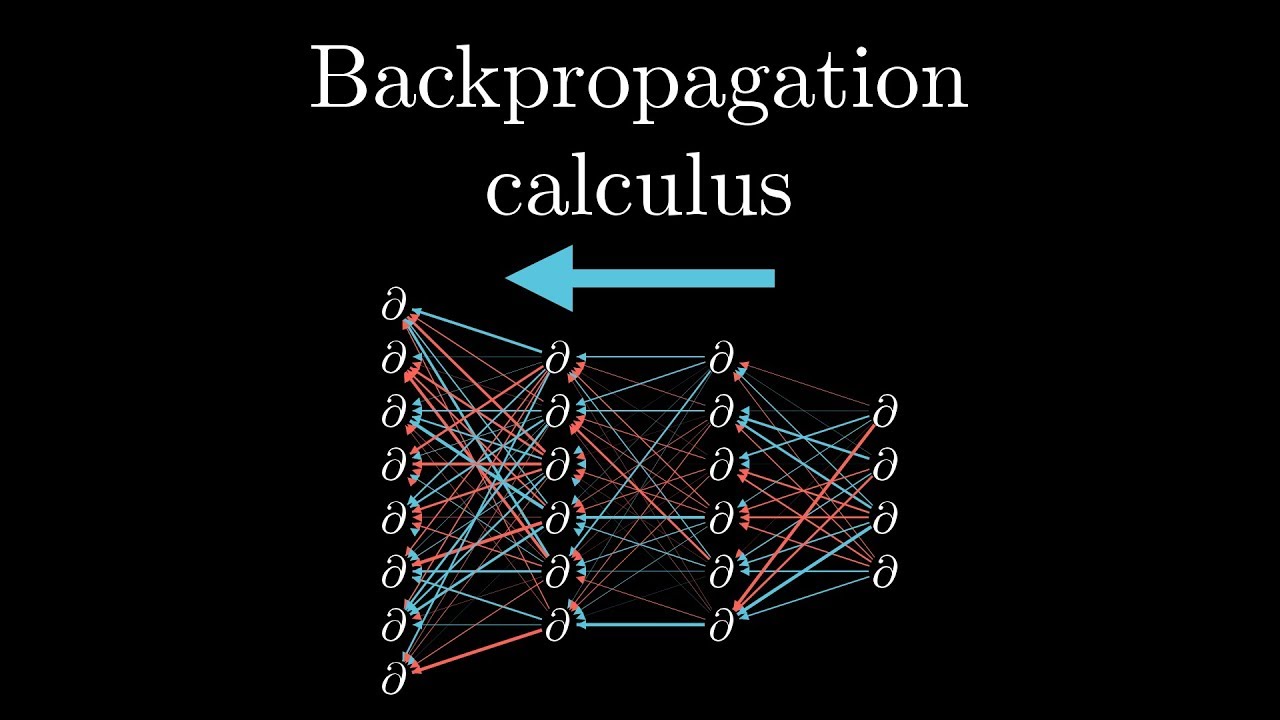

This one is a bit more symbol-heavy, and that’s actually the point. The goal here is to represent in somewhat more formal terms the intuition for how backpropagation works in part 3 of the series, hopefully providing some connection between that video and other texts/code that you come across later.

For more on backpropagation:

http://neuralnetworksanddeeplearning.com/chap2.html

https://github.com/mnielsen/neural-networks-and-deep-learning

http://colah.github.io/posts/2015-08-Backprop/

Music by Vincent Rubinetti:

https://vincerubinetti.bandcamp.com/album/the-music-of-3blue1brown

——————

Video timeline

0:00 – Introduction

0:38 – The Chain Rule in networks

3:56 – Computing relevant derivatives

4:45 – What do the derivatives mean?

5:39 – Sensitivity to weights/biases

6:42 – Layers with additional neurons

9:13 – Recap

——————

3blue1brown is a channel about animating math, in all senses of the word animate. And you know the drill with YouTube, if you want to stay posted on new videos, subscribe, and click the bell to receive notifications (if you’re into that): http://3b1b.co/subscribe

If you are new to this channel and want to see more, a good place to start is this playlist: http://3b1b.co/recommended

Various social media stuffs:

Website: https://www.3blue1brown.com

Twitter: https://twitter.com/3Blue1Brown

Patreon: https://patreon.com/3blue1brown

Facebook: https://www.facebook.com/3blue1brown

Reddit: https://www.reddit.com/r/3Blue1Brown

Source

Two things worth adding here:

1) In other resources and in implementations, you'd typically see these formulas in some more compact vectorized form, which carries with it the extra mental burden to parse the Hadamard product and to think through why the transpose of the weight matrix is used, but the underlying substance is all the same.

2) Backpropagation is really one instance of a more general technique called "reverse mode differentiation" to compute derivatives of functions represented in some kind of directed graph form.

How long will it take to read the Nielson's book?

Thank you very much.

There cannot be a better tutorial to understand back propagation than this video. Thanks again for the effort to make us understand the complex mathematical theory behind this.

This video is breathtaking…

Took me some time to understand, to backprop you need 3 things: the model, loss (scalar) and input vector

Thank you for such a simplified explanation. It truly helps:)

Thank you. For everything you do. This channel is a treasure

Where does it actually modify the weights? Next video?

At 5:31, why did you put the partial derivatives with respect to w and b in the same column? Shouldn't it be a two-column vector if you are accounting for 2 variables for all samples?

Beautiful animation, would like to see a behind the scene of how this was done. As I understood this is a specialized software you've build yourself for math animations?

why is a n_L-1 over the sigma at 7:36? for some reason i don't get this. In our example we have two j's: 0 and 1 or am I wrong?

I appreciate any form of help!

I, uh, what. I think I might need another year of math

Now that you have experienced the work of God in the last days, what exactly is God’s disposition? Do you dare to say that God is a God who only speaks? You dare not make such a rule. Some people also say that God is the God who opens mysteries, and God is the Lamb who breaks the seven seals. No one dares to make such a rule.

The mathematics is only true science, the rest is its applications!

ECE 449 UofA

It would be nice to see all these calculations with real numbers done, back to front. Maybe call it a Chapter 5 🙂

This is it. Finally it clicked. After countless hours of trying to understand this, I finally somewhat understand neural networks

4:00

god bless you for these amazing explanations and animations.

Basically, like any other gradient descent in other machine learning algorythm(linreg, logreg, etc), is just

Param := param – derrivative(cost function)

*note

:= is update

Just that in nn, the cost function is more massive, and looking complex

Is that the basic most fundamental concept ? Did i get it right ?

Thank you so much! I have understood more math from this channel than from all teachers I have had in high school or university in total.

Brilliant and Excellent.

Great video! I just don't know if I'm missing something, because I thought we didn't use this cost function when working with neural networks. As we activates every neuron with the sigmoid, the cost function defined as (a – y)^2 gives us a non-convex cost function wich has multiple local minimums. So a better choice is to use the cost function from logistic regression I think.

I'm not sure tho if I'm correct or if I'm really just missing something.

Amazing video anyway, you made me understand neural networks so much better with these series. I'm from Brazil and I'm taking machine learning classes here at my university, and I wouldn't be able to understand backpropagation without you, thank you very much!

easy to digest video

How easy it is to understand this through your lectures in just 10minutes. THANK YOU.

Thanks

I feel that the video could have benefitted a lot by going into the L-1 layer weights. I'm still a bit confused as to how they're computed in the backward pass

Man, I really appreciate your fantastic work! Do you read the texts when you record, or do you speak spontaneously? You are very good at that, and I am very bad. 😊That's why I wanted to ask you, maybe you should make a tutorial about that, too 😬

3:42 That's not really the chain rule, because it works differently with partial derivatives. In this case, the other terms are zero, so you're left out with that formula, which coincidentally looks the same as the one from single-variable calculus.

Funny to see the number of views drop from video to video.

Congrats every one!

I think I'm in love with you, amazing teacher!

One of the best videos watched. Well explained ..

thanks a lot this helped me a lot understanding what is going on with gradiant descent , and sure yall dont hesitate to watch this video multiple times to get it right

From what I understand, finding the global minima of cost function is what we need, does gradient descent ensure that. I think it would end up in local minima.

POV: You look away for a few minutes in class.

Thank you so much for this video. This is the best explanation of backprop algo and neural networks.

how to you aply nudges to the weights though ? You didn't explain that at all

Great video, missing a few things though.

Ok, so I've calculated how sensitive each weight and bias are, but how much do I actually tweak them by?

0:38 코딩으로 구현

is it a mistake on the last slide that del cost/del activation is a summation with terms from layer (L+1)? i thought that it should go backwards and thus be (L-1)?

edit: nvm i see

GREAT I GOT IT FROM FIRST TIME

One doesn't need synapses to say that an image of a 2 is NOT a 1, and not a 3 and not a 4 and not a 5 and so on, but one need the synapses that tells you if it can NOT be a 2.

Thanks a lot for creating such a fantastic content! Anticipating to see more videos about AI, ML, Deep Learning!

This explanation is so much better than the rest of the internet. Big fan of the channel.

10k of your views on this video are going to be my chimp brain trying to understand backpropagation 🤣😂

This is truly awesome, as pedegogy and as math and as programming and as machine learning. Thank you! …one comment about layers, key in your presentation is the one-neuron-per-layer, four layers. And key in the whole idea of the greater description of the ratio of cost-to-weight/cost-to-bias analysis, is your L notation (layer) and L – 1 notation. Problem, your right most neuron is output layer or "y" in your notation. So one clean up in the desction is to make some decisions: the right most layer is Y the output (no L value), because C[0]/A[L] equals 2(A[L] – y). So the right most three neurons, from right to left, should be Y (output), then L then L minus one, then all the math works. Yes?