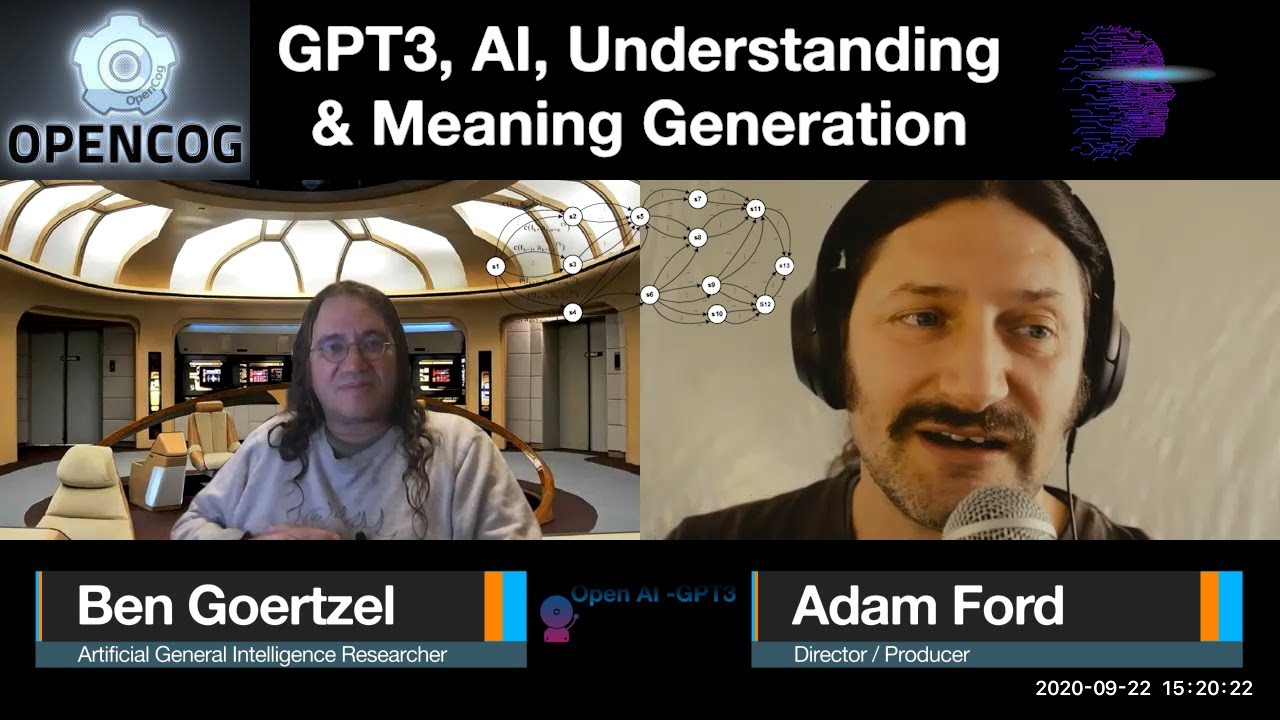

Science, Technology & the Future

Ben Goertzel is interviewed by Adam Ford on the current state of play on the road to AGI, including the need for AI to generate concise abstract representations and the amazingly funky text generation of transformer networks (GPT-3 being a popular example). What is missing in AI? Symbol grounding, the meaning of meaning and understanding, proto-transfer learning and more.

01:14 Is #GPT3 on the direct path to #AGI?

04:37 Interesting and crazy output of GPT3 – Conjuring Philip K Dick through transformer neural net experimentation

09:26 Faking understanding: the propensity of GPT3 and other transformer ANNs to produce gibberish some of the time reduces their practical real-world use.

13:16 GPT3 training data contains distillations of human understanding. Difficulties in developing generative document summarizers.

15:33 Occam’s Razor & whether adding vastly more parameters makes a remarkable difference in transformer network capability

23:46 Transformer models in music

27:13 What’s missing in AI? Symbol grounding and abstract representation

30:34 Minimum requirements for symbol grounding in AGI – need for systems that can generate compact abstract representations

34:57 Paper: Symbol Grounding via Chaining of Morphisms https://arxiv.org/abs/1703.04368

39:52 Paper: Grounding Occam’s Razor in a Formal Theory of Simplicity https://arxiv.org/abs/2004.05269

46:12 OpenCog Hyperon https://wiki.opencog.org/w/Hyperon

50:44 What is meaning? Are compact abstract representations required for meaning generation?

54:51 What are symbols? How are they represented in transformer networks? How would they ideally be represented in an AGI system?

59:08 Understanding, compression and Occam’s Razor – and the need for compact abstract representations in order to achieve generalization

1:03:08 Integrating large transformer ANNs – a modular approach

1:08:43 Proto transfer learning using concise abstract representations

1:12:15 What’s missing in AI atm? What’s on the horizon?

1:14:43 Other AGI projects – “Replicode: A Constructivist Programming Paradigm and Language” – Kristinn R. Thórisson: https://zenodo.org/record/7009

1:14:43 Graph processing units are here (the singularity must be near!)

1:20:28 Why people think it’s impossible to achieve AGI this century

1:24:46 The prospect of living to see AGI occur

1:26:04 Superintelligent singleton hard takeoffs and race conditions between competing AGI projects

1:28:49 Centralized AGI development vs it being in the hands of a teeming mass of unorganized humans

1:30:14 The Trump/Biden presidential elections

1:31:28 Looking forward to an AGI ‘RObama’-run government

#OccamsRazor #AI #Superintelligence

Many thanks for tuning in!

Consider supporting SciFuture by:

a) Subscribing to the SciFuture YouTube channel: http://youtube.com/subscription_center?add_user=TheRationalFuture

b) Donating

– Bitcoin: 1BxusYmpynJsH4i8681aBuw9ZTxbKoUi22

– Ethereum: 0xd46a6e88c4fe179d04464caf42626d0c9cab1c6b

– Patreon: https://www.patreon.com/scifuture

c) Sharing the media SciFuture creates

Kind regards,

Adam Ford

– Science, Technology & the Future – #SciFuture – http://scifuture.org