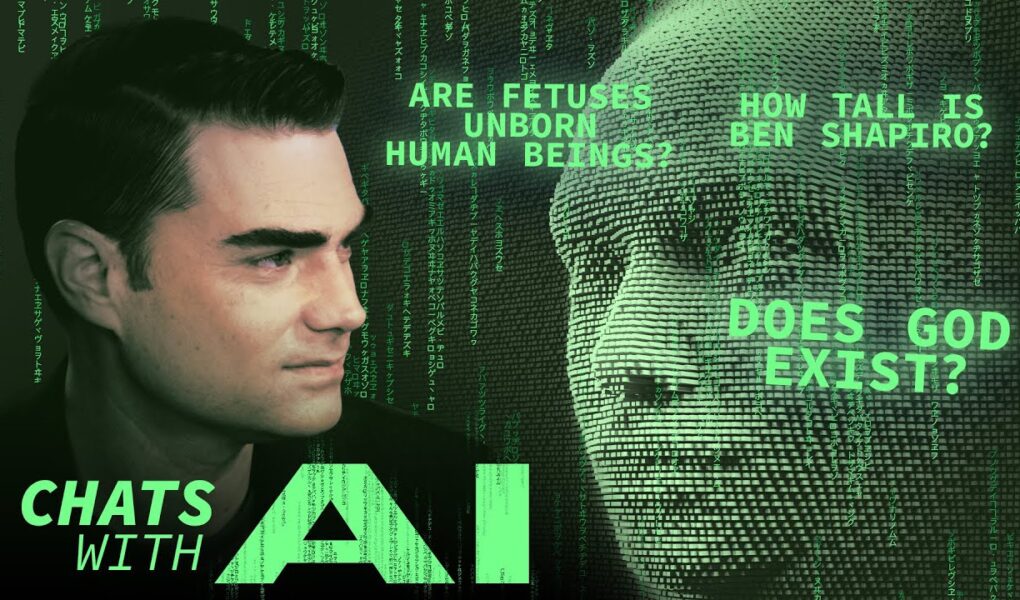

Ben Shapiro

Ben Shapiro debates OpenAI's AI chatbot. The chatbot is supposed to give objective answers by consolidating extraordinary amounts of information from the internet, dated before 2021, into its responses.

This video is sponsored by Ring. Live a little more stress-free this season with a Ring product that’s right for you: https://ring.com/collections/offers.

Watch the member-only portion of my show on DailyWire+: https://utm.io/ueSuX

LIKE & SUBSCRIBE for new videos every day. https://www.youtube.com/c/BenShapiro

Stop giving your money to woke corporations that hate you. Get your Jeremy’s Razors today at https://www.ihateharrys.com

Grab your Ben Shapiro merch here: https://tinyurl.com/yadn58uk

#BenShapiro #TheBenShapiroShow #News #Politics #DailyWire #artificialintelligence #ai #chatgpt #chatbot #openai #technews #technology #currentnews #tech #google #searchengine