CIIR Talk Series

Speaker: Rodrigo Nogueira, UNICAMP (Brazil) and NeuralMind

Website: https://sites.google.com/site/rodrigofrassettonogueira/

Faculty Host: Hamed Zamani, Assistant Professor at UMass Amherst

Talk Title: Computational Tradeoffs in the Age of Neural Information Retrieval

Abstract:

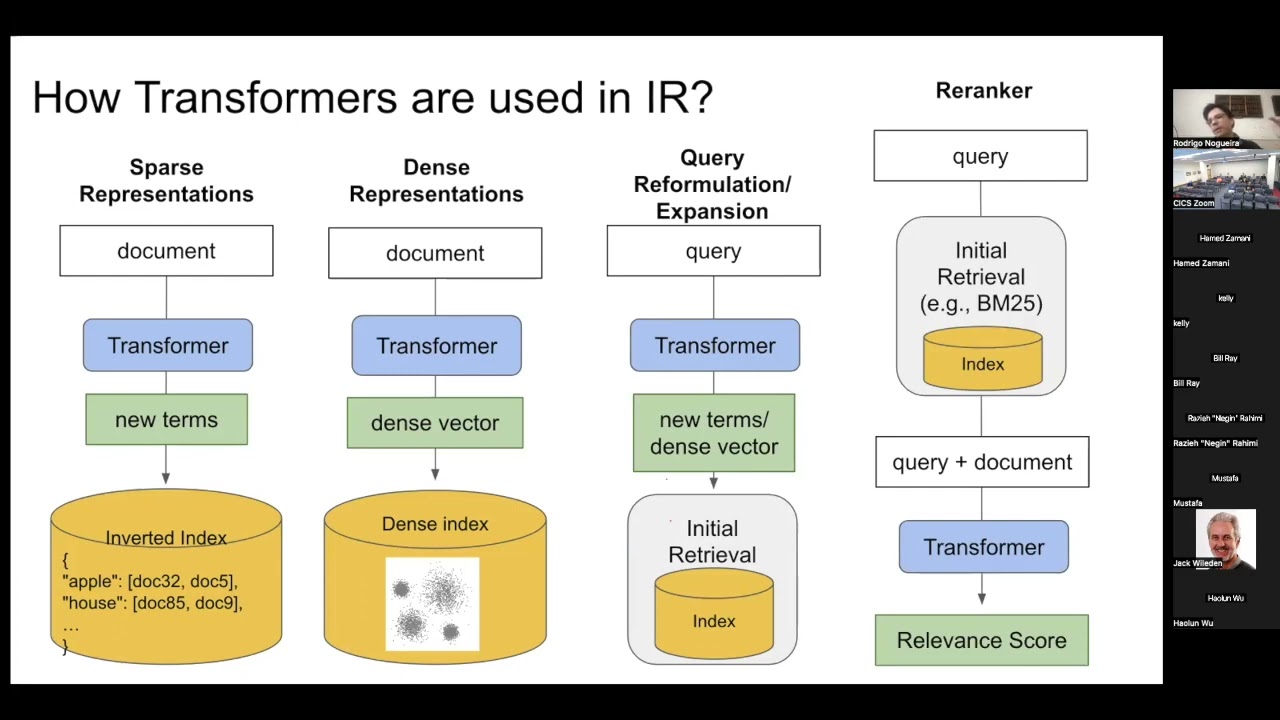

A remarkable trait of information retrieval tasks, compared with other NLP tasks, is their computationally intensive nature, which becomes even more pronounced in the age of large language models. In this talk, we will discuss three themes involving computational trade-offs that IR practitioners and researchers often face when using pretrained Transformers. In the first part, we will discuss different ways of using neural networks in a search engine pipeline and their trade-offs between effectiveness (quality of results) and efficiency (e.g., query latency, indexing time). We will compare how well they generalize to new domains and show that a particular type of dense retriever generalizes poorly. In the second part, we will present two ways of using expensive models (such as GPT-3) in IR tasks while avoiding their prohibitive computational costs at indexing and retrieval time. In the third part, we will show how the findings from the first two parts can be combined to build a search engine that outperforms Google (with the help of Google and Bing).

Bio:

Rodrigo Nogueira is an adjunct professor at UNICAMP (Brazil), a senior research scientist at NeuralMind (Brazil), and a consultant to multiple companies that want to improve search using deep learning. He is one of the authors of the book “Pretrained Transformers for Text Ranking” and a pioneer in using pretrained Transformers in IR tasks. He holds a Ph.D. from New York University (NYU), where he worked at the intersection of deep learning, natural language processing, and information retrieval under the supervision of Prof. Kyunghyun Cho.