Big Think

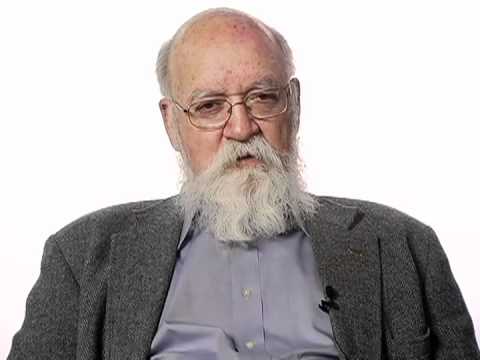

Daniel Dennett with the argument against humanoid robots.

Question: Are you an advocate of furthering AI research?

Daniel Dennett: I think that it’s been a wonderful field and has a great future, and some of the directions are less interesting to me and less important theoretically, I think, than others. I don’t think it needs a champion. There’s plenty of drive to pursue this research in different ways.

What I don’t think is going to happen, and what I don’t think it’s important to try to make happen: I don’t think we’re going to have really conscious humanoid agents anytime in the foreseeable future. And I think there’s not only no good reason to try to make such agents, but there are some pretty good reasons not to try. Now, that might seem to contradict the fact that I worked on the Cog project at MIT, which was of course an attempt to create a humanoid agent, cogent, Cog, and to implement the multiple drafts model of consciousness, my model of consciousness, on it.

We sort of knew we weren’t going to succeed, but we were going to learn a lot about what had to go in there. And that’s what made it interesting: we could see, by working on an actual project, what some of the really most demanding contingencies and requirements and dependencies were.

It’s proof of concept. You want to see what works but then you don’t have to actually do the whole thing.

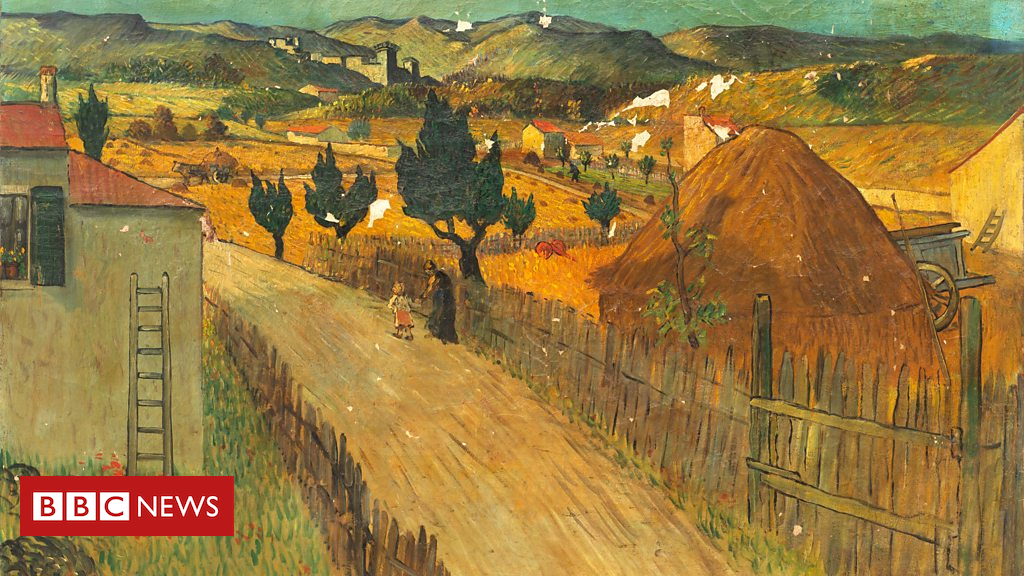

I compare this to the following: imagine the task of robotics, of designing and building a robotic bird which could, you know, weigh three or four ounces, fly around the room, catch flies, and land on a twig.

Is it possible in principle to make such a robotic bird? I think it is possible in principle.

What would it cost? Oh much more than sending people to the moon. It will dwarf the Manhattan Project. It would be a huge effort and we wouldn’t learn that much.

We can learn by doing the parts, by understanding bird flight and bird navigation. We can do that without ever putting it all together, which would be a colossal expense and not worth it.

There are plenty of birds; we don’t need that. We don’t need to make any, and we can make quasi-birds. In fact, they are making little tiny robotic surveillance flying things. They don’t perfectly mimic birds; they don’t have to. And that’s the way AI should go as well.

Recorded on Mar 6, 2009.


has this guy heard of Myon?

why duplicate humans? there is no reason to do it; we have billions of humans already. Make something better and more useful

and Charles Darwin was a thief who stole his assistant's work and studies and claimed it as his own; and it does not stand up against the archaeology that has been buried by the Smithsonian Inst.

and no NASA astronaut went to the Moon and Curiosity did not go to Mars; those are just money scams

You can go to MIT and shoot a laser to bounce off the reflector that's located on the Moon.

I guess your claim has a good explanation for that.

I've been researching Kurzweil extensively, now it's time to see what Dan has to say.

The human brain is a complex computer, without free will. Can't computers be considered AI? They can still "learn" by having programs and data saved into them. Much like humans learn by receiving stimulus input and saving it in our brain.

There is a big difference between constructing a generic AI computer and an AI agent. We ideally want the former, an all-purpose tool that can compute things without the heavy workload of trying to figure out how to teach it. It could learn by itself. An AI agent is not useful to us, and that is where Dennett's argument comes in. Give me your results and do the tasks that I tell you. If my commands are not specific enough, ask me to specify. Don't do things with your own "initiative". That's where problems can occur. But a generic AI machine would be infinitely useful. It would be the greatest tool ever created.

I always like to think like this –

Imagine a computer that's at least as complex as a human brain. It has to defrag itself. If it can think, and by definition I think it can, then exactly what would it do when it defrags? What would it do physically and what would it be "thinking" while it was rearranging that data? All that sensory input, all those images and sounds.

The data would have to be partially read in order to do so, and I expect the amount of processing power would be a lot. It would have to shut down physically at least partially for stretches of the day in order to get it done.

This I imagine would be like sleep, and those dreams would be those of the electric sheep variety…

Any objections?

LAME IN THE BRAIN knows a lot about artificial intelligence; one can only say that his "intelligence" is already there.

There are robotic birds that fly like real birds. There's a video of one demonstrated in a TED talk 7 years ago.

Of course there are good reasons to try: if we can understand the human mind, we can improve on it and remove limitations.