Henry AI Labs

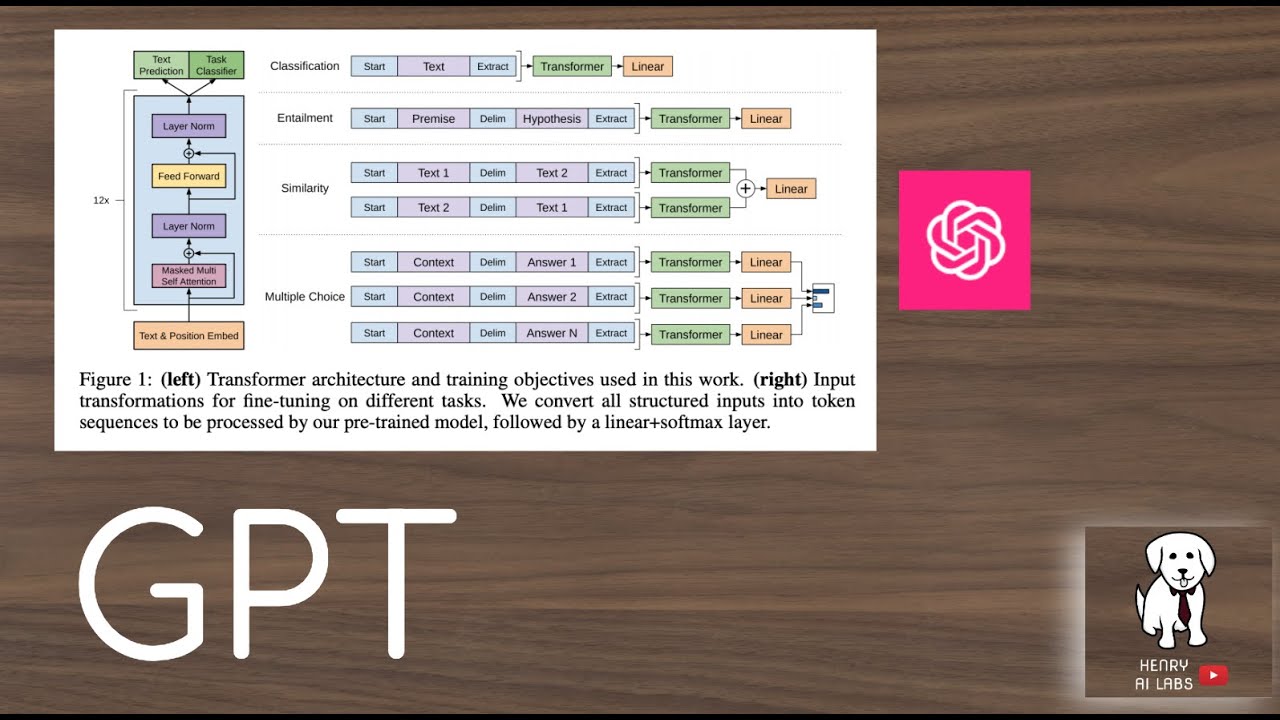

This video explains the original GPT model from the paper “Improving Language Understanding by Generative Pre-Training”. I think the key takeaways are: the unlabeled text dataset used for pre-training (BooksCorpus), whose long stretches of contiguous text let the language model learn to use longer-range context; the way inputs are formatted for supervised fine-tuning; and the different NLP tasks the model is evaluated on!
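To make the input formatting point concrete, here is a minimal Python sketch of the task-specific input transformations the paper describes: each structured input is flattened into one token sequence wrapped in start and extract tokens, with a delimiter between parts. The token strings (`<s>`, `<$>`, `<e>`) and the function names are placeholders I made up for illustration, not the paper's actual vocabulary entries or code.

```python
# Hypothetical special-token strings; the paper adds randomly initialized
# start, delimiter, and extract tokens, but these spellings are assumed.
START, DELIM, EXTRACT = "<s>", "<$>", "<e>"


def format_classification(text):
    """Single-text tasks (e.g. CoLA): start + text + extract."""
    return [f"{START} {text} {EXTRACT}"]


def format_entailment(premise, hypothesis):
    """Entailment (e.g. MultiNLI): premise and hypothesis joined by a delimiter."""
    return [f"{START} {premise} {DELIM} {hypothesis} {EXTRACT}"]


def format_similarity(text_a, text_b):
    """Similarity (e.g. Quora Question Pairs): there is no natural ordering,
    so both orderings are built and processed independently."""
    return [
        f"{START} {text_a} {DELIM} {text_b} {EXTRACT}",
        f"{START} {text_b} {DELIM} {text_a} {EXTRACT}",
    ]


def format_multiple_choice(context, question, answers):
    """Multiple choice / QA (e.g. RACE): one sequence per candidate answer,
    each pairing the context and question with that answer."""
    return [
        f"{START} {context} {question} {DELIM} {answer} {EXTRACT}"
        for answer in answers
    ]


if __name__ == "__main__":
    for seq in format_similarity("How do I learn NLP?", "What is the best way to study NLP?"):
        print(seq)
```

In the paper, the transformer's final activation at the extract token is what gets fed to the small task-specific output layer, so the same pre-trained model can be reused across tasks with minimal architecture changes.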

Paper Links:

GPT: https://s3-us-west-2.amazonaws.com/openai-assets/research-covers/language-unsupervised/language_understanding_paper.pdf

DeepMind “A new model and dataset for long range memory”: https://deepmind.com/blog/article/A_new_model_and_dataset_for_long-range_memory

SQuAD: https://rajpurkar.github.io/SQuAD-explorer/explore/v2.0/dev/Oxygen.html?model=BiDAF%20+%20Self%20Attention%20+%20ELMo%20(single%20model)%20(Allen%20Institute%20for%20Artificial%20Intelligence%20[modified%20by%20Stanford])&version=v2.0

MultiNLI: https://www.nyu.edu/projects/bowman/multinli/

RACE: https://arxiv.org/pdf/1704.04683.pdf

Quora Question Pairs: https://www.quora.com/q/quoradata/First-Quora-Dataset-Release-Question-Pairs

CoLA: https://arxiv.org/pdf/1805.12471.pdf

Thanks for watching! Please Subscribe!