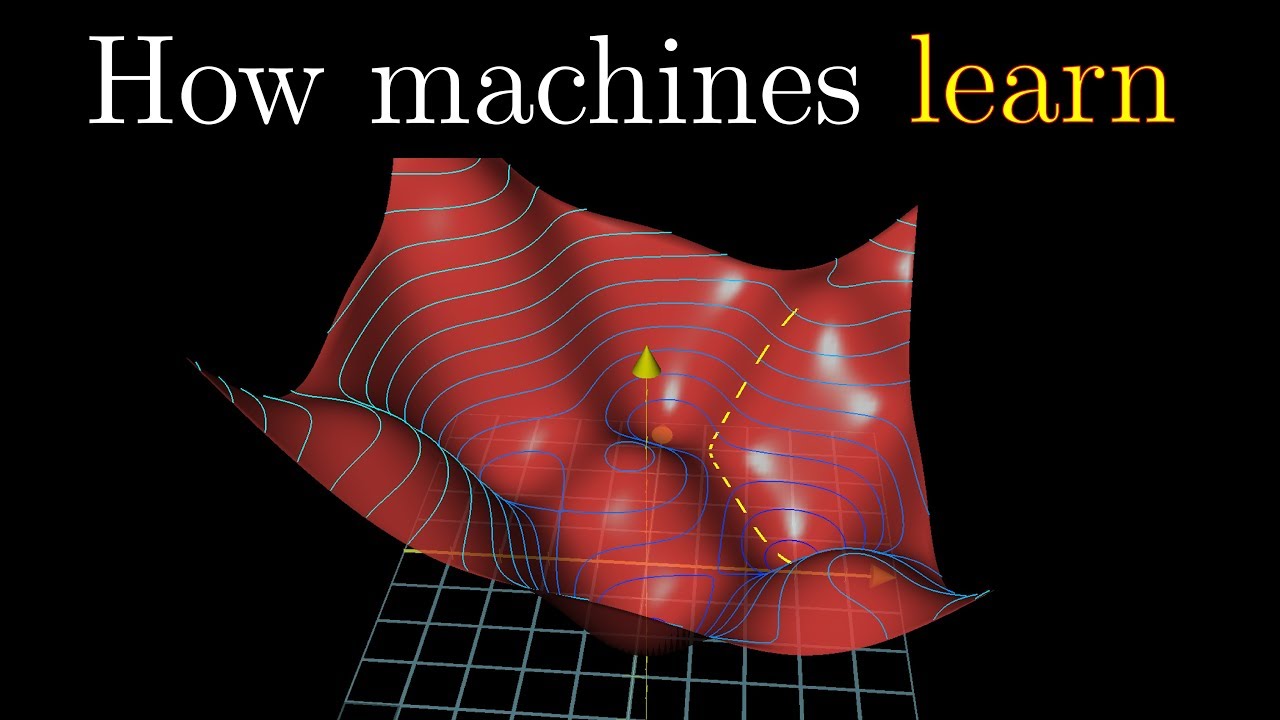

3Blue1Brown

Home page: https://www.3blue1brown.com/

Brought to you by you: http://3b1b.co/nn2-thanks

And by Amplify Partners.

For any early stage ML startup founders, Amplify Partners would love to hear from you via 3blue1brown@amplifypartners.com

To learn more, I highly recommend the book by Michael Nielsen

http://neuralnetworksanddeeplearning.com/

The book walks through the code behind the example in these videos, which you can find here:

https://github.com/mnielsen/neural-networks-and-deep-learning

MNIST database:

http://yann.lecun.com/exdb/mnist/

Also check out Chris Olah’s blog:

http://colah.github.io/

His post on Neural networks and topology is particularly beautiful, but honestly all of the stuff there is great.

And if you like that, you’ll *love* the publications at distill:

https://distill.pub/

For more videos, Welch Labs also has some great series on machine learning:

https://youtu.be/i8D90DkCLhI

https://youtu.be/bxe2T-V8XRs

“But I’ve already voraciously consumed Nielsen’s, Olah’s and Welch’s works”, I hear you say. Well well, look at you then. That being the case, I might recommend that you continue on with the book “Deep Learning” by Goodfellow, Bengio, and Courville.

Thanks to Lisha Li (@lishali88) for her contributions at the end, and for letting me pick her brain so much about the material. Here are the articles she referenced at the end:

https://arxiv.org/abs/1611.03530

https://arxiv.org/abs/1706.05394

https://arxiv.org/abs/1412.0233

Music by Vincent Rubinetti:

https://vincerubinetti.bandcamp.com/album/the-music-of-3blue1brown

——————

3blue1brown is a channel about animating math, in all senses of the word animate. And you know the drill with YouTube, if you want to stay posted on new videos, subscribe, and click the bell to receive notifications (if you’re into that).

If you are new to this channel and want to see more, a good place to start is this playlist: http://3b1b.co/recommended

Various social media stuffs:

Website: https://www.3blue1brown.com

Twitter: https://twitter.com/3Blue1Brown

Patreon: https://patreon.com/3blue1brown

Facebook: https://www.facebook.com/3blue1brown

Reddit: https://www.reddit.com/r/3Blue1Brown


Part 3 will be on backpropagation. I had originally planned to include it here, but the more I wanted to dig into a proper walk-through for what it's really doing, the more deserving it became of its own video. Stay tuned!

Would have been nice to have the images in a more accessible format. Expecting anyone following along to be using unix and python is a bit elitist.

10:12 I'm guessing the assumption that biological neurons are binary is based on outdated data, since new evidence shows they aren't.

I actually read the first 2 chapters and the last one of the book before finding this video, and I can't deny that, even as a master's student in signal processing, I found your videos a great help in seeing PCA and vector and matrix transformations.

00:18:10 Why would we expect the outputs to be random if we are labeling the images? Even though the labels are incorrect, we are still training on those incorrect labels, so why wouldn't we expect the original level of accuracy?

15:30 Can we add one more neuron to the last layer representing "none of the above"? Has anyone done something like this? Or would it be too difficult to train, since there are too many ways an image can fail to be a digit? I am very curious about it.

Many, many thanks for your videos, so clean and robust. And animated, yeees….

That many local minima give equal quality was the most surprising part to me! But after some pause and pondering, I think it makes sense; even a complex equation can have more than one solution that makes its outcome correct.

So what you're telling me is that machines won't take over the world unless someone tells them to.

Is it just me or the voice of Lisha Li is really orgasmic

I think the second layer looks so weird just because the pixels at the edges are barely ever used so they had no motivation to change.
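That edge-pixel intuition can be checked with a small sketch. Assuming a squared-error cost and a single sigmoid neuron (a simplification; in a deeper network the first-layer weight gradient still has the same factor of the input pixel), the gradient for weight w_i is proportional to the pixel value x_i, so a pixel that is always 0 never pushes its weight away from its random starting value. The function and the numbers below are hypothetical illustrations.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def weight_gradients(weights, bias, pixels, target):
    """Gradient of C = (a - y)^2 for one sigmoid neuron a = sigmoid(w . x + b)."""
    z = sum(w * x for w, x in zip(weights, pixels)) + bias
    a = sigmoid(z)
    # dC/dz = 2(a - y) * sigmoid'(z), and sigmoid'(z) = a(1 - a)
    delta = 2.0 * (a - target) * a * (1.0 - a)
    # dC/dw_i = delta * x_i, so a pixel with x_i == 0 contributes no gradient
    return [delta * x for x in pixels]

weights = [0.3, -0.7, 0.5, 0.1]
pixels = [0.0, 0.9, 0.4, 0.0]  # first and last "edge" pixels are blank
grads = weight_gradients(weights, 0.2, pixels, target=1.0)
```

The blank pixels get a zero gradient on every training example, which is consistent with their weights staying noisy in the visualizations.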

Do you know a good source for how to make a cost function?
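A minimal sketch of the cost described in the video: for one training example, add up the squares of the differences between the network's 10 outputs and the desired outputs, then average that over the training examples. (Nielsen's book defines the same quadratic cost with an extra factor of 1/2.) The outputs below are made-up numbers, not results from a trained network.

```python
def example_cost(outputs, desired):
    """Squared-error cost for one training example."""
    return sum((a - y) ** 2 for a, y in zip(outputs, desired))

def average_cost(batch):
    """Average the per-example cost over (outputs, desired) pairs."""
    return sum(example_cost(o, d) for o, d in batch) / len(batch)

def one_hot(digit, size=10):
    """Desired output: 1.0 for the correct digit, 0.0 everywhere else."""
    return [1.0 if i == digit else 0.0 for i in range(size)]

batch = [
    # Fairly confident, correct "3"
    ([0.1, 0.0, 0.0, 0.8, 0.0, 0.0, 0.0, 0.0, 0.0, 0.1], one_hot(3)),
    # Unsure between "0" and "1" when the answer is "1"
    ([0.5, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0], one_hot(1)),
]
cost = average_cost(batch)
```

Here the first example costs 0.06 and the second 0.5, so the average is 0.28.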

For the mislabelled data…are they all consistently mislabelled? Are all cows labeled as puppies or are different images of cows labeled differently (e.g. cow_1 labeled as a puppy, cow_2 labeled as a fork)?

If they are all labeled consistently, then it should be the same as the original (it doesn't matter what we humans call it).

But if they are all labeled randomly and differently… what do you mean it was able to achieve the same test accuracy? That after training, if we input the data for cow_1 it predicts that it's a puppy, and if we input cow_2 it predicts that it's a fork? In other words, rather than a categorization where all forks are predicted as forks and all non-forks are not labeled as forks, it's basically a one-to-one image ↔ label mapping, with all images somewhat independent of each other? And it wasn't able to pick up an abstraction or generalization, so the best it can do is stay at the level of "propositional logic" and not go higher?

Ah, OK, I think I understand better now. That's what you meant by "memorising". Well… it can't be helped: you are basically declaring all input data as true, so the NN has no choice but to take your word for it that puppies are indeed forks. I wonder what would happen if we introduced uneven random data, say 80% of cows correctly labeled and the other 20% mislabeled, along with negative data stating that cows are not forks. I wonder what that would do to the hidden layers.

Also, it makes a lot of sense that the accuracy curve would take longer to converge on a local minimum, because the input data have so little similarity to each other! A fork, a cow and a puppy are, after all, very different.

I will create the most powerful AI ever created.

Two things come to mind watching this video: "path of least resistance" and "positive and negative feedback". They explain why we need to train for a long time to be good at anything, and why being open to input from others makes us learn faster than when we reject it.

You are great!!! Salute you 👌👌👌

Why do we square the differences to calculate the cost sum?
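One way to see why squaring is a common choice: it makes every term nonnegative, it punishes large errors much more than small ones (doubling an error quadruples its cost), and its derivative 2(a − y) shrinks smoothly as the output approaches the target, which gives gradient descent a useful sense of "how far off". The sketch below contrasts it with absolute error, whose slope is the same size no matter how close you are. This is an illustrative comparison, not the only valid cost.

```python
def squared_error(a, y):
    return (a - y) ** 2

def squared_error_grad(a, y):
    # Derivative with respect to a: shrinks smoothly as a approaches y
    return 2.0 * (a - y)

def absolute_error_grad(a, y):
    # Derivative of |a - y|: always +1 or -1, undefined exactly at a == y
    # (this sketch just returns -1 there)
    return 1.0 if a > y else -1.0

# Target output is 0.0; compare a large, medium and small error
far = (squared_error_grad(0.9, 0.0), absolute_error_grad(0.9, 0.0))
near = (squared_error_grad(0.1, 0.0), absolute_error_grad(0.1, 0.0))
```

With squared error the "near" slope is much smaller than the "far" slope; with absolute error both slopes are 1.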

Is what you call "cost" the same thing that is sometimes referred to as "loss"?

Hi, at minute 15 of this lesson, all 784 input pixels are connected to each of the 16 neurons, yet each neuron produces a different image. How is this possible?

The image on which you do the calculations is always the same.

Do the 16 neurons in the second layer have different formulas?

This is driving me crazy. Everything is connected to everything.

I don't understand it. I'm sorry.

Thank you

ric
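A small sketch of how that works: every one of the 16 neurons sees the same 784 pixels and uses the same formula, sigmoid(w · x + b), but each neuron has its own row of 784 weights and its own bias. Different numbers in the same formula give different activations. The random weights below are placeholders standing in for trained ones.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_activations(weight_rows, biases, pixels):
    """Each neuron j computes sigmoid(w_j . x + b_j) from the SAME input pixels."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, pixels)) + b)
        for row, b in zip(weight_rows, biases)
    ]

random.seed(0)
n_inputs, n_hidden = 784, 16
pixels = [random.random() for _ in range(n_inputs)]  # stand-in for one 28x28 image
# Same formula, different numbers: 16 rows of 784 weights, plus 16 biases
weight_rows = [[random.gauss(0, 0.05) for _ in range(n_inputs)] for _ in range(n_hidden)]
biases = [random.gauss(0, 1) for _ in range(n_hidden)]

hidden = layer_activations(weight_rows, biases, pixels)
```

So "everything connected to everything" is just 16 separate weighted sums over one shared input; the 16 pictures in the video are those 16 weight rows drawn as 28×28 images.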

Some thoughts on the results:

1. 14:01 The weights for the first layer seem to be meaningless patterns when viewed individually, but combined, they do encode some kind of sophisticated pattern detection. That particular pattern detection isn't uniquely specified or constrained by this particular set of weights on the first layer; rather, there are infinitely many ways that the pattern detection scheme can be encoded in the weights of this single layer.

These infinitely many other solutions can be thought of as the set of matrices that are row-equivalent to the 16×784 matrix in which each row holds the weights of one neuron on this layer. You can rewrite each row as a linear combination of the current rows, while possibly still preserving the behavior of the whole NN by applying a related transformation to the weights of the next layer. In this way, you can rewrite the patterns of the first layer to try to find an arrangement that tells you something about the reasoning. Row reduction in particular might produce interesting results!
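A caveat worth testing: because a sigmoid sits between the layers, an arbitrary linear combination of first-layer rows generally does not carry through to the next layer exactly. One transformation that is guaranteed to preserve the network's behavior is reordering (permuting) the hidden neurons, as long as the next layer's weight columns are reordered the same way. The tiny network below uses hypothetical weights and sizes just to show that symmetry, which is also one simple reason many different weight settings can be equally good.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(weight_rows, biases, inputs):
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weight_rows, biases)]

def network(w1, b1, w2, b2, x):
    return dense(w2, b2, dense(w1, b1, x))

def permute_hidden(w1, b1, w2, order):
    """Reorder hidden neurons: rows of w1 and b1, and the matching columns of w2."""
    new_w1 = [w1[i] for i in order]
    new_b1 = [b1[i] for i in order]
    new_w2 = [[row[i] for i in order] for row in w2]
    return new_w1, new_b1, new_w2

# Tiny hypothetical network: 3 inputs -> 3 hidden -> 2 outputs
w1 = [[0.2, -0.5, 1.0], [0.7, 0.1, -0.3], [-1.2, 0.4, 0.9]]
b1 = [0.1, -0.2, 0.05]
w2 = [[0.5, -1.0, 0.3], [0.8, 0.2, -0.6]]
b2 = [0.0, 0.1]
x = [0.9, 0.1, 0.4]

order = [2, 0, 1]
pw1, pb1, pw2 = permute_hidden(w1, b1, w2, order)
original = network(w1, b1, w2, b2, x)
permuted = network(pw1, pb1, pw2, b2, x)  # same outputs, up to float rounding
```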

2. 15:10 I think I understand why your NN produces a confident result: it was never trained to recognize what a number doesn't look like. All of the training data, from what I can tell, consists of numbers paired with 100% confident outputs. You'd want to train it on illegible handwriting, noise, or whatever you expect to feed it later, with a result vector that can be interpreted as 0% confidence: small, equal output values, all outputs at zero, or maybe an additional neuron that the NN uses to report "no number".
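A minimal sketch of two of the labeling schemes suggested there, assuming one-hot targets like those in the video: an all-zero target for images that are not digits, or a hypothetical eleventh output neuron reserved for "no digit". The names NOT_A_DIGIT, target_all_zero, target_extra_neuron and prediction are illustrative and do not come from Nielsen's code.

```python
NUM_DIGITS = 10
NOT_A_DIGIT = NUM_DIGITS  # hypothetical index of an extra 11th output neuron

def target_all_zero(label):
    """10 outputs; 'no digit' examples (label None) get an all-zero target."""
    return [1.0 if i == label else 0.0 for i in range(NUM_DIGITS)]

def target_extra_neuron(label):
    """11 outputs; 'no digit' examples light up the extra neuron instead."""
    index = NOT_A_DIGIT if label is None else label
    return [1.0 if i == index else 0.0 for i in range(NUM_DIGITS + 1)]

def prediction(outputs):
    """Pick the most active output neuron, reporting the extra one as 'no digit'."""
    best = max(range(len(outputs)), key=lambda i: outputs[i])
    return "no digit" if best == NOT_A_DIGIT else best
```

Either way, the network only learns to say "no digit" if the training set actually contains noise or scribbles labeled that way.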

Could you make some videos on the other optimization functions like RMSprop and Adam?
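For reference, a sketch of the commonly published update rules for RMSprop and Adam, applied to a toy one-parameter cost C(w) = (w − 3)^2 instead of a real network. Both rescale each step by a running average of squared gradients; Adam also keeps a running average of the gradient itself and corrects both averages for starting at zero. The learning rate of 0.05 and the step count are assumptions picked so the toy example settles quickly (Adam's usual suggested default learning rate is 0.001).

```python
import math

def grad(w):
    # Gradient of the toy cost C(w) = (w - 3)^2
    return 2.0 * (w - 3.0)

def rmsprop(w, steps=1000, lr=0.05, decay=0.9, eps=1e-8):
    avg_sq = 0.0
    for _ in range(steps):
        g = grad(w)
        avg_sq = decay * avg_sq + (1 - decay) * g * g  # running mean of g^2
        w -= lr * g / (math.sqrt(avg_sq) + eps)        # step scaled by recent gradient size
    return w

def adam(w, steps=1000, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g        # running mean of g (momentum-like)
        v = beta2 * v + (1 - beta2) * g * g    # running mean of g^2, as in RMSprop
        m_hat = m / (1 - beta1 ** t)           # bias correction for zero initialization
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

w_rms = rmsprop(0.0)
w_adam = adam(0.0)
```

With a fixed learning rate both end up hovering close to the minimum at w = 3 rather than landing on it exactly.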

13:56 Wow. So the neural network guessed "6" and was wrong. I'll guess … "4"?… or "H"?

Me, before this: I love Python! It seems to be used in neural networks.

Me(now): OK I quit.

PS: I'm in class-8 LOL.

After watching multiple courses on paid educational sites and reading many forums and articles on the topic, I found this series to be absolutely GOLDEN; it covers all the gaps in my intuitive understanding! Thank you! I give it 4 stars, and would give it 5 if you animated the 13000-dimensional graph as noted in a comment below 😛

Thank you so much for the video!

It is really informative and helpful.

Do you also have slides for the video?

9:56 Someone, please help me with this. 3Blue1Brown mentioned that a random set of weights and biases is assigned to the model initially, and the cost function value for that specific set of weights and biases is calculated. From that point, the gradient descent algorithm is used to minimize the cost function on a graph that plots the cost for all possible values of the 13,002 weights and biases. How are the cost values for all possible combinations of the 13,002 weights and biases calculated, so that the graph can be plotted and the cost minimized? Am I getting it wrong?
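The key point is that gradient descent never computes the whole cost surface. At each step it only needs the slope (gradient) of the cost at the current point, then takes a small step downhill and repeats. The sketch below uses a hypothetical two-parameter bowl in place of the real 13,002-parameter cost, and estimates the slope with finite differences only because that is easy to read; backpropagation, the topic of part 3, is the efficient way to get this gradient for a real network.

```python
import random

def cost(params):
    # Hypothetical stand-in for the network's cost: a bowl with its minimum at (3, -1)
    w, b = params
    return (w - 3.0) ** 2 + (b + 1.0) ** 2

def numerical_gradient(f, params, h=1e-6):
    """Estimate each partial derivative by nudging one parameter at a time."""
    grad = []
    for i in range(len(params)):
        bumped = list(params)
        bumped[i] += h
        grad.append((f(bumped) - f(params)) / h)
    return grad

random.seed(1)
params = [random.uniform(-5, 5), random.uniform(-5, 5)]  # random starting point
learning_rate = 0.1
for _ in range(200):
    # Only the slope at the current point is evaluated; the rest of the surface never is
    g = numerical_gradient(cost, params)
    params = [p - learning_rate * gi for p, gi in zip(params, g)]
```

Each step costs a handful of cost evaluations near the current point, which is why the method scales to thousands of parameters where plotting the full graph would be impossible.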

I know nobody will see this, but I just wanted to rant for a bit…. I have been coding for about 6 months, and I tried to make my own neural network in JavaScript. I know, I now realize it is way better to code it in Python. Instead of keeping values small, I tried to increase the values of the weights and scale all the other values up accordingly, except for the initial image, which I kept as an 8 by 8 pixel image: by hand, I entered into an array a 0 if the pixel was white and a 1 if it was black. I feel like a failure now that I have learned some Python and figured out that I could've done this way better and way easier with some nested arrays. Sorry for the downer and the rant, I just felt like I needed to say something.
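For anyone attempting the same thing, a sketch of the nested-array approach mentioned there: store the drawing as a list of 8 rows of 8 pixels (1 for black, 0 for white), then flatten it row by row into the single 64-number input vector the first layer reads, the same way the video's 28×28 images become 784 inputs. The drawing itself is a made-up example.

```python
def flatten(grid):
    """Turn a list of rows into the single input vector the first layer expects."""
    return [pixel for row in grid for pixel in row]

# Hypothetical 8x8 drawing: 1 = black, 0 = white
grid = [[0] * 8 for _ in range(8)]
for r in range(1, 7):
    grid[r][4] = 1  # a vertical stroke, roughly a "1"

inputs = flatten(grid)
```

Pixel (row r, column c) ends up at position r * 8 + c, so the same weights always line up with the same spot in the image.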

What about the biases?
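In the video's setup, each neuron adds its bias after the weighted sum and before the sigmoid, so the bias sets how large the weighted sum must be before the neuron becomes meaningfully active (the video's example is a neuron that should only light up when the sum exceeds 10, which corresponds to a bias of −10). Biases are learned by gradient descent just like weights: the network's 13,002 parameters are 12,960 weights plus 42 biases (16 + 16 + 10). A minimal single-neuron sketch with made-up inputs:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def neuron(weights, bias, inputs):
    # The bias is added after the weighted sum, shifting where the sigmoid switches on
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

weights = [1.0, 1.0, 1.0]
inputs = [2.0, 3.0, 4.0]              # weighted sum = 9
no_bias = neuron(weights, 0.0, inputs)      # sigmoid(9): almost fully "on"
with_bias = neuron(weights, -10.0, inputs)  # sigmoid(-1): sum is below 10, mostly "off"
```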