While the sun is setting on the Kinect, and this blog, there are a few interesting projects we’re going to highlight first. Today’s project is one of those…

The smart home future is arriving rapidly: the personal home is getting connected. Lamps, fridges, toasters, stereos; everything these days is available with an internet connection that lets different devices be controlled and networked from a central hub, usually a smartphone app.

I have been working on connecting lamps, LED strips and a bunch of sensors in my room for years now. I can come into my room and the lights turn on automatically, with different settings depending on weather and time. And when I leave home, they automatically turn off for me. However, I have always had one issue with my whole setup: a good old light switch is much faster and more natural to use than pulling out my phone, opening an app and toggling a switch.

This is why I have been exploring more natural and intuitive ways of interacting with connected appliances.

Introducing IOT-KINECT

The first step was an application written in Processing that interfaces with a Kinect depth sensor and any IoT hub capable of receiving commands via HTTP requests or WebSockets (in my case that is the brilliant and open-source Home Assistant).
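To make the HTTP route concrete, here is a minimal sketch of what a "toggle this lamp" command to Home Assistant can look like. It is not taken from IOT-KINECT (which is written in Processing); it is a Python illustration that assumes Home Assistant's REST API with a long-lived access token. The helper names, the host name and the entity ID `light.desk_lamp` are hypothetical.

```python
import json
import urllib.request


def build_service_call(base_url, token, domain, service, entity_id):
    """Build (but do not send) a POST request that calls a Home Assistant service."""
    # Home Assistant's REST API exposes services at /api/services/<domain>/<service>.
    url = f"{base_url.rstrip('/')}/api/services/{domain}/{service}"
    body = json.dumps({"entity_id": entity_id}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",  # long-lived access token
            "Content-Type": "application/json",
        },
    )


def toggle_light(base_url, token, entity_id, timeout=5):
    """Send a light.toggle call and return the HTTP status code."""
    request = build_service_call(base_url, token, "light", "toggle", entity_id)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

A gesture handler would then call something like `toggle_light("http://homeassistant.local:8123", token, "light.desk_lamp")` once it decides that the user pointed at that lamp.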

…

Next up: Brainy Things …

The exhibition

…

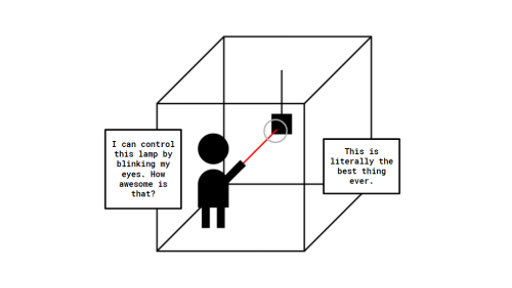

Once the two lamps were fully functional, I modified IOT-KINECT again to communicate with the Blynk Cloud, allowing it to toggle those very two lamps on and off. I then built a little exhibition room using black cloth and a few tables, neatly hid the Kinect’s cables, and voilà:

…

Fantastic.

This project was by far the most interesting of my IoT endeavours to this day. I learned a lot about brain waves, real space interaction and gesturing. However, it is far from perfect. Right now, because the Kinect only sees gestures relative to its own position, the user has to be in roughly the same spot every time they gesture at actuators. The larger the actuators are defined in IOT-KINECT, the greater the tolerance for that spot becomes; however, the likelihood of false triggers grows too. By adding more Kinects to the space and defining actuators as actual 3D objects in virtual space, the interaction could work regardless of the user’s physical location.
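One way to picture the "3D objects in virtual space" idea is a ray test: treat each actuator as a sphere in room coordinates, cast a ray from one arm joint through the hand, and pick the nearest sphere the ray passes through. The sketch below is only an illustration under those assumptions, not how IOT-KINECT works. The function name, the joint choice, the coordinates (metres in a shared room frame) and the radii are all hypothetical.

```python
import math


def pointed_actuator(origin, through, actuators):
    """Return the name of the nearest actuator sphere hit by the pointing ray, or None.

    origin, through: (x, y, z) points in room coordinates, e.g. elbow and hand.
    actuators: dict mapping a name to (center, radius).
    """
    direction = tuple(t - o for o, t in zip(origin, through))
    length = math.sqrt(sum(c * c for c in direction))
    if length == 0:
        return None  # both joints coincide, so there is no pointing direction
    direction = tuple(c / length for c in direction)

    best_name, best_distance = None, math.inf
    for name, (center, radius) in actuators.items():
        to_center = tuple(c - o for o, c in zip(origin, center))
        # Distance along the ray to the point closest to the sphere's center.
        along = sum(a * b for a, b in zip(to_center, direction))
        if along <= 0:
            continue  # the actuator is behind the user
        # Squared distance between that closest point and the sphere's center.
        miss_sq = sum(a * a for a in to_center) - along * along
        if miss_sq <= radius * radius and along < best_distance:
            best_name, best_distance = name, along
    return best_name
```

The radius plays the role of the tolerance described above: a larger sphere is easier to hit but also easier to trigger by accident. Because the test runs in room coordinates, the same lamp is selected whether the user points at it from the doorway or from the desk.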

In addition, by applying machine learning to higher-resolution brain interface data and training individually for a given user, the number of possible hands-free commands could be increased substantially. So-called “mental commands” are patterns of brain activity that an algorithm has been trained to recognize as a given command. When you think of the color red, a similar pattern can be read every time, and a model trained to recognize that pattern along with the ones for other colors could, for example, allow you to point at an LED strip in your home and tint it with your mind alone.
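As a toy illustration of what "trained to recognize a pattern" can mean, the sketch below uses a nearest-centroid classifier: it averages the training examples for each command and assigns a new reading to the closest average. This is a deliberately simple stand-in chosen for illustration, not the method used in the project or by any particular headset. The function names are hypothetical, and the feature vectors are assumed to come from some earlier signal-processing step that is not shown.

```python
import math


def train_centroids(samples):
    """Average the feature vectors recorded for each command.

    samples: dict mapping a command label to a list of equal-length vectors.
    Returns a dict mapping each label to its mean vector (the centroid).
    """
    centroids = {}
    for label, vectors in samples.items():
        count = len(vectors)
        centroids[label] = [sum(column) / count for column in zip(*vectors)]
    return centroids


def classify(centroids, features):
    """Return the label whose centroid is closest (Euclidean) to the new reading."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))
```

Training per user, as suggested above, would simply mean building a separate set of centroids from each person's own recordings.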

Project Information URL: http://creativecoding2.tumblr.com/post/163657812805/bci-iot-brain-computer-interface-x-internet-of

Project Download URL: [URL]

Project Source URL: [URL]

Greg Duncan

https://channel9.msdn.com/coding4fun/kinect/Kinecting-to-IoT
