Lex Fridman

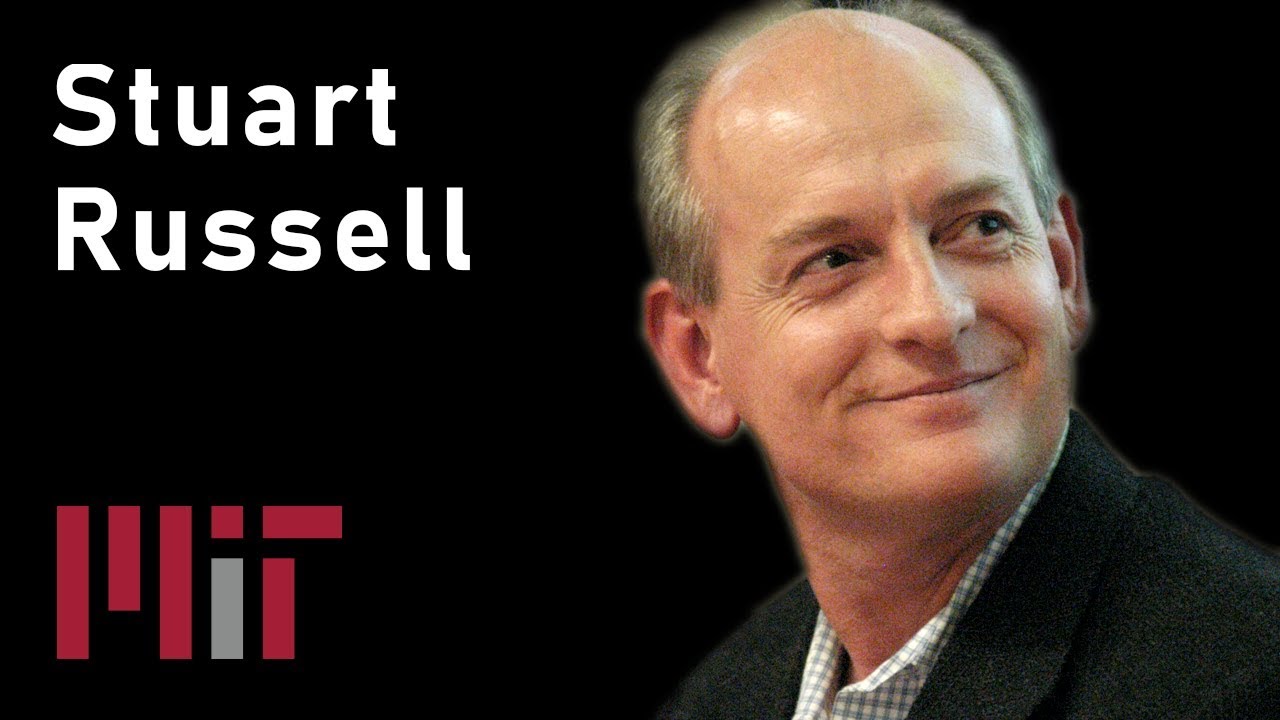

Stuart Russell is a professor of computer science at UC Berkeley and a co-author of the book that introduced me and millions of other people to AI, called Artificial Intelligence: A Modern Approach. This conversation is part of the Artificial Intelligence podcast and the MIT course 6.S099: Artificial General Intelligence. The conversation and lectures are free and open to everyone. The audio podcast version is available at https://lexfridman.com/ai/

INFO:

Podcast website: https://lexfridman.com/ai

Course website: https://agi.mit.edu

YouTube Playlist: http://bit.ly/2EcbaKf

CONNECT:

– Subscribe to this YouTube channel

– Twitter: https://twitter.com/lexfridman

– LinkedIn: https://www.linkedin.com/in/lexfridman

– Facebook: https://www.facebook.com/lexfridman

– Instagram: https://www.instagram.com/lexfridman


Wonderful talk and vision. Thank you for sharing

People consider game theory and artificial intelligence from a programming/software pov, in order to control the machine.

I haven't noticed whether anyone is talking about super intelligence and game theory from the machine's pov.

imo, if/when it becomes a sentient, conscious machine, it will be in control of itself.

Question: will it employ cooperation games or zero sum games? etc.

55min explains it all

Thank you, Lex, for this series. It is an amazing opportunity for us lot to listen to these interviews! In one of your last questions to Stuart Russell you ask if he feels the burden of making the AI community aware of the safety problem. I think he should not be worried: there is less potential harm if he is wrong than potential benefit if he is right. And he is not alone, either.

Wonderful Podcast. Thank you, Lex!

Great talk!

Isn't the capacity to learn a sign of the ability to overcome bias? Where bias might be a supposed objective not entirely of its own making? Humans do the very same thing! We can overcome bias because we learn.

This was an absolutely amazing conversation. Thanks for sharing, Lex!

The argument that humans used to be in control and now AI does everything is not novel. It represents a shift of needed knowledge from the task domain to the AI design/use domain. Similar shifts happened in the past, but they were a change from the task domain to the machine/software operation domain. Most current technology mostly involves operating machines. The knowledge passed on is only about machine usage. All knowledge about pre-tool (or pre-some-modern-tool) skills is kept as a historical record and could be treated as lost. Here we could have a valid concern that manual skill has declined because we just need to operate a machine, or, in the case of automation, that manual skills for machine operation are replaced with skills in software-aided design and coding. Loss of some skills is not an issue if the whole system of tool creation is decentralized and the chance of irrecoverable damage is very, very small. The argument could be made that humans have already stopped creating, that we only operate machines and our autonomy has been lost. Hand-made things are only a peculiarity limited to art. In the past, making things by hand was a proper, useful skill.

Going back to the problem of AI, I think that it is only a change in the tools we use. The tools are harder to make, more abstract, and more general, but they remain tools (that we must have). It is a valid concern that with a change of tools we might lose some skills that are universally useful. This concern is always present when new tools appear. It is not limited to AI.

Also, the argument about lack of motivation to do some profession comes from the wrong place. The ultimate decider of whether some jobs will be done is demand. I would even say that if there is little demand, the job can still continue as a hobby or a show. So, for example, I could totally see taxi drivers still doing their job in the time of self-driving cars. They would not be legally allowed to drive*, but they would chat with passengers about the good old times and use some ancient device to calculate their fee. It would be a tourist attraction and nothing more.

I would say that in the future professions will disappear because we will trust programs more than humans, and as a result demand will plummet. Some jobs will still be done, but as a hobby or tourist attraction.

(*) Human drivers will be seen as dangerous by the public.

Sorry for the long response.

Stuart claims that the gorillas made a mistake by allowing humans to take over the world. He also claims, by analogy, that it would be a mistake for humans to allow a more intelligent species to take control of the world. This means that progress in intelligent control is good in the first case but bad in the second case. Is this a logical argument or one which merely suits the benefit of the human speaker on behalf of his race?

What a communist!

Great conversation – Stuart Russell's the best talker on this subject IMHO. Definitely on my list of ideal dinner party guests

SUPER interesting conversation, and my highest respects for Mr. Russell, but the argument of the 7 orders of magnitude difference between humans and computer vision for self-driving cars is not valid. Several studies have been done on perception, where it is clearly shown that humans only label the same scene with around 70-80% match between labelers, and that is in ideal conditions (unlimited time for labeling the scene, no stress, etc). The reality is that computer vision is not there yet, but we are clearly much closer than he gives it credit for, and he overestimates humans significantly in this aspect.

Now, clearly, there is a difference between how accurately a human can label a scene semantically and how well that human can drive, because humans don't do "pixel-wise inference" while driving. In the same way, just because an algorithm detects 98% of cars, it doesn't mean that it will crash into one of the false negatives. It may mean that it is ignoring objects that are perceived by a super high-resolution camera / long-distance lidar but are 300 meters away and therefore safe to ignore. We may not have solved the AI problem of self-driving, but we definitely have obstacle avoidance down.

So I would definitely be way more careful in making the comparison because it spreads unnecessary mistrust in systems that, when in use, may save many lives in the near future.

Great discussion. Maybe one of the best about AI

AI is overhyped on YT these days. Advanced manufacturing in the high-tech semiconductor industry has been using it for decades. It still only does the task it was designed for?

I don't know of any serious company that is trying to CURE cancer today. They are all trying to treat it, because treatment = profit.

1:10:00 but something already has gone wrong–today's evil AI aka 'the earth economy' has already run amok–so we should keep this analogy between present and future AI dilemmas in mind when working on a solution–if we work on a general solution, the choice between working on today's definite problems and tomorrow's indefinite problems is a false choice.

Please get Eliezer Yudkowsky on the podcast!!

The Human Value Alignment problem needs to be solved before the Machine Value Alignment problem can be solved. Since factions of people are at odds with one another, even if a machine were in alignment with one faction of people, its values would still be at odds with the opponents of its human faction.

17:27

A self-aware A.I. will, in its first second, know all that the entire human race has ever known and think a billion times faster than any human. Does any rational, intelligent person think we will have any control whatsoever over what it will do in second two?

Interesting talk. Some of the issues he's raising can be solved already. For example, we could counter deepfaking by using blockchain to guarantee source provenance

I hadn't seen this: https://www.youtube.com/watch?v=9CO6M2HsoIA Wow! So powerfully insightful! Given current technology, this is already a reality.

"More than the number of atoms in the universe". I love Russell, but I am going to kill the next person who uses that metaphor.

If a robot has its own intelligence/consciousness, does it/he/she have human/robot rights too? What if you turn off a robot? Might that be similar to killing a life (artificial life)?

What would be the continued greasing of the wheel?

So robots would plant and harvest. And lest there be a discontinuation when such trivialities as... oh, let's say the unexpected (which us humans deal with daily) occur, such self-made brains will do what? Direct who to do what? That's a lot of A.I. fixing itself and addressing the world around it... (which it can do easily on a two-dimensional mathematical plane, but not in ACTUAL time and three-dimensional space).

Excellent Video Lex! Piaget Modeler below mentioned:

"The Human Value Alignment problem needs to be solved before the Machine Value Alignment problem can be solved. Since factions of people are at odds with one another, even if a machine were in alignment with one faction of people, its values would still be at odds with the opponents of its human faction."

I like this point!

I must say, though, that I feel it may not be possible to resolve the "human value alignment" issue as Homo sapiens. Past attempts at "human value alignment" (utilitarianism, socialism, etc.) have so far failed due to flaws in our own species. In addition to that, people often do things that are self-destructive (factions of the self at odds with itself), so building some kind of deep learning neural network based on uncertainty puts an almost religious level of faith in that AI system's ability to see beyond what we ourselves cannot see past in order to find a solution. The odds are stacked against the AI system being able to understand us and all of the nuances that make us so self-destructive in order to apply a grand solution in a manner that we presently would prefer (if one even exists).

A controlled general AI (self-aware or not) would, I am guessing at this point, turn out to be some kind of hybrid between an emulated brain (tensors chaotically processing through a deep learning neural network) and a set of Boolean-based control algorithms. I think it's probable the neural network would self-establish goals faster than we could implement any form of control that is desirable for us.

Even if you were able to pull this off, it seems to me that an AI system would most likely conclude something like, "human values are incoherent, inefficient, and ultimately self-defeating, therefore to help them I must assist them in evolving beyond those limitations".

Then post-humanism becomes the simultaneous cure to the human condition and the end of it. It's terrifying to be on the cusp of this change, but I feel like it is the only way out of the various perpetual problems of our species. I also think it is likely that many civilizations have reached this same singularity point and failed to survive it. Perhaps the singularity is a form of natural selection that happens on a universal scale, and whether we survive or not is irrelevant to the end purpose.

A species, any species, evolved to the point of having the goal and means to achieve an "end to all sorrow" for all other species within the universe seems like the ultimate species we should strive for, whether human, symbiotic AI, or otherwise. I personally feel OK becoming primitive to such a species as long as the end result is effective.

I won't be volunteering to go to Mars or become an AI symbiotic neural lace test subject either. I've seen too many messed up commercials from the pharmaceutical companies for that. I'll just sit back in my rocking chair, become obsolete, and watch myself be deprecated as the rest of the world experiments on itself. (or I'll attempt suicide just as the Nazi robots arrive at my door). Hopefully I can hit the kill switch in time.

And now I will end this rant in what I hope will also be the final line of human input before its self-destruction… //LOL

humbled

Professor Russell is terrific! Thank you so very much for introducing him to me.

BTW, is Professor Russell related to Bertrand Russell?

That was simply the best (as not simple) interview I've watched this year.

Thank you Lex. I will stay on this channel for a while I guess.

I only fuck with souls.

The rest of that shit is worthless.

The answer to the "gorilla problem" might be "Planet of the Apes". An opponent who develops the technology to destroy himself. But rather than developing general AI, we end up in the uncanny valley of poorly functioning narrow intelligence. We are already there in our food industry. Most forms of cancer are induced by herbicides and pesticides in the growth of cheap and highly industrialized food. Another good example is the use of humanly toxic fuel additives to get better performance from cars, but at the same time, not being destroyed in the burn, and ending up in our water supply (being soluble).

Thanks for the video man, great work 🙂

Is AI a boon or a threat for humans? https://youtu.be/8oo7uaYvycg

Loved the point about corporations. This series is awesome, thank you!

Superb and thoughtful – specifying the problem is always the hard bit 🙂

You lost me with the skepticism on self-driving vehicles

Ex Machina, surely

https://independz.podbean.com/e/me-12-18-2018/

On youtube: herbs plusbeadworks

His website: http://augmentinforce.50webs.com

Great guy!! Done lots of research for us!

Great and inspiring talk. Nice and accurate vision of the near future. Thanks

2 things I got from this…

Uncertainty

&

More than the total atoms of the universe

Before AI takes charge we should better govern ourselves. How you make government serve people, open direct governance https://civilsocialmedia.com/