Steve Brunton

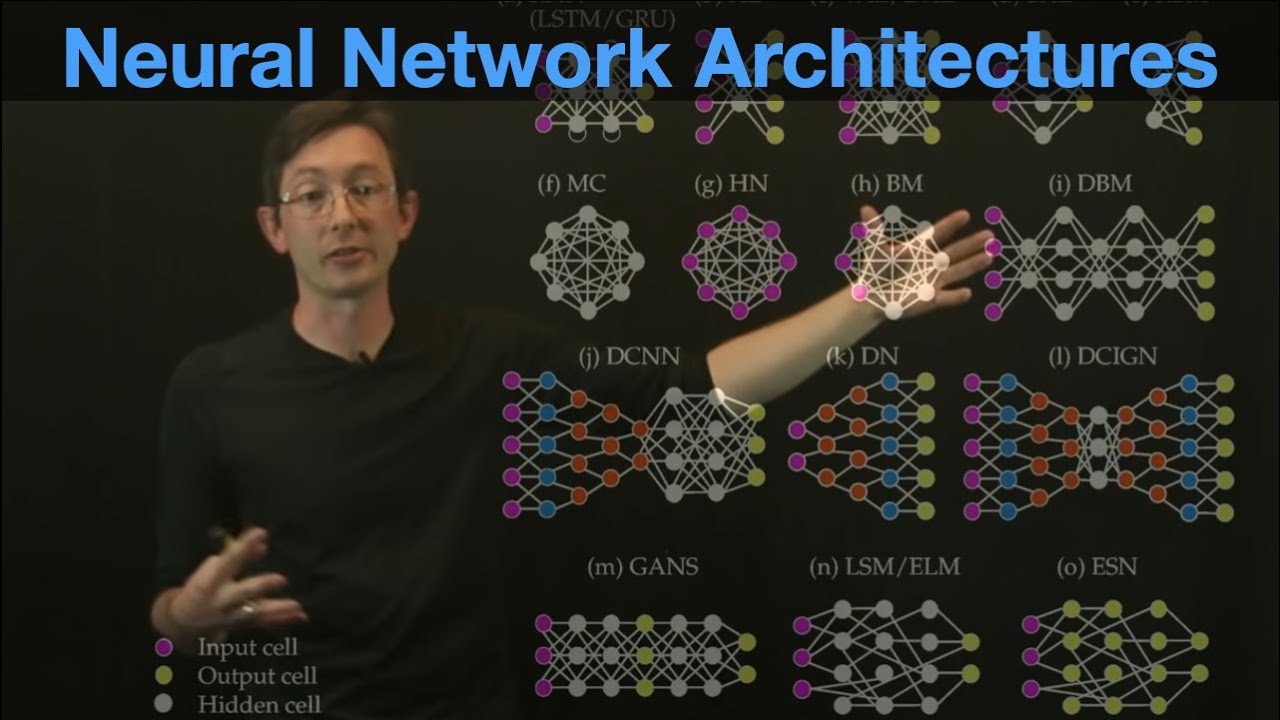

This lecture describes the wide variety of neural network architectures available to solve various problems.

Book website: http://databookuw.com/

Steve Brunton’s website: eigensteve.com

This video is part of a playlist “Intro to Data Science”:

https://www.youtube.com/playlist?list=PLMrJAkhIeNNQV7wi9r7Kut8liLFMWQOXn


good… more…..

i like this video, gg guys ; )

These were the most productive 9 minutes. Great explanation of the architectures.

Ali G:

Yo, check it, if you is small…

give lectures on the activation structures pls

Didn't know about that encoder thingy. Got me thinking about stuff. Is the right side mirrored so it does the opposite of the left side or are they trained separately?

sarah connor is coming for you

Still no neural network in the heads. But yes, there are tons of new names, which you of course want to know so you can show that you know what a neural network is.

This is the best short intro to this topic I've seen. Thanks!

How can I get more Neurons into my brain?

Recommended gang, where you at?

Thank you for your video!

Seeing your example for principal values decomposition made neural networks much clearer to me than anything else I had seen till now.

It allowed me to connect this to SVD-based linear modeling I used almost 10 years ago to create simplified models of visual features seen in fluid dynamics.

I did not expect how much easier this suddenly seemed when it connected to what I already knew.

forget neural networks, this guy figured out that it's better if you stand behind what you're presenting instead of in front of it. mind blown

Best. I love your lecture. It explains problem in a simple way. Thank you so much.

From Youtube's recommendation algorithm 😂 But Cool!

Hey I just wanted to say thank you for making this video. I found it really helpful!

I particularly enjoyed your presentation format, and the digestible length. About to watch a whole bunch more of your videos! 🙂

I'd be interested in a vid on information bottleneck theory.

Thank you…

Is it artificial though? What makes ours in our brains non-artificial but ones we create are artificial? Yah know?? Really think about it…

Autoencoders sound great for data compression and storage (presumably that's what you were getting at), but aren't neural networks interpretive?… That is to say, probabilistic? Sounds like data corruption is not only easy, not only guaranteed as an artifact of the system, but not even consistent or repeatable as the network grows and learns.

Very impressive presentation, thanks a lot!

Youtube just reminded me that I'm retarded

Why are output and memory colored the same?

wow! How do you visualize this information? Do you have Iron Man technology?

0:39 – "And it does some mathematical operation on you, to give you some output. Why?"

Thanks for sharing Steve

Please upload a tutorial on the making of this video. I would like to replicate your format for educational purposes.

I clicked on this link because I thought he was going to talk about everything in the overview.

Thanks for this explanation

This was massively helpful as an intro! When my question is just "yes but how does this ACTUALLY work", you either get pointlessly high level metaphors about it being like your brain, or jumping straight into gradient descent and all the math behind training. A+ video, thanks.

Nice pun 1:41

Don't wear black with a black background without a proper back light

I think nowadays "people" use ReLU much more often

I found an article about fooling a trained network into giving the wrong output. In real life, a human can also be fooled into believing the wrong concept.

Good overall neural net explanation!

I have been looking for this content a really long time. Thanks so much.

Recorded with a potato.