Chris Hawkes

► SPONSOR ◄

Check now if your .TECH is available! Link – https://go.tech/chrishawkes

Use code chris.tech at checkout for a special 80% off 1- and 5-year .TECH domains!

Linode Web Hosting ($20.00 CREDIT)

http://bit.ly/2HsnivM

🎓 If you’re learning web development, check out my latest courses on my website @ https://codehawke.com/ 🎓

— Why OpenAI GPT-3 Is Mostly Hype —

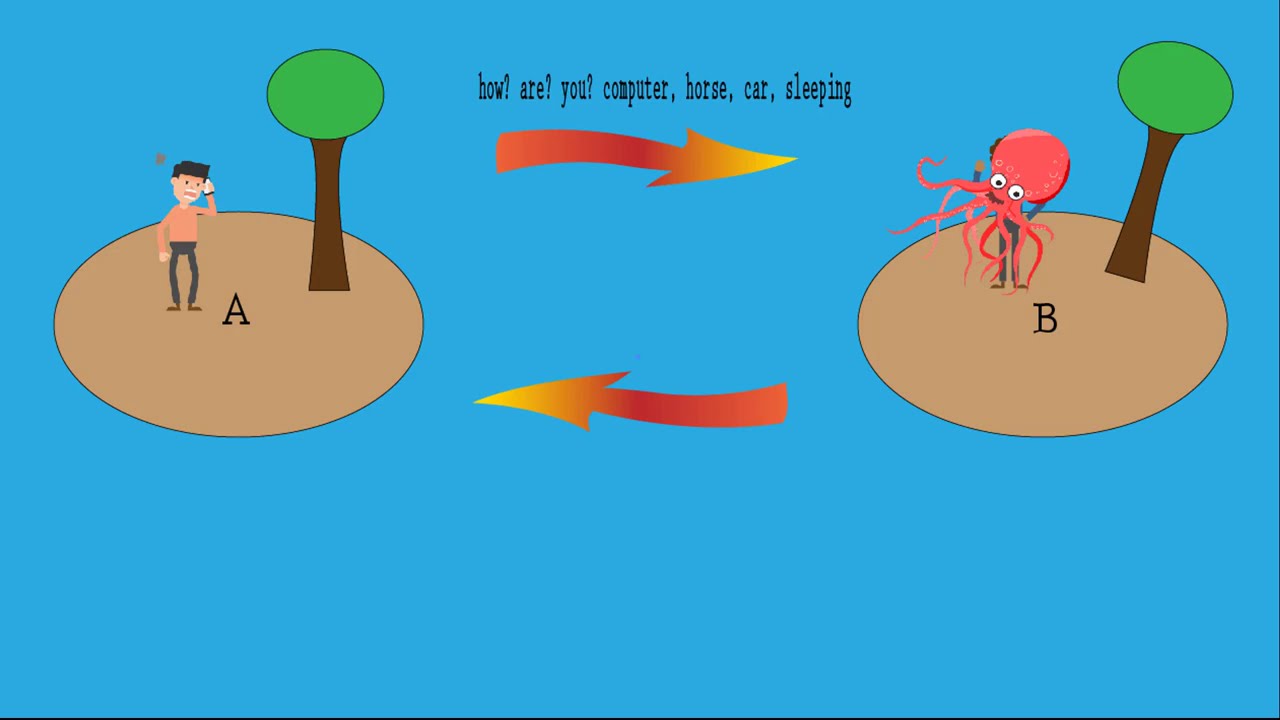

In this video I’m giving my thoughts on OpenAI’s new GPT-3 Natural Language Processing (NLP) API. Some of what it does is impressive, but I think we’re far from a product like this being able to take our jobs as programmers.

Article Cited:

https://openreview.net/pdf?id=GKTvAcb12b

Twitter – http://bit.ly/ChrisHawkesTwitter

LinkedIn – http://bit.ly/ChrisHawkesLinkedIn

GitHub – http://bit.ly/ChrisHawkesGitHub