Intelligent Robot Motion Lab

Alec Farid PhD Defense: Jan 25, 2023

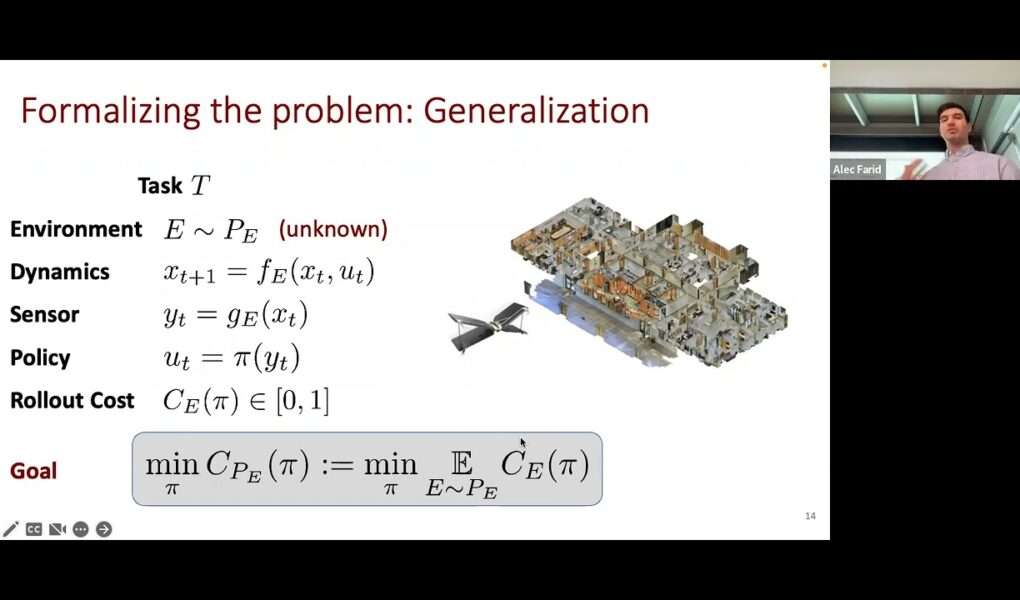

Provably Safe Learning-Based Robot Control via Anomaly Detection and Generalization Theory

How can we ensure the safety of a control policy for a robot that operates using high-dimensional sensor observations such as vision (e.g., an autonomous drone or a home robot)? Machine learning techniques are an appealing option because of their ability to deal with rich sensory inputs. However, policies that have learning-based components as part of the perception and control pipeline typically struggle to generalize to novel environments. Consider a home robot that is trained to find, grasp, and move objects across thousands of different environments, objects, and goal locations. It is inevitable that such a complex robot will encounter new settings for which it is unprepared. State-of-the-art approaches for synthesizing policies for such robotic systems (e.g., based on deep reinforcement learning or imitation learning) generally do not provide assurances of safety, and can lead to policies that fail catastrophically in new environments.

In this talk, we will present techniques we have developed to analyze the safety of robotic systems when they are deployed in new and potentially unsafe settings. We use and develop tools from generalization theory to obtain statistical guarantees for learning in robotics, and we make progress in two key research directions. (i) We provide methods that are guaranteed to detect when a robotic system is unprepared for the setting it is operating in and could therefore fail. With this knowledge, an emergency maneuver or backup safety policy can be deployed to keep the robot safe. (ii) We also develop performance bounds for settings that are out-of-distribution with respect to the training dataset. In both cases, we apply the techniques to challenging problems, including vision-based drone navigation and autonomous vehicle planning, and demonstrate the ability to provide strong safety guarantees for robotic systems.
