sentdex

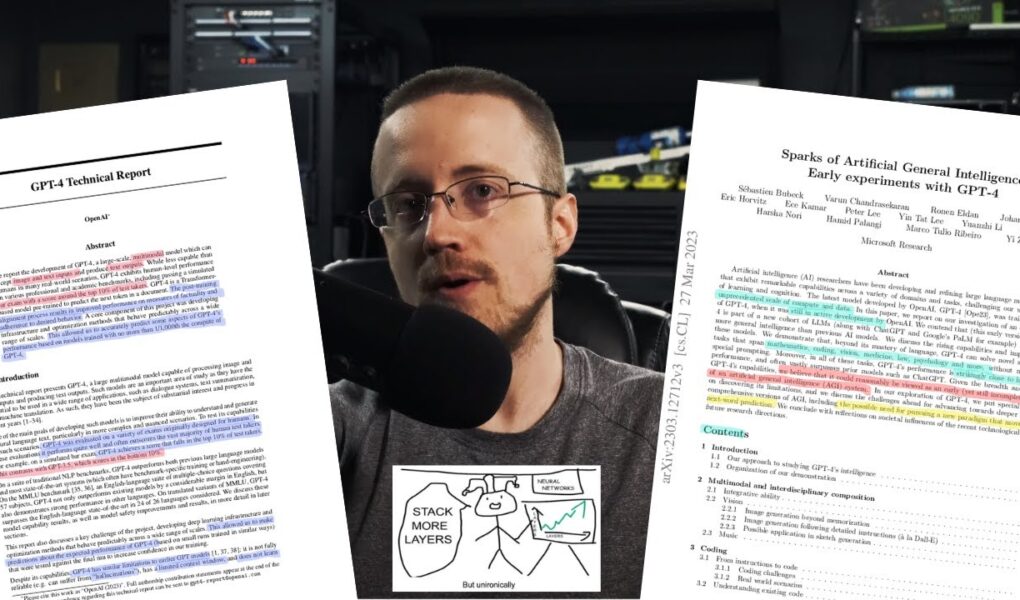

An in-depth look at the current state of the art in Generative Pre-trained Transformer (GPT) language models, with a specific focus on the advancements and examples presented by OpenAI in their GPT-4 Technical Report (https://arxiv.org/abs/2303.08774) and in the Microsoft “Sparks of AGI” paper (https://arxiv.org/abs/2303.12712).

Neural Networks from Scratch book: https://nnfs.io

Channel membership: https://www.youtube.com/channel/UCfzlCWGWYyIQ0aLC5w48gBQ/join

Discord: https://discord.gg/sentdex

Reddit: https://www.reddit.com/r/sentdex/

Support the content: https://pythonprogramming.net/support-donate/

Twitter: https://twitter.com/sentdex

Instagram: https://instagram.com/sentdex

Facebook: https://www.facebook.com/pythonprogramming.net/

Twitch: https://www.twitch.tv/sentdex

Contents:

00:00 – Introduction

01:31 – Multi-Modal/imagery input

05:44 – Predictable scaling

08:15 – Performance on exams

15:07 – Rule-Based Reward Models (RBRMs)

17:53 – Spatial Awareness of non-vision GPT-4

20:38 – Non-multimodal vision ability

21:27 – Programming

25:07 – Theory of Mind

29:34 – Music and Math

30:44 – Challenges w/ Planning

33:25 – Hallucinations

35:04 – Risks

38:01 – Biases

44:55 – Privacy

48:23 – Generative Models used in Training/Evals

51:36 – Acceleration

57:07 – AGI