Allen Institute for AI

Vered Shwartz

Title: “Acquiring Lexical Semantic Knowledge”

Abstract:

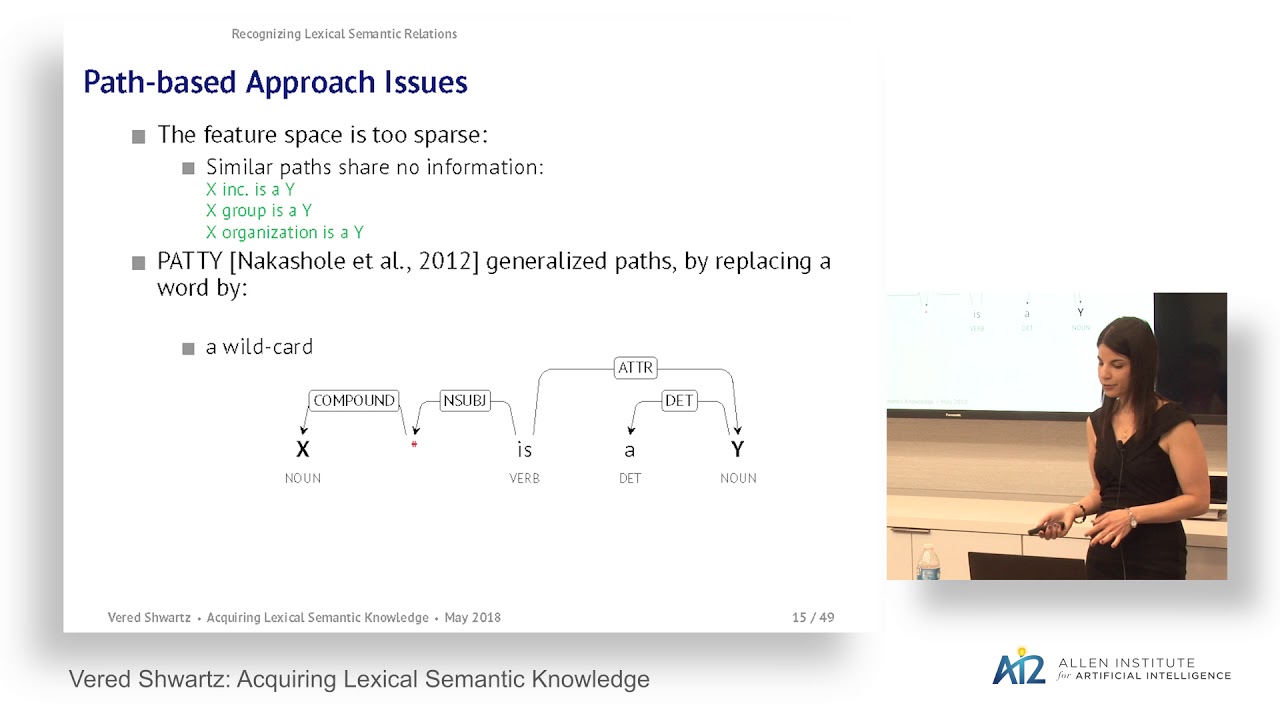

Recognizing lexical inferences is one of the building blocks of natural language understanding. Lexical inference corresponds to a semantic relation that holds between two lexical items (words and multi-word expressions) when the meaning of one can be inferred from the other. In reading comprehension, for example, answering the question “which phones have long-lasting batteries?” given the text “Galaxy has a long-lasting battery” requires knowing that Galaxy is a phone model. In text summarization, lexical inference can help identify redundancy when two candidate sentences for the summary differ only in terms that hold a lexical inference relation (e.g. “the battery is long-lasting” and “the battery is enduring”). In this talk, I will present our work on the automatic acquisition of lexical semantic relations from free text, focusing on two methods. The first is an integrated path-based and distributional method for recognizing lexical semantic relations (e.g. cat is a type of animal, tail is a part of cat). The second focuses on the special case of interpreting the implicit semantic relation that holds between the constituent words of a noun compound (e.g. olive oil is made of olives, while baby oil is for babies).
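To make the idea of combining path-based and distributional evidence concrete, here is a minimal toy sketch in Python. It is not the method presented in the talk: the word vectors, the corpus paths (simplified to surface patterns such as "X is a Y"), and every name below are hypothetical, and a nearest-centroid classifier stands in for a trained model. Each word pair (x, y) is represented by concatenating the distributional vector of x, a normalized bag of the paths that connect x and y in the corpus, and the distributional vector of y.

```python
import math
from collections import Counter

# Hypothetical toy "distributional" vectors, hand-picked for illustration
# (a real system would use pretrained word embeddings).
EMBEDDINGS = {
    "cat":    [0.9, 0.1, 0.0],
    "dog":    [0.8, 0.2, 0.0],
    "animal": [0.7, 0.3, 0.1],
    "tail":   [0.1, 0.9, 0.0],
    "paw":    [0.2, 0.8, 0.1],
}

# Hypothetical corpus evidence: patterns observed between x and y,
# simplified here to surface templates instead of parsed paths.
PATHS = {
    ("cat", "animal"): ["X is a Y", "X and other Y"],
    ("dog", "animal"): ["X is a Y"],
    ("tail", "cat"):   ["X of the Y", "Y has a X"],
    ("paw", "dog"):    ["Y has a X"],
}
PATH_VOCAB = ["X is a Y", "X and other Y", "X of the Y", "Y has a X"]


def path_features(x, y):
    """Normalized bag-of-paths vector for (x, y); all zeros if no path was seen."""
    counts = Counter(PATHS.get((x, y), []))
    total = sum(counts.values())
    if total == 0:
        return [0.0] * len(PATH_VOCAB)
    return [counts[p] / total for p in PATH_VOCAB]


def pair_vector(x, y):
    """Integrated representation: [embedding(x); path features; embedding(y)]."""
    return EMBEDDINGS[x] + path_features(x, y) + EMBEDDINGS[y]


def centroid(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def train(examples):
    """Build one centroid per relation label from labeled (x, y) pairs."""
    by_label = {}
    for (x, y), label in examples:
        by_label.setdefault(label, []).append(pair_vector(x, y))
    return {label: centroid(vs) for label, vs in by_label.items()}


def predict(model, x, y):
    """Return the label whose centroid is closest (Euclidean) to the pair vector."""
    v = pair_vector(x, y)

    def distance(c):
        return math.sqrt(sum((a - b) ** 2 for a, b in zip(v, c)))

    return min(model, key=lambda label: distance(model[label]))


TRAIN = [(("cat", "animal"), "hypernym"), (("tail", "cat"), "meronym")]
model = train(TRAIN)
print(predict(model, "dog", "animal"))  # hypernym
print(predict(model, "paw", "cat"))     # meronym (no path seen; embeddings decide)
```

The second prediction illustrates why combining the two signals can help: "paw" and "cat" are never connected by a path in the toy data, so the path features are all zero and the distributional part alone decides the label.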

Recommended! It touches on some pitfalls of natural language processing.

Very nice work, but I wonder how much of what is described here Stanford CoreNLP already accomplishes. I'm researching how one could cull deeper, unseen information about words and how the compositionality principle applies all the way past sememes, which mark the tail end of foundational research in this area of infinitesimal lexical semantics, with Trier at MIT positing sememes as the second most basic recognized level of semantic meaning. I'm positing that there is a deeper level still, also beholden to the compositionality principle of semantics. I just have to keep hacking away at the hotspots of subtlety: showing the finer shades of meaning between two or more words would reveal a further theoretical 'particle' at play, to borrow an apt analogy from particle physics. I'm arguing that this phenomenon is akin to quarks; that is the kind of granularity I'm trying to find evidence of. No one has ever observed a quark in isolation, yet quarks are among the fundamental building blocks of matter. It's hyper-fine 'diamond cutting' to help nudge lexical semantics along its research path. It couldn't hurt, right?