Eric Normand

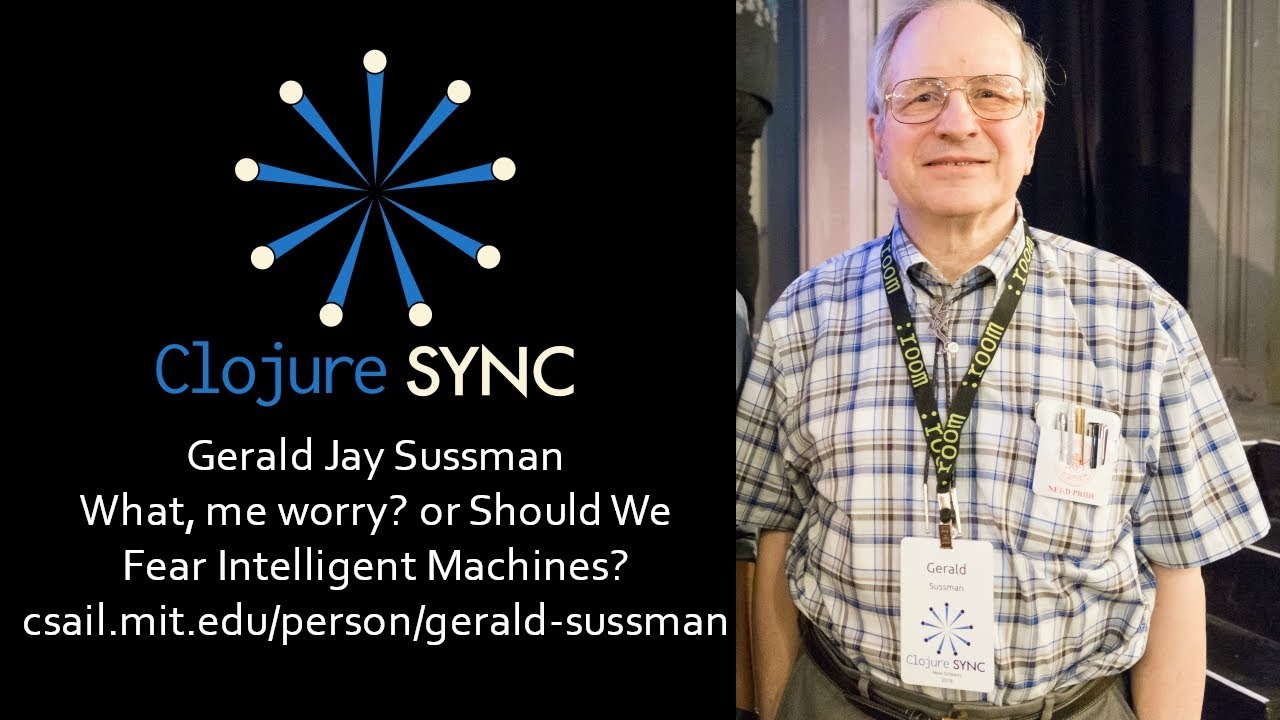

Recorded at #ClojureSYNC 2018, New Orleans.

Dr. Sussman spoke about creating AI systems that can explain their own behavior.

ABSTRACT

Recently there has been a new round of concern about the possibility that Artificial Intelligence (AI) could get out of control and become an existential threat to humanity. I actually know something about AI: I think these fears are a bit overblown.

But with the recent explosive application of AI technology we are faced with a problem: a technology so powerful and pervasive can have both beneficial and harmful consequences. How can we ensure that applications of this technology are constrained to provide benefits without excessive risk of harm? Although this is primarily a social, political, and economic issue, there are also significant technical challenges that we should address.

I will try to elucidate this rather murky area. I will argue that autonomous intelligent agents must be able to explain their decisions and actions with stories that can be understood by other intelligent agents, including humans. They must be capable of being held accountable for those activities in adversarial proceedings. They must be corrigible. The ability to explain and modify behavior may be supported by specific technological innovations that I will illustrate in simplified cases. For us to have confidence in these measures, the software base for such agents must be open and free to be examined by all and modified, if necessary, to enhance good behaviors and to ameliorate harmful behaviors.
