AI LA

On October 25th, 2018, the AI LA Community hosted its inaugural symposium.

As Artificial Intelligence (AI) begins to percolate into our everyday lives, we must take a step back and consider how such technologies affect us.

How does AI embody our value system?

Whose interests are advanced by an AI system?

Do AI systems learn correlations that humans find intuitive? If not, can we contest the system?

We aim to explore these pressing normative questions and take a deep dive into AI + Society. Specifically, we will discuss the questions AI raises about bias, ethics, and privacy, and explore what a fair, accountable, and transparent AI system looks like.

---

Lightning Talk: Fairness, Accountability, and Transparency in AI for Allocating Housing to the Homeless

Automated, data-driven decision-making systems have recently enjoyed tremendous success, and these algorithms are increasingly used to assist in socially sensitive decisions. Yet these tools may produce discriminatory decisions, treating individuals unfairly or unequally based on their membership in a category or minority group.

Motivated by the problem of allocating housing to the homeless, and using detailed historical data, we show that standard ML techniques result in discriminatory policies. We then propose resource allocation algorithms that align more closely with the vision of policy-makers, ensuring fairness, accountability, and transparency while maximizing the efficiency of resource utilization.
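One common way to check an allocation policy for the kind of group-level disparity described above is to compare allocation rates across groups (a demographic-parity check). The minimal Python sketch below assumes decisions are recorded as hypothetical `(group, allocated)` pairs; the function names and toy data are illustrative, and the code only audits a policy. It is not the allocation algorithm proposed in the talk.

```python
from collections import defaultdict
from typing import Iterable


def allocation_rates(decisions: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Fraction of individuals in each group who were allocated housing."""
    totals: dict[str, int] = defaultdict(int)
    allocated: dict[str, int] = defaultdict(int)
    for group, got_housing in decisions:
        totals[group] += 1
        allocated[group] += int(got_housing)
    return {group: allocated[group] / totals[group] for group in totals}


def parity_gap(decisions: Iterable[tuple[str, bool]]) -> float:
    """Largest difference in allocation rate between any two groups (0.0 means parity)."""
    rates = allocation_rates(decisions)
    if not rates:
        raise ValueError("no decisions to audit")
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: group labels and outcomes are made up for illustration.
decisions = [("A", True), ("A", True), ("A", False),
             ("B", True), ("B", False), ("B", False), ("B", False)]
print(allocation_rates(decisions))      # A is about 0.667, B is 0.25
print(round(parity_gap(decisions), 3))  # 0.417
```

A large gap flags a policy for closer review; it does not by itself establish that the policy is unfair, since base rates of need may differ between groups.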

Lightning Talk: Ethical Preferences with Autonomous Agents

The future will see autonomous agents acting in the same environment as humans, over extended periods of time, in areas as diverse as driving, assistive technology, and health care. In this scenario, such agents should follow moral values and ethical principles (appropriate to where they will act), as well as safety constraints. How can safety constraints, moral values, and ethical principles be embedded in artificial agents? We discuss an example of how CP-nets, an AI framework for reasoning about preferences, can be used as a computational model of ethical preferences.
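CP-nets (conditional preference networks) give each variable a conditional preference table that ranks its values given the values of its parent variables. The sketch below is a minimal, illustrative Python model under stated assumptions, not the speaker's model: the driving variables, their values, and the name `optimal_outcome` are hypothetical. For an acyclic CP-net, the most preferred outcome consistent with some fixed values (evidence) can be found by a forward sweep in topological order, giving each free variable its best value given its parents.

```python
from graphlib import TopologicalSorter
from typing import Optional

# Hypothetical CP-net: each variable maps to (parents, CPT). A CPT maps a tuple of
# parent values to the variable's values, ordered from most to least preferred.
CPNet = dict[str, tuple[tuple[str, ...], dict[tuple[str, ...], list[str]]]]

DRIVING_NET: CPNet = {
    "route": ((), {(): ["arterial", "school_zone"]}),
    "pedestrian": ((), {(): ["absent", "present"]}),
    # The preferred speed depends on the route and on whether a pedestrian is present.
    "speed": (
        ("route", "pedestrian"),
        {
            ("arterial", "absent"): ["normal", "slow"],
            ("arterial", "present"): ["slow", "normal"],
            ("school_zone", "absent"): ["slow", "normal"],
            ("school_zone", "present"): ["slow", "normal"],
        },
    ),
}


def optimal_outcome(net: CPNet, evidence: Optional[dict[str, str]] = None) -> dict[str, str]:
    """Most preferred outcome consistent with `evidence` (acyclic CP-nets only)."""
    evidence = dict(evidence or {})
    unknown = set(evidence) - set(net)
    if unknown:
        raise KeyError(f"unknown variables in evidence: {sorted(unknown)}")
    # Parents must be assigned before children, so sweep in topological order.
    # TopologicalSorter raises graphlib.CycleError if the network is cyclic.
    graph = {var: set(parents) for var, (parents, _) in net.items()}
    outcome: dict[str, str] = {}
    for var in TopologicalSorter(graph).static_order():
        if var in evidence:
            outcome[var] = evidence[var]  # fixed by the environment, not chosen
            continue
        parents, cpt = net[var]
        # Pick the top-ranked value given the values already chosen for the parents.
        outcome[var] = cpt[tuple(outcome[p] for p in parents)][0]
    return outcome


print(optimal_outcome(DRIVING_NET)["speed"])                             # normal
print(optimal_outcome(DRIVING_NET, {"pedestrian": "present"})["speed"])  # slow
```

Fixing `pedestrian` to `"present"` flips the preferred speed to `"slow"`, illustrating how a conditional preference can encode a safety-motivated ethical rule.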

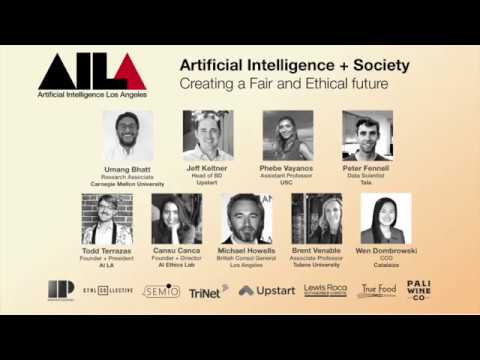

Speakers

Phebe Vayanos is an Assistant Professor of Industrial & Systems Engineering and Computer Science at the University of Southern California and Associate Director of the Center for AI in Society at USC.

K. Brent Venable is a professor of Computer Science at Tulane University and a Research Scientist at the Florida Institute for Human and Machine Cognition (IHMC).

Michael Howells is a British diplomat and Consul General in Los Angeles.

Jeff Keltner is head of Business Development at Upstart.

Cansu Canca is the founder and director of the AI Ethics Lab, where she leads teams of computer scientists and legal scholars to provide ethics analysis and guidance to researchers and practitioners.

Wen Dombrowski, MD, MBA, is a physician and technology executive who has been actively engaged in science and technology ethics and policy for two decades.

Dr. Peter Fennell is a Data Scientist at Tala, where he uses AI to build credit and fraud models for Tala’s global markets.

Host

Todd Terrazas – AI LA Founder & President

Moderator

Umang Bhatt is a graduate student in Electrical and Computer Engineering at Carnegie Mellon University.
