Michigan Robotics: Dynamic Legged Locomotion Lab

The online autonomous navigation and semantic mapping experiment presented above was conducted with the Cassie Blue bipedal robot at the University of Michigan. The sensors attached to the robot include an IMU, a 32-beam LiDAR, and an RGB-D camera. The whole online process runs in real time on a Jetson Xavier and a laptop with an i7 processor.

Video at 1X speed, full length (https://youtu.be/N8THn5YGxPw)

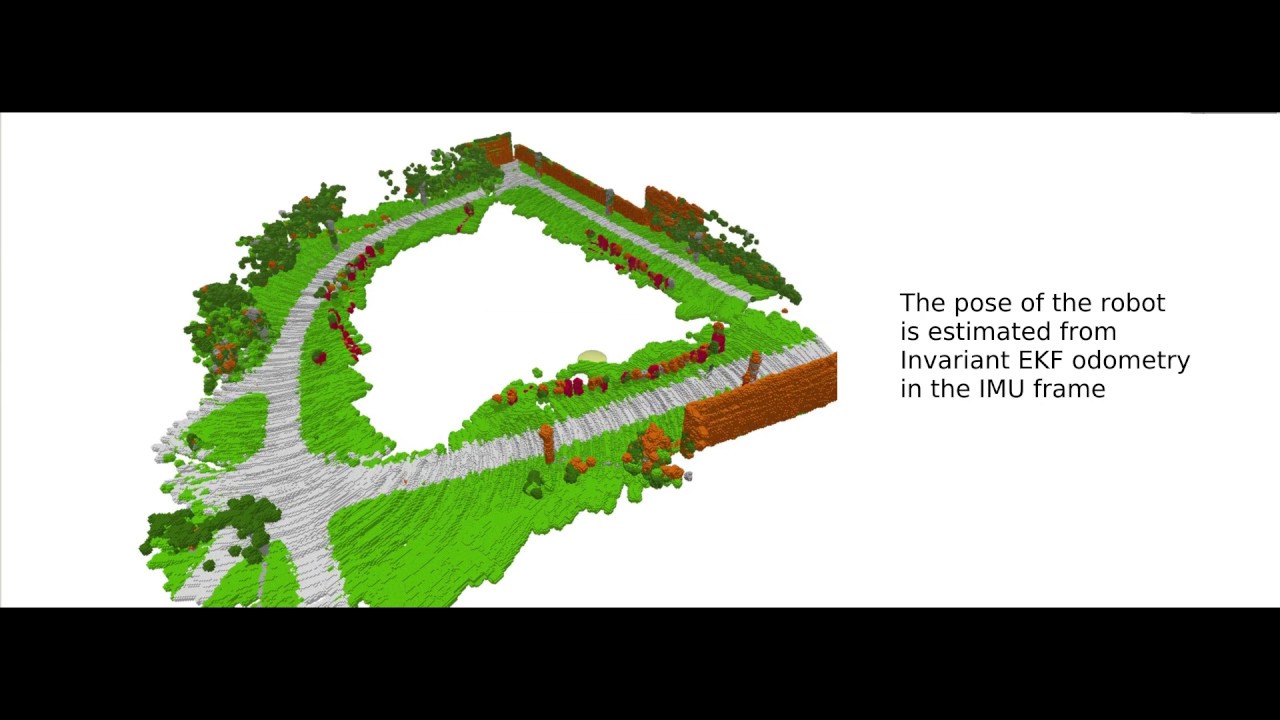

The software pipeline is as follows. We first segment images from the RGB-D camera with a fast neural network. Then, using the LiDAR-camera extrinsic calibration, we project the point cloud onto a time-synchronized segmented image to query prior semantic labels for the points. The resulting labels are noisy because the segmentation network was trained on a small dataset and because of camera motion blur, which the low evening light accentuates. The noisy labels are fused into a 3D semantic occupancy map through Bayesian spatial kernel smoothing, using robot poses estimated by Invariant-EKF odometry. From this 3D semantic map, we generate a 2D occupancy map that marks each location as walkable (in this case, belonging to the sidewalk semantic class) or not. This 2D occupancy map is passed to the online path planner, which generates waypoints for Cassie’s gait controller.
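The label-query and fusion steps of this pipeline can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the lab's implementation: it assumes a pinhole camera model, a hypothetical four-class label set, and a simplified per-voxel form of Bayesian kernel inference in which each labeled point adds kernel-weighted counts to the Dirichlet parameters of nearby voxels, using a compactly supported sparse kernel of the kind used in BKI mapping. The rule that collapses the 3D map to 2D (a column is walkable only if every voxel in it is most likely sidewalk) is also an assumption. The names `project_point`, `query_labels`, `sparse_kernel`, `bki_update`, and `walkable_grid` are hypothetical and do not come from the BKISemanticMapping or SegmentationMapping repositories.

```python
import math
from collections import defaultdict

# Illustrative sketch only: the class list and all function names are hypothetical.
CLASSES = ["background", "sidewalk", "grass", "building"]
WALKABLE = {CLASSES.index("sidewalk")}


def project_point(p, R, t, fx, fy, cx, cy):
    """Map a LiDAR point into the camera frame with extrinsics (R, t), then apply a pinhole model."""
    x, y, z = (sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
    if z <= 0.0:  # point lies behind the camera, so it has no pixel
        return None
    return round(fx * x / z + cx), round(fy * y / z + cy)


def query_labels(points, seg_image, R, t, intrinsics):
    """Attach the label of the pixel each point projects onto; drop points outside the image."""
    h, w = len(seg_image), len(seg_image[0])
    labeled = []
    for p in points:
        uv = project_point(p, R, t, *intrinsics)
        if uv is not None and 0 <= uv[0] < w and 0 <= uv[1] < h:
            labeled.append((p, seg_image[uv[1]][uv[0]]))
    return labeled


def sparse_kernel(d, ell):
    """Compactly supported sparse kernel: 1 at d = 0 and exactly 0 for d >= ell."""
    if d >= ell:
        return 0.0
    r = d / ell
    return (2.0 + math.cos(2 * math.pi * r)) / 3.0 * (1.0 - r) + math.sin(2 * math.pi * r) / (2 * math.pi)


def bki_update(alpha, labeled, cell_size, ell):
    """Add kernel-weighted label counts to the Dirichlet parameters of every voxel within ell.

    alpha is a defaultdict mapping a voxel index (ix, iy, iz) to a list of per-class parameters.
    """
    reach = math.ceil(ell / cell_size)
    for p, label in labeled:
        base = [math.floor(c / cell_size) for c in p]
        for dx in range(-reach, reach + 1):
            for dy in range(-reach, reach + 1):
                for dz in range(-reach, reach + 1):
                    cell = (base[0] + dx, base[1] + dy, base[2] + dz)
                    center = [(i + 0.5) * cell_size for i in cell]
                    k = sparse_kernel(math.dist(center, p), ell)
                    if k > 0.0:  # only touch voxels inside the kernel support
                        alpha[cell][label] += k


def walkable_grid(alpha):
    """Collapse 3D to 2D (assumed rule): a column is walkable only if every voxel's most likely class is walkable."""
    grid = {}
    for (ix, iy, _), a in alpha.items():
        best = max(range(len(a)), key=a.__getitem__)
        grid[(ix, iy)] = grid.get((ix, iy), True) and best in WALKABLE
    return grid
```

Because the kernel is exactly zero beyond `ell`, each labeled point only updates a small neighborhood of voxels, which keeps the fusion step local; the argmax over a voxel's Dirichlet parameters gives its most likely class. A usage pattern is `alpha = defaultdict(lambda: [0.001] * len(CLASSES))`, followed by `bki_update(alpha, query_labels(...), cell_size, ell)` for each synchronized scan.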

Remark: The resulting map is so precise that it looks like we are doing real-time SLAM (simultaneous localization and mapping). In fact, the map is based on dead reckoning via the InvEKF. We recommend that all groups working with legged robots adopt this form of the extended Kalman filter for their navigation pipeline; for more information, see the GTSAM post on legged robot factors (https://gtsam.org/2019/09/18/legged-robot-factors-part-I.html), publication ii) below, and the Wikipedia page (https://en.wikipedia.org/wiki/Invariant_extended_Kalman_filter).
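The dead reckoning in this remark rests on propagating the IMU state between filter corrections. A minimal sketch of that mean-state propagation in plain Python follows, under explicit assumptions: bias-free gyroscope and accelerometer readings, a z-up world frame with gravity (0, 0, -9.81) m/s², and a simple first-order discretization. The covariance propagation and the leg-kinematics correction that make up the actual contact-aided InvEKF are omitted, and the names `so3_exp` and `propagate` are hypothetical, not taken from the invariant-ekf repository.

```python
import math

# Assumption: z-up world frame, gravity in m/s^2.
GRAVITY = (0.0, 0.0, -9.81)


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(A, x):
    return [sum(A[i][k] * x[k] for k in range(3)) for i in range(3)]


def skew(w):
    return [[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]]


def so3_exp(w):
    """Rodrigues' formula: rotation matrix exp([w]x) for a rotation vector w."""
    theta = math.sqrt(sum(c * c for c in w))
    K = skew(w)
    K2 = matmul(K, K)
    if theta < 1e-9:  # small-angle limits of sin(t)/t and (1 - cos t)/t^2
        a, b = 1.0, 0.5
    else:
        a, b = math.sin(theta) / theta, (1.0 - math.cos(theta)) / theta ** 2
    return [[(1.0 if i == j else 0.0) + a * K[i][j] + b * K2[i][j] for j in range(3)] for i in range(3)]


def propagate(R, v, p, gyro, accel, dt, g=GRAVITY):
    """One first-order dead-reckoning step of orientation R, velocity v, position p (hypothetical helper)."""
    acc_world = [ai + gi for ai, gi in zip(matvec(R, accel), g)]  # R a + g
    p_new = [pi + vi * dt + 0.5 * ai * dt * dt for pi, vi, ai in zip(p, v, acc_world)]
    v_new = [vi + ai * dt for vi, ai in zip(v, acc_world)]
    R_new = matmul(R, so3_exp([wi * dt for wi in gyro]))  # body-frame angular rate
    return R_new, v_new, p_new
```

In the InvEKF literature, the triple (R, v, p) is packed into a single element of the matrix Lie group SE_2(3), and the filter's error is defined on that group. Integrating this step alone, without the filter's corrections, drifts quickly.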

Relevant Publications

i) Lu Gan, Ray Zhang, Jessy W. Grizzle, Ryan M. Eustice, and Maani Ghaffari, Bayesian Spatial Kernel Smoothing for Scalable Dense Semantic Mapping, arXiv preprint arXiv:1909.04631 (https://arxiv.org/abs/1909.04631), 2019. Code: BKI Semantic Mapping (https://github.com/ganlumomo/BKISemanticMapping) and Segmentation/PointCloud Generation (https://github.com/UMich-BipedLab/SegmentationMapping).

ii) Ross Hartley, Maani Ghaffari, Ryan M. Eustice, and Jessy W. Grizzle, Contact-Aided Invariant Extended Kalman Filtering for Robot State Estimation (https://arxiv.org/abs/1904.09251), in press, International Journal of Robotics Research, 2019. Code: Software (https://github.com/RossHartley/invariant-ekf).

iii) Jiunn-Kai Huang and Jessy W. Grizzle, Improvements to Target-Based 3D LiDAR to Camera Calibration (https://github.com/UMich-BipedLab/extrinsic_lidar_camera_calibration/blob/master/LiDAR2CameraCalibration.pdf). Code: Software (https://github.com/UMich-BipedLab/extrinsic_lidar_camera_calibration).

iv) Yukai Gong, Ross Hartley, Xingye Da, Ayonga Hereid, Omar Harib, Jiunn-Kai Huang, and Jessy Grizzle, Feedback Control of a Cassie Bipedal Robot: Walking, Standing, and Riding a Segway (http://web.eecs.umich.edu/faculty/grizzle/papers/Feedback_Control_of_a_Cassie_Bipedal_Robot__Standing_and_Walking_Final.pdf), American Control Conference, June 2019. Video (https://youtu.be/UhXly-5tEkc), Controller Software (https://github.com/UMich-BipedLab/Cassie_FlatGround_Controller).
