Primoh

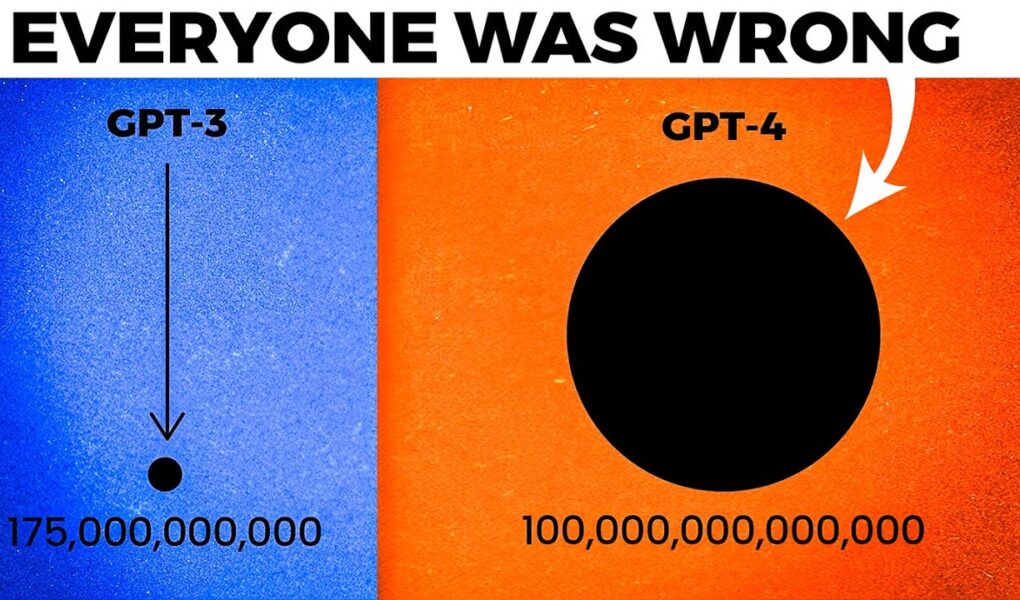

GPT-4 was widely assumed to be a single, dense model with over a trillion parameters, but according to leaked reports, it's built quite differently.

Reportedly, OpenAI uses a Mixture of Experts (MoE) Transformer to scale GPT-4 up. Interestingly, the research behind this approach was published by Google around 2021-2022, but it was OpenAI that successfully implemented and productionized it for GPT-4.
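
To make the MoE idea concrete, here is a minimal toy sketch in plain Python, not GPT-4's actual code or configuration. A learned "router" scores every expert for each token, only the top-k experts run, and their outputs are mixed using softmax weights over the selected scores. The class names, the sizes (8-dim tokens, 16 experts, top-2 routing), and the random untrained weights are all hypothetical choices for illustration. Real MoE models also add details omitted here, such as load-balancing losses and expert capacity limits.

```python
import math
import random

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def matvec(w, x):
    # w is a list of rows (out_dim x in_dim); returns w @ x
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

def relu(v):
    return [max(0.0, a) for a in v]

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

class Expert:
    """Hypothetical tiny feed-forward expert: d_model -> d_hidden -> d_model."""
    def __init__(self, d_model, d_hidden, rng):
        self.w_in = random_matrix(d_hidden, d_model, rng)
        self.w_out = random_matrix(d_model, d_hidden, rng)

    def __call__(self, x):
        return matvec(self.w_out, relu(matvec(self.w_in, x)))

    def num_params(self):
        return len(self.w_in) * len(self.w_in[0]) + len(self.w_out) * len(self.w_out[0])

class MoELayer:
    """Toy sparse MoE layer: a router picks the top_k experts for each token."""
    def __init__(self, d_model, d_hidden, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.experts = [Expert(d_model, d_hidden, rng) for _ in range(num_experts)]
        self.router = random_matrix(num_experts, d_model, rng)  # one score row per expert
        self.top_k = top_k

    def route(self, x):
        logits = matvec(self.router, x)
        # keep the indices of the top_k highest-scoring experts
        top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.top_k]
        # softmax over the selected experts' logits only, so the weights sum to 1
        weights = softmax([logits[i] for i in top])
        return top, weights

    def __call__(self, x):
        top, weights = self.route(x)
        out = [0.0] * len(x)
        for i, w in zip(top, weights):  # only the chosen experts do any work
            y = self.experts[i](x)
            out = [o + w * yi for o, yi in zip(out, y)]
        return out, top

    def param_counts(self):
        # expert parameters only (router excluded): total stored vs. used per token
        per_expert = self.experts[0].num_params()
        return per_expert * len(self.experts), per_expert * self.top_k

if __name__ == "__main__":
    layer = MoELayer(d_model=8, d_hidden=32, num_experts=16, top_k=2)
    rng = random.Random(1)
    token = [rng.uniform(-1, 1) for _ in range(8)]
    output, chosen = layer(token)
    print("experts used for this token:", chosen)
    print(layer.param_counts())  # (8192, 1024)
```

The parameter counts show why an MoE model can be "huge" without being proportionally expensive to run: the layer stores all 16 experts, but each token only passes through 2 of them.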

—

Apple’s AI Strategy: https://www.youtube.com/watch?v=1iU9j7mjqVg

—

#gpt4 #ai #programming

OpenAI has integrated GPT-4 into ChatGPT, effectively making it "ChatGPT 4". It's a lot better than GPT-3.5, but it costs more per API call, and using it in ChatGPT requires the $20-a-month ChatGPT Plus subscription. GPT-4's architecture was kept secret for a while, but the details have finally leaked. They tell us how GPT-4 was trained, how ChatGPT was created, and more.

This is basically GPT-4 explained in 2 minutes. 🙂

—

Sources:

https://thealgorithmicbridge.substack.com/p/gpt-4s-secret-has-been-revealed

https://www.semianalysis.com/p/gpt-4-architecture-infrastructure

—

Music from Uppbeat (free for Creators!):

https://uppbeat.io/t/pryces/aspire

License code: MYVTHFIAWSQ8G4TA