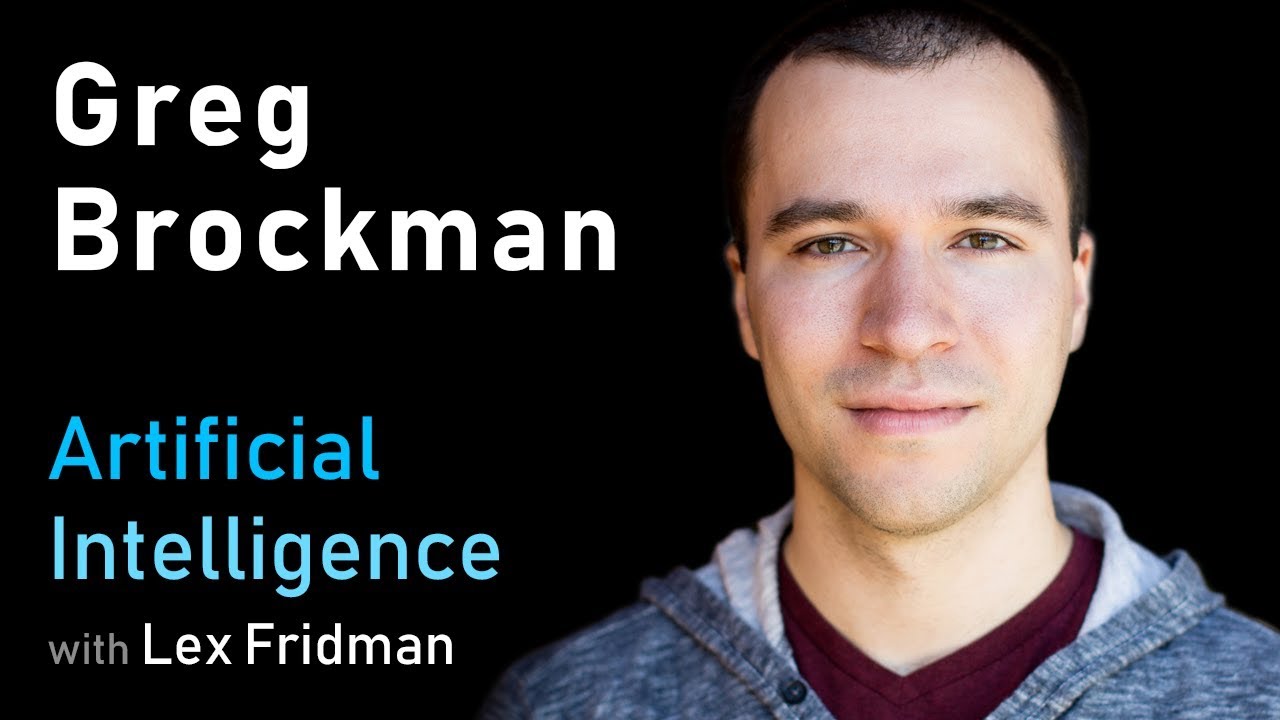

Lex Fridman

Greg Brockman is the Co-Founder and CTO of OpenAI, a research organization developing ideas in AI that will eventually lead to a safe & friendly artificial general intelligence that benefits and empowers humanity.

This conversation is part of the Artificial Intelligence podcast at MIT and beyond. The audio podcast version is available at https://lexfridman.com/ai/

INFO:

Podcast website: https://lexfridman.com/ai

Course website: https://deeplearning.mit.edu

YouTube Playlist: http://bit.ly/2EcbaKf

CONNECT:

– Subscribe to this YouTube channel

– Twitter: https://twitter.com/lexfridman

– LinkedIn: https://www.linkedin.com/in/lexfridman

– Facebook: https://www.facebook.com/lexfridman

– Instagram: https://www.instagram.com/lexfridman

– Medium: https://medium.com/@lexfridman


This was a thought-provoking conversation about the future of artificial intelligence in our society. When we're busy working on incremental progress in AI, it's easy to forget to look up at the stars and to remember the big picture of what we are working to create and how to do it so it benefits everyone.

This ‘are we living in a simulation’ question is really bugging you, eh Lex? 🙂

The general solution to most hobbyists only having a few saws and drills at home isn't for the government to set up CNC routers all over the country, but for the hobbyist to join a Makerspace.

In some ways renting GPU time as a collective is easier than setting up a Makerspace, because the resources can be anywhere on the internet, for instance where power and cooling are least expensive.

Until electricity and microprocessor fabrication are as cheap as ideas, there will always be scarcity of both.

Can we get some time stamps my brutha?

Basic structure of the atom

Invisible momentum. Plug into

Affect the entire planet

General intelligence

Telephone invented by two people at the same time

Somebody else would have come along w Einstein

Nuclear weapons

1950: Uber. Internet, GPS. Phone

Human intellectual labor

AGI – most transformative technology

20 neurons

Stop thinking about yourself

At scale

Owned by the world

Billions of dollars

True mission – agi

For profit

Unduly concentrate power

Charter day to day

Self driving car

Shape how the world operates

Better performance, much larger scale

Generate fake news

Fake politician content

Human ingenuity. Scalable. Compute. Data

Shape existing systems in a different way

Basic structure of the atom

Idea affect the whole planet

Will happen at some point

Nuclear weapons

Read all the scientific literature. Cure all the diseases. Material abundance. Wealth. Enable creativity

Most transformative technology

Run larger neural nets

Missing ideas

Daring to dream

Benefits of artificial general intelligence for the world

Meh.

This is massive hubris that will end badly.

Watched

watched

Math – humanity's library

Most impactful for profit

Amount of value created

Shakespeare. GPT. Playing Dota

GAN

Working at scale

Reinforcement learning

How do you get neural networks to reason? – proving theorems

Security analysis

Physical robot

Cat vs Dota simulator

GPT-2

"Open"AI with closed source code. Isn't the fundamental concept of security to use open source, heavily scrutinized, software?

44:50 I'm shocked. OpenAI was supposed to be open and share its AI developments with the world. But as soon as they develop anything really good, they declare it unsafe to release and therefore keep it private. If that is their policy, then there is nothing open about OpenAI. They are hypocrites, promoting an image of openness while holding back and presumably benefiting from their best discoveries. It's fine if they want to keep their developments private, but they shouldn't also claim to be open at the same time.