H2O.ai

This presentation was filmed at the London Artificial Intelligence & Deep Learning Meetup: https://www.meetup.com/London-Artificial-Intelligence-Deep-Learning/events/245251725/.

Enjoy the slides: https://www.slideshare.net/0xdata/interpretable-machine-learning-using-lime-framework-kasia-kulma-phd-data-scientist.

– – –

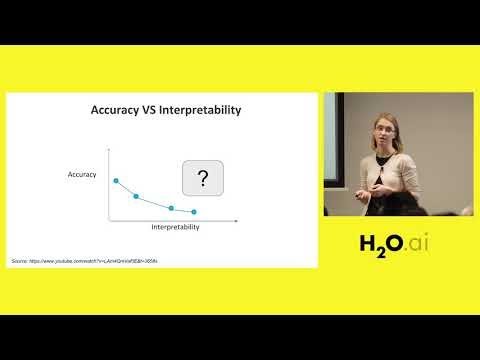

Kasia discussed the complexities of interpreting black-box algorithms and how these may affect some industries. She presented the most popular methods of interpreting Machine Learning classifiers, such as feature importance, partial dependence plots, and Bayesian networks. Finally, she introduced the Local Interpretable Model-Agnostic Explanations (LIME) framework for explaining the predictions of black-box learners, including text- and image-based models, using breast cancer data as a case study.

Kasia Kulma is a Data Scientist at Aviva with a soft spot for R. She obtained a PhD in evolutionary biology (Uppsala University, Sweden) in 2013 and has been working on all things data ever since. She has built recommender systems, customer segmentations, and predictive models, and she is now leading an NLP project at the UK’s leading insurer. In her spare time she tries to relax by hiking & camping, but if that doesn’t work 😉 she co-organizes R-Ladies meetups and writes a data science blog, R-tastic (https://kkulma.github.io/).

https://www.linkedin.com/in/kasia-kulma-phd-7695b923/

awesome dear

Great talk. I'm excited to try out lime.

great

This is a wonderful presentation that touches on such an important part of deep network development. I was just wondering whether LIME can be used to interpret time series classification problems, and what that would look like?

Amazing presentation

Great speech

Well accomplished woman. What is she doing in London? She belongs in New York City or San Francisco

x1.5 makes it more understandable.

Great talk.

Hela?

I am beginning to work with H2O/lime. Thank you for providing the rationale for using this program.

She is so smart and makes those nitty-gritty details really interesting through her sweet presentation.

Great lecture Kasiu!

Well explained