Spark MIT

Code available: https://github.com/MIT-SPARK/Kimera

Paper: https://arxiv.org/abs/1910.02490

Kimera has also been used in:

– 3D Dynamic Scene Graphs:

Video: https://www.youtube.com/watch?v=SWbofjhyPzI&feature=youtu.be

Paper: https://arxiv.org/abs/2002.06289

For more robotics research follow: twitter.com/lucacarlone1

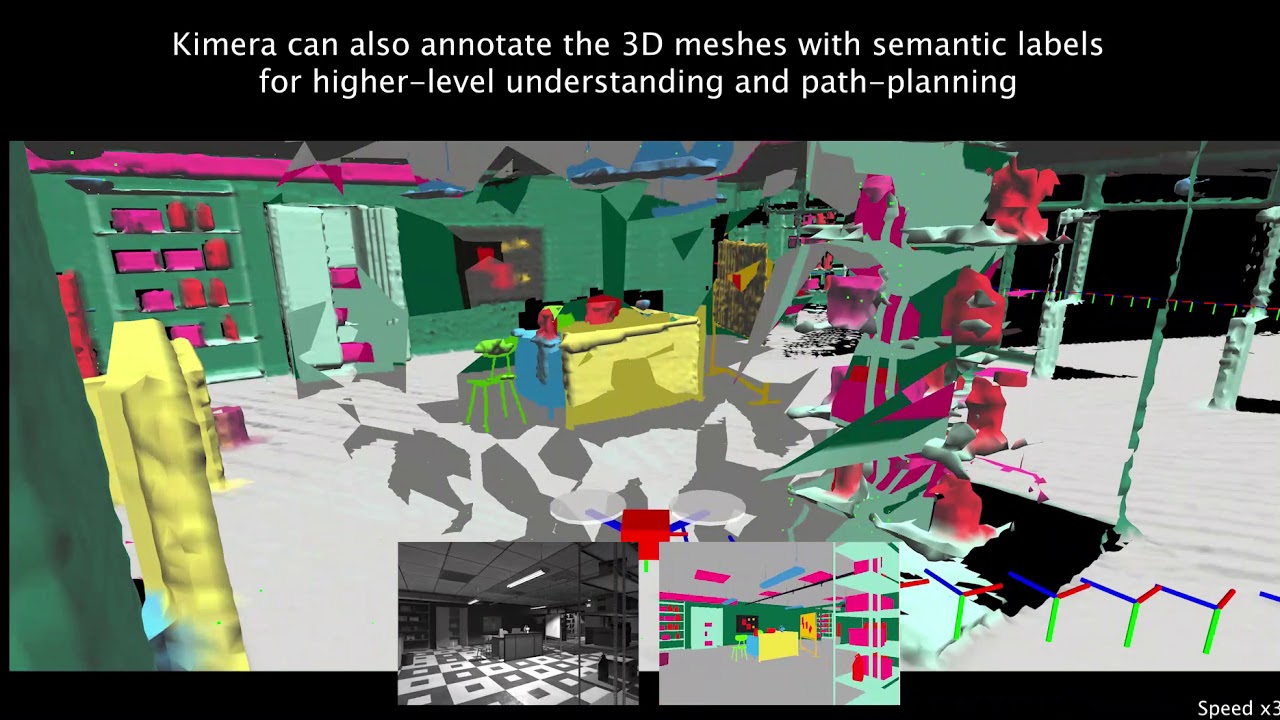

Abstract: We provide an open-source C++ library for real-time metric-semantic visual-inertial Simultaneous Localization And Mapping (SLAM). The library goes beyond existing visual and visual-inertial SLAM libraries (e.g., ORB-SLAM, VINS-Mono, OKVIS, ROVIO) by enabling mesh reconstruction and semantic labeling in 3D. Kimera is designed with modularity in mind and has four key components: a visual-inertial odometry (VIO) module for fast and accurate state estimation, a robust pose graph optimizer for global trajectory estimation, a lightweight 3D mesher module for fast mesh reconstruction, and a dense 3D metric-semantic reconstruction module. The modules can be run in isolation or in combination, hence Kimera can easily fall back to a state-of-the-art VIO or a full SLAM system. Kimera runs in real-time on a CPU and produces a 3D metric-semantic mesh from semantically labeled images, which can be obtained by modern deep learning methods. We hope that the flexibility, computational efficiency, robustness, and accuracy afforded by Kimera will build a solid basis for future metric-semantic SLAM and perception research, and will allow researchers across multiple areas (e.g., VIO, SLAM, 3D reconstruction, segmentation) to benchmark and prototype their own efforts without having to start from scratch.
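To make the "run in isolation or in combination" idea concrete, here is a minimal Python sketch of a pipeline built from interchangeable stages. It only illustrates the modular design described in the abstract: the function names, the dictionary-based state, and `run_pipeline` are all hypothetical and are not Kimera's actual C++ API.

```python
from typing import Callable, Dict, List

# A stage takes the current state and returns an updated copy.
# All names below are hypothetical stand-ins for the four components
# named in the abstract; none of them come from the Kimera code base.
Stage = Callable[[Dict], Dict]


def vio(state: Dict) -> Dict:
    # Stand-in for visual-inertial odometry (local state estimation).
    return {**state, "pose": "estimated"}


def pose_graph_optimizer(state: Dict) -> Dict:
    # Stand-in for robust global trajectory estimation.
    return {**state, "trajectory": "optimized"}


def mesher(state: Dict) -> Dict:
    # Stand-in for fast, lightweight mesh reconstruction.
    return {**state, "mesh": "lightweight"}


def semantic_reconstruction(state: Dict) -> Dict:
    # Stand-in for dense metric-semantic reconstruction.
    return {**state, "semantic_mesh": "labeled"}


def run_pipeline(stages: List[Stage], frame: Dict) -> Dict:
    # Apply the selected stages in order; any subset can be chosen.
    state = dict(frame)
    for stage in stages:
        state = stage(state)
    return state


# VIO only: the "fall back to a state-of-the-art VIO" configuration.
vio_only = run_pipeline([vio], {"frame_id": 0})

# All four stages: the full metric-semantic configuration.
full = run_pipeline(
    [vio, pose_graph_optimizer, mesher, semantic_reconstruction],
    {"frame_id": 0},
)
```

In this sketch, choosing a configuration amounts to choosing which stages go into the list, which is the property the abstract attributes to Kimera's modular design.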

Can you elaborate on the following:

1) What is the point of showing trajectory optimisation at the end of the video without ground truth or a rough map?

2) What can we interpret from the comparison with and without outlier rejection?

Thank you

Really cool demo. I also tested the EuRoC dataset and found that the odometry output is around 4 Hz and the position update is delayed by around 1.5 s when running on a ThinkPad T470s (7th-gen i5, 8 GB). I would like to know whether it is possible to run it onboard a quadcopter, and what kind of onboard computer would be needed.

Awesome video!

I have many questions, but will ask only a few 🙂

– Is it possible to accelerate this with a GPU?

– Is including depth information from the camera possible, or even trivial?

Can I build it for Android?

Do you have a list of supported cameras and IMUs?