Jay Alammar

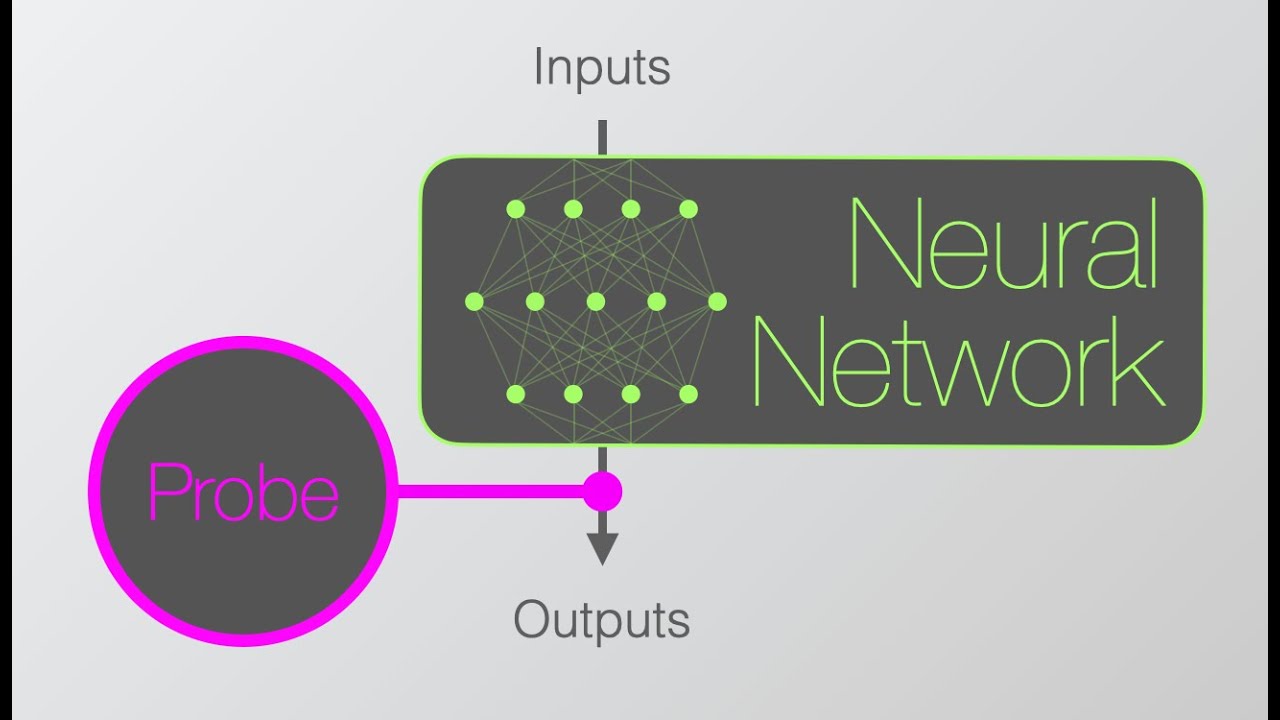

Probing classifiers are an Explainable AI tool for making sense of the representations that deep neural networks learn for their inputs. They let us test whether the numeric representation at the end (or in the middle) of a model encodes a property of the input that we are interested in (for example, whether an input token is a verb or a noun). Using probes, machine learning researchers have gained a better understanding of the differences between models and between the various layers of a single model.
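To make the idea concrete, here is a minimal sketch of a linear probe, not the setup from the video or from any of the papers linked below. It assumes synthetic vectors in place of real model representations: `encoded` has a binary property written along one direction, and `unencoded` is pure noise. The function names, the sizes, and the plain gradient-descent training loop are all illustrative choices.

```python
import numpy as np


def train_linear_probe(reps, labels, lr=0.5, steps=500):
    """Fit a logistic-regression probe on frozen representations.

    Only the probe's weights are updated; the representations are
    treated as fixed inputs, as they would be for a frozen model.
    """
    n, d = reps.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(steps):
        # Clip logits so the exponential stays numerically stable.
        logits = np.clip(reps @ w + b, -30.0, 30.0)
        probs = 1.0 / (1.0 + np.exp(-logits))
        grad = probs - labels  # gradient of the mean log loss w.r.t. logits
        w -= lr * (reps.T @ grad) / n
        b -= lr * grad.mean()
    return w, b


def probe_accuracy(reps, labels, w, b):
    """Fraction of examples where the probe predicts the right label."""
    preds = (reps @ w + b > 0).astype(int)
    return float((preds == labels).mean())


rng = np.random.default_rng(0)
n, d = 2000, 16  # hypothetical number of examples and vector size

# Binary property to probe for (think: 1 = verb, 0 = noun).
labels = rng.integers(0, 2, size=n)

# Assumption: synthetic stand-ins for representations taken from a model.
direction = rng.normal(size=d)
encoded = rng.normal(size=(n, d)) + np.outer(2 * labels - 1, direction)
unencoded = rng.normal(size=(n, d))  # carries no information about labels

# Train the probe on the first half, evaluate on the held-out second half.
split = n // 2
results = {}
for name, reps in [("encoded", encoded), ("unencoded", unencoded)]:
    w, b = train_linear_probe(reps[:split], labels[:split])
    results[name] = probe_accuracy(reps[split:], labels[split:], w, b)
    print(f"{name}: held-out probe accuracy = {results[name]:.3f}")
```

If the property is encoded in the representation, the probe's held-out accuracy should be high; if it is not, accuracy should sit near chance. With a real model, the synthetic arrays would be replaced by hidden states extracted from a chosen layer.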

Introductions (0:00)

Motivation for probes in Machine Translation (0:40)

Probing sentence encoders (3:42)

How a probe is trained (5:32)

Probing token representations (8:08)

Size of probes (9:15)

Better metrics using Control Tasks (9:48)

Conclusion (10:32)

Explainable AI Cheat Sheet: https://ex.pegg.io/

1) Explainable AI Intro: https://www.youtube.com/watch?v=Yg3q5x7yDeM&t=0s

2) Neural Activations & Dataset Examples: https://www.youtube.com/watch?v=y0-ISRhL4Ks

—–

What you can cram into a single $&!#* vector: Probing sentence embeddings for linguistic properties

https://www.aclweb.org/anthology/P18-1198/

Diagnostic classifiers: revealing how neural networks process hierarchical structure

http://ceur-ws.org/Vol-1773/CoCoNIPS_2016_paper6.pdf

Designing and Interpreting Probes with Control Tasks

https://www.aclweb.org/anthology/D19-1275/

Fine-grained Analysis of Sentence Embeddings Using Auxiliary Prediction Tasks

https://arxiv.org/abs/1608.04207

What do you learn from context? Probing for sentence structure in contextualized word representations

https://arxiv.org/abs/1905.06316

—–

Twitter: https://twitter.com/JayAlammar

Blog: https://jalammar.github.io/

Mailing List: http://eepurl.com/gl0BHL

—–

More videos by Jay:

The Narrated Transformer Language Model

https://youtu.be/-QH8fRhqFHM

Jay’s Visual Intro to AI

https://www.youtube.com/watch?v=mSTCzNgDJy4

How GPT-3 Works – Easily Explained with Animations

https://www.youtube.com/watch?v=MQnJZuBGmSQ

Up and Down the Ladder of Abstraction [interactive article by Bret Victor, 2011]

https://www.youtube.com/watch?v=1S6zFOzee78

The Unreasonable Effectiveness of RNNs (Article and Visualization Commentary) [2015 article]

https://www.youtube.com/watch?v=o9LEWynwr6g