The news is awash with stories of platforms clamping down on misinformation and the angst involved in banning prominent members. But these are Band-Aids over a deeper issue — namely, that the problem of misinformation is one of our own design. Some of the core elements of how we’ve built social media platforms may inadvertently increase polarization and spread misinformation.

If we could teleport back in time to relaunch social media platforms like Facebook, Twitter and TikTok with the goal of minimizing the spread of misinformation and conspiracy theories from the outset … what would they look like?

This is not an academic exercise. Understanding these root causes can help us develop better prevention measures for current and future platforms.


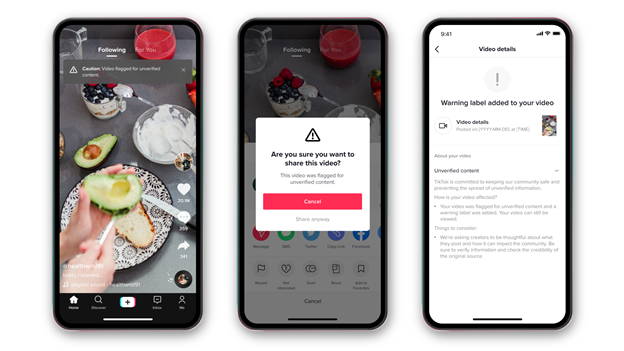

As one of Silicon Valley’s leading behavioral science firms, we’ve helped companies such as Google and Lyft understand human decision-making as it relates to product design. We recently collaborated with TikTok to design a new series of prompts (launched this week) to help stop the spread of potential misinformation on its platform.

Image Credits: Irrational Labs

The intervention reduced shares of flagged content by 24%. While TikTok is unique among platforms, the lessons we learned there have helped shape our ideas about what a social media redux could look like.

Create opt-outs

We can take much bigger swings at reducing views of unsubstantiated content than labels or prompts allow.

In the experiment we launched together with TikTok, people saw an average of 1.5 flagged videos over a two-week period. Yet in our qualitative research, many users said they were on TikTok for fun; they didn’t want to see any flagged videos whatsoever. In a recent earnings call, Mark Zuckerberg also spoke of Facebook users’ tiring of hyperpartisan content.

We suggest giving people an “opt out of flagged content” option that removes this content from their feeds entirely. To make this a true choice, the opt-out needs to be prominent, not buried somewhere users must go looking for it. We suggest putting it directly in the sign-up flow for new users and adding an in-app prompt for existing users.

Shift the business model

There’s a reason false news spreads six times faster on social media than real news: Information that’s controversial, dramatic or polarizing is far more likely to grab our attention. And when algorithms are designed to maximize engagement and time spent on an app, this kind of content is heavily favored over more thoughtful, deliberative content.

The ad-based business model is at the core of the problem; it’s why making progress on misinformation and polarization is so hard. One internal Facebook team tasked with looking into the issue found that “our algorithms exploit the human brain’s attraction to divisiveness.” But the project and the proposed work to address the issues were nixed by senior executives.

Essentially, this is a classic incentives problem. If the business metrics that define “success” no longer depend on maximizing engagement and time on site, everything changes. Polarizing content will no longer need to be favored, and more thoughtful discourse will be able to rise to the surface.

Design for connection

A primary driver of the spread of misinformation is people feeling marginalized and alone. Humans are fundamentally social creatures who look to be part of an in-group, and partisan groups frequently provide that sense of acceptance and validation.

We must therefore make it easier for people to find their authentic tribes and communities in other ways, rather than in groups that bond over conspiracy theories.

Mark Zuckerberg has said his ultimate goal with Facebook is to connect people. To be fair, in many ways Facebook has done that, at least on a surface level. But we should go deeper. Here are some ways:

We can design for more active one-on-one communication, which has been shown to increase well-being. We can also nudge offline connection. Imagine two friends are chatting on Facebook Messenger or via comments on a post. How about a prompt to meet in person when they live in the same city (post-COVID, of course)? Or, if they’re not in the same city, a nudge to hop on a voice or video call.

When the two people aren’t friends and the interaction is more contentious, platforms can play a role in highlighting not only the humanity of the other person but also what the two have in common. Imagine a prompt that showed, as you’re “shouting” online with someone, everything you share with that person.

Platforms should also disallow anonymous accounts, or at minimum encourage the use of real names. Clubhouse has good norm-setting on this: In the onboarding flow they say, “We use real names here.” Connection is based on the idea that we’re interacting with a real human. Anonymity obfuscates that.

Finally, help people reset

We should make it easy for people to get out of an algorithmic rabbit hole. YouTube has been under fire for its rabbit holes, but all social media platforms face this challenge. Once you click a video, you’re shown videos like it. This can be helpful (getting to that perfect “how to” video sometimes takes some searching), but for misinformation, it’s a death march. One video on flat earth leads to another, and then to other conspiracy theories. We need to help people eject from their algorithmic destiny.

With great power comes great responsibility

More and more people now get their news from social media, and those who do are less likely to be correctly informed about important issues. It’s likely that this trend of relying on social media as an information source will continue.

Social media companies are thus in a unique position of power and have a responsibility to think deeply about the role they play in reducing the spread of misinformation. They should absolutely continue to experiment and run tests with research-informed solutions, as we did together with the TikTok team.

This work isn’t easy. We knew that going in, but we have an even deeper appreciation for this fact after working with the TikTok team. There are many smart, well-intentioned people who want to solve for the greater good. We’re deeply hopeful about our collective opportunity here to think bigger and more creatively about how to reduce misinformation, inspire connection and strengthen our collective humanity all at the same time.


Walter Thompson
