Stanford Computational Imaging Lab

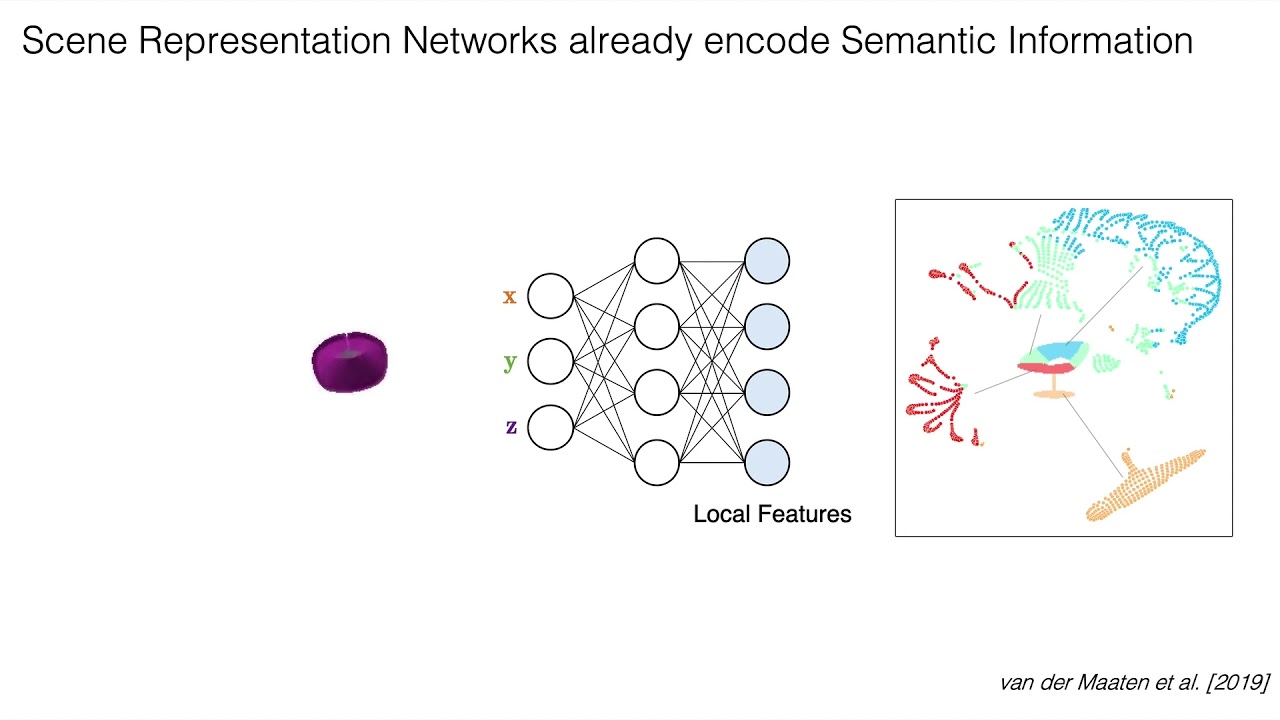

Biological vision infers multi-modal 3D representations that support reasoning about scene properties such as materials, appearance, affordance, and semantics in 3D. These rich representations enable humans, for example, to acquire new skills, such as learning a new semantic class, with extremely limited supervision. Motivated by this ability, we demonstrate that 3D-structure-aware representation learning leads to multi-modal representations that enable 3D semantic segmentation with extremely limited, 2D-only supervision. We build on emerging neural scene representations, which model the shape and appearance of 3D scenes and are supervised exclusively by posed 2D images, and are the first to demonstrate a representation that jointly encodes shape, appearance, and semantics in a 3D-structure-aware manner. Surprisingly, we find that only a few tens of labeled 2D segmentation masks are required to achieve dense 3D semantic segmentation using a semi-supervised learning strategy. We explore two novel applications of our semantically aware neural scene representation: 3D novel view and semantic label synthesis given only a single input RGB image or 2D label mask, and 3D interpolation of appearance and semantics.
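To make the idea of segmentation from very few labels concrete, here is a minimal toy sketch. It is not the method of the paper. It assumes, purely for illustration, that a pretrained scene representation already yields a fixed feature vector per 3D point, and that a small linear softmax head is then fit on a handful of labeled points and applied densely. The 2D toy features, the class centers, and the helper names `fit_linear_head` and `predict` are all hypothetical.

```python
import math
import random


def softmax(z):
    # Numerically stable softmax over a list of logits.
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def logits(W, b, x):
    # One linear score per class: W[c] . x + b[c].
    return [sum(w * xi for w, xi in zip(row, x)) + bc for row, bc in zip(W, b)]


def fit_linear_head(feats, labels, n_classes, steps=300, lr=0.5):
    # Hypothetical helper: full-batch gradient descent on softmax cross-entropy.
    # Only this linear head is trained; the features themselves stay frozen.
    dim, n = len(feats[0]), len(feats)
    W = [[0.0] * dim for _ in range(n_classes)]
    b = [0.0] * n_classes
    for _ in range(steps):
        gW = [[0.0] * dim for _ in range(n_classes)]
        gb = [0.0] * n_classes
        for x, y in zip(feats, labels):
            p = softmax(logits(W, b, x))
            for c in range(n_classes):
                err = p[c] - (1.0 if c == y else 0.0)
                gb[c] += err / n
                for d in range(dim):
                    gW[c][d] += err * x[d] / n
        for c in range(n_classes):
            b[c] -= lr * gb[c]
            for d in range(dim):
                W[c][d] -= lr * gW[c][d]
    return W, b


def predict(W, b, x):
    # Hypothetical helper: index of the highest-scoring class.
    z = logits(W, b, x)
    return max(range(len(z)), key=z.__getitem__)


# Toy stand-in for frozen per-point features (assumption: 2D, three classes).
rng = random.Random(0)
centers = [(2.0, 0.0), (-2.0, 0.0), (0.0, 3.0)]
feats, true = [], []
for c, (cx, cy) in enumerate(centers):
    for _ in range(100):
        feats.append((cx + rng.gauss(0, 0.3), cy + rng.gauss(0, 0.3)))
        true.append(c)

# Only 5 labeled points per class are used for training (15 of 300).
labeled_idx = [c * 100 + i for c in range(3) for i in range(5)]
W, b = fit_linear_head(
    [feats[i] for i in labeled_idx], [true[i] for i in labeled_idx], n_classes=3
)

# Dense labeling: classify every point, including all unlabeled ones.
pred = [predict(W, b, x) for x in feats]
accuracy = sum(p == t for p, t in zip(pred, true)) / len(true)
print(f"labeled points: {len(labeled_idx)}, accuracy on all points: {accuracy:.2f}")
```

The point of the sketch is only that, when features already separate the classes well, a small number of labels can suffice to label everything else; how the features are learned from posed 2D images is outside its scope.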

Semantic Implicit Neural Scene Representations with Semi-supervised Training | 3DV 2020