Presentation slides: http://www.slideshare.net/SessionsEvents/ted-willke-sr-principal-engineer-intel

Can Cognitive Neuroscience Provide a Theory of Deep Learning Capacity?

Deep neural networks have achieved learning feats for video, image, and speech recognition that leave other techniques far behind. For example, the error rate on the ImageNet 2012 object recognition challenge was halved with the introduction of deep convolutional nets, and they now dominate these competitions. At the same time, industry is busy putting them to use in applications ranging from autonomous driving to product recommenders, and researchers continue to propose more elaborate topologies and intricate training techniques. But our theoretical understanding of how these networks encode representations of the “things they see” lags far behind, as does our understanding of their limitations.

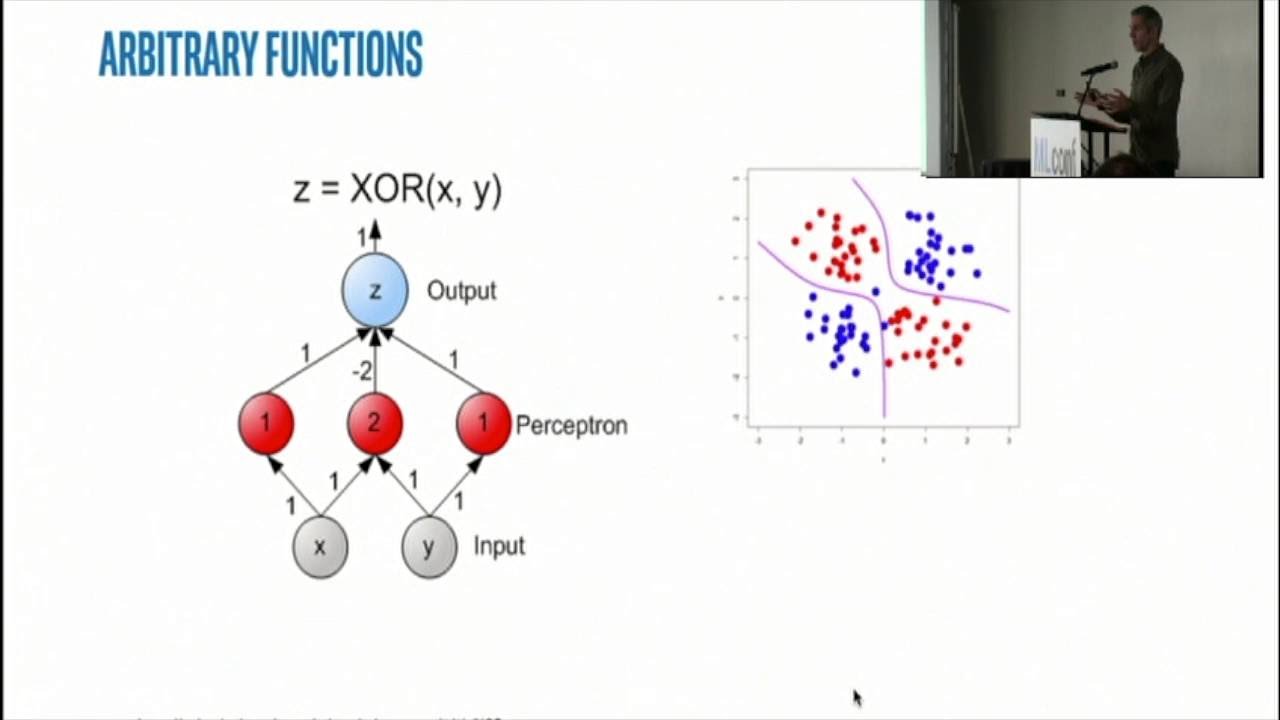

To advance deep neural network design from “black magic” to an engineering problem, we need to understand the impact that the choice of topology and parameters has on learnt representations and on the processing that a network is capable of. How many representations can a given network store? How does representation “reuse” impact learning rate and learning capacity? How many tasks can a given network perform?

In this talk, I’ll describe why the human brain, with its seemingly unlimited parallel distributed processing, is downright terrible at multi-tasking and why this is totally logical. I’ll also describe the theoretical implications this may have for artificial neural networks. Finally, I’ll describe very recent work that sheds some light on how representations are encoded, and how our research team is extending this work to develop practical best practices for network design.

MLconf
