Vsauce2

Watch my newest video, “The Demonetization Game”: https://youtu.be/kOnEEeHZp94

Install Raid for Free IOS: http://bit.ly/2HtjCLc ANDROID: http://bit.ly/2Th4Nga

Get 50k silver immediately and a free Epic Champion as part of the new player program. Thanks for supporting, Vsauce2.

MONTAGE SONG: “Synthetic Life” by Julian Emery, James Hockley & Adam Noble

https://www.youtube.com/watch?v=KV6XksdotX8

By the 1950s, science fiction was beginning to become reality: machines didn’t just calculate; they began to learn. Machine calculating was out. Machine learning was in. But we had to start small.

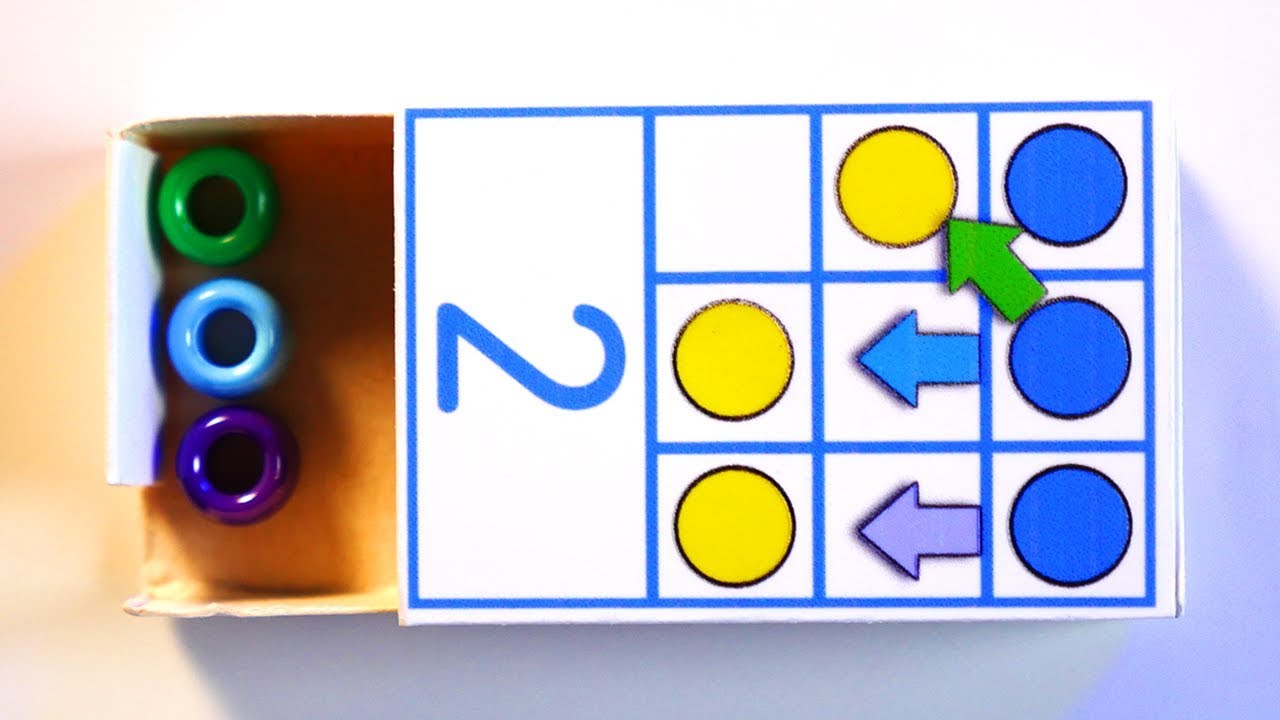

Donald Michie’s “Machine Educable Noughts And Crosses Engine” — MENACE — was composed of 304 separate matchboxes that each depicted a possible state of a checker game. MENACE eventually learned to play perfectly, and we replicate and explain that process with Shreksapawn, our adaptation of Martin Gardner’s MENACE-inspired game of Hexapawn.

The goal of MENACE and Hexapawn was to determine how to get machines to learn, and eventually to get them to think. As we realized how the simplest computers could learn to play games perfectly, we may have stumbled on the secret to humans playing the game of life perfectly… or at least getting a tiny bit closer to perfection every day.

By harnessing math, the human intellect, and a bag full of crafting supplies, we can gain just a little glimpse into how intelligence actually works — whether it’s human or artificial.

*** SOURCES ***

“How to Build a Game-Learning Machine and Then Teach It to Play and Win,” Martin Gardner, Scientific American: http://cs.williams.edu/~freund/cs136-073/GardnerHexapawn.pdf

Matthew Scroggs’ MENACE Simulator: http://www.mscroggs.co.uk/menace/

MENACE: Machine Educable Noughts And Crosses Engine: http://www.mscroggs.co.uk/blog/19

*** LINKS ***

Vsauce2 Links

Twitter: https://twitter.com/VsauceTwo

Facebook: https://www.facebook.com/VsauceTwo

Hosted, Produced, And Edited by Kevin Lieber

Instagram: http://instagram.com/kevlieber

Twitter: https://twitter.com/kevleeb

Research And Writing by Matthew Tabor

https://twitter.com/matthewktabor

VFX By Eric Langlay

https://www.youtube.com/c/ericlanglay

Huge Thanks To Paula Lieber

https://www.etsy.com/shop/Craftality

Get Vsauce’s favorite science and math toys delivered to your door!

https://www.curiositybox.com/

Select Music By Jake Chudnow: http://www.youtube.com/user/JakeChudnow

MY PODCAST — THE CREATE UNKNOWN

https://www.youtube.com/thecreateunknown

#education #vsauce

Source

I wanted to respond to two types of comments that have appeared more than once. I read all the comments and really appreciate when you all dig deep into these topics.

First, we're missing some matchboxes because we don't actually need them! Some matchboxes work for two scenarios — once for the board position they display, and also for the board position that is a mirror image of it. The computer learns both board positions at the same time, but yes, at first glance it appears as though I just left some out. Martin Gardner didn't think they were necessary, either.

Second, Hexapawn is a much simpler version of chess, so terms like "checkmate" and "stalemate" aren't exactly the same. They're simpler, too. In chess, checkmate is achieved when there is no way for your opponent to move without the king being captured. A stalemate occurs when a player has no legal move. A stalemate results in a draw.

So, when that occurs in Hexapawn, it has the trappings of a stalemate but has the result and the spirit of a checkmate — the win is awarded to the player who moves in a way that creates a stalemate for their opponent. Because the situation results in a win instead of a draw, I thought it was more appropriate to compare it to checkmate, though it may have been clearer to avoid the language of "checkmate" entirely.

FODASE

Please don't play that awful music ever again.

This is why AI isn't conscious. It is just a machine that works on the matchbox mechanism, only amplified using electrical signals and logic gates.

Wish my DOTA 2 team mates were as smart as these match boxes

3:34 Market Gardener?

that got personal real fast…

what if you made a computer simulation of this, started by yourself, and then replaced yourself with another one of the AIs.

better yet, imagine making a chess version of this

My referral link to the game! It will give you a boost start boyz

@t

My referral link to the game! It will give you a boost start boyz

@t

My referral link to the game! It will give you a boost start boyz

@t

My referral link to the game! It will give you a boost start boyz

@t

My referral link to the game! It will give you a boost start boyz

@t

Great music!

9:04 To all the PC parents, don't simply reward your kids, punish them as well. But not abusively! Like you all assume the word punishment means… weirdos.

Gotcha…

I don't get it

2:57 You mean STALEmate, I CAN’T BELIEVE A SEVENTEEN YEAR OLD CORRECTS YOU ON THAT!!

I literally got an ad on AI and machine learning in the beginning of the video by Coursera.

?♂️?

🙂

HEY NOW!!! YOU'RE AN ALLSTAR!!! GET YOUR GAME ON!!!

2:58 you said checkmate but you were describing stalemate

Edit: I just red your comment woops

Me:goes to get a bunch of matchboxes

And… SHREK

here's a question, who dislikes this? i mean really? what are they even watching this for?

so, it would be possible to create a perfect computer for chess out of… how many thousands of gigantic matchboxes?

I'm 12 years old and love your videos keep producing the content?

Bottom row, 5th matchbox

Attomatic loss for AI

Bead removed

If that scenario happens again, then what?

similar to others

Sherk is god

lemme take my lil matchbox computer to a worldwide hexapawn championship

Let’s say when you took out the orange and blue bead, you lost.

Do you take out both beads, or only one???

6:31

Oh no… Don't let Kevin win this round…

Nobody:

Me: Is this machine learning?

5:06

That video was quite the emotional rollercoaster

So basically if you learn all the moves that are made by computer when it gets perfected(when it always wins)

You could never lose to another human if you go second

Only 2 round 2 matchboxes? Then what if i move the farthest right pawn?

Best video about AI EVER!!

It wasn't clear to me, but I presume you are only removing the last bead when it loses (vs the preceding bead(s)). Also, in your alternate reward version, are you adding beads along the entire decision path?

And finally a question for your reward version: what if you took the number of moves to the winning move (say, 3 moves) and placed n! beads in reverse order (so for 3 moves, 6 beads added: 3 to the last move, 2 to the previous move and 1 to the initial move) giving much more weight much more rapidly to those paths?

And last question because I find this very interesting (glad I found this channel): it seems to me that the simplification is great to help understand decision trees but that the moment you go up an order, you have to change your evaluation of paths because an initial move may be for a branch of all losing paths down the tree so could you end up with a dead-end path at the third move? I guess that would represent a resignation? I don't know if I'm thinking about this correctly, I'm no mathematician.

What would happen if you had 2 computers playing against each other? You would have to always make the first move, but then just let it play out

touches stove

remove bead

get icecream because i touched stove

add bead

/life,

Perfect definitive proof that bullying is good for society…. To a certain degree

Fantastic video