IBM Research

By: Pin-Yu Chen, IBM Research

April 22, 2019

NeurIPS paper (NeurIPS 2018): https://www.research.ibm.com/artificial-intelligence/publications/paper/?id=Efficient-Neural-Network-Robustness-Certification-with-General-Activation-Functions

Abstract:

Finding the minimum distortion of adversarial examples, and thus certifying the robustness of neural network classifiers, is known to be a challenging problem. Nevertheless, it has recently been shown that a non-trivial certified lower bound on the minimum distortion can be obtained, and some progress has been made in this direction by exploiting the piecewise linear nature of ReLU activations. However, generic robustness certification for general activation functions remains largely unexplored. To address this issue, in this paper we introduce CROWN, a general framework to certify the robustness of neural networks with general activation functions. The novelty of our algorithm lies in bounding a given activation function with linear and quadratic functions, which allows it to handle general activation functions, including but not limited to the four popular choices: ReLU, tanh, sigmoid and arctan. In addition, we facilitate the search for a tighter certified lower bound by adaptively selecting appropriate surrogates for each neuron activation. Experimental results show that CROWN on ReLU networks can notably improve the certified lower bounds compared to the current state-of-the-art algorithm Fast-Lin, while having comparable computational efficiency. Furthermore, CROWN also demonstrates its effectiveness and flexibility on networks with general activation functions, including tanh, sigmoid and arctan. To the best of our knowledge, CROWN is the first framework that can efficiently certify non-trivial robustness for general activation functions in neural networks.
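To make the idea of "bounding an activation with linear functions" concrete, here is a minimal sketch, assuming a two-layer ReLU network g(x) = w2 · relu(W1 x + b1) + b2 whose scalar output is the classification margin (positive means the correct class wins) and an ℓ∞ perturbation ball of radius eps around the input x0. It is not the authors' implementation: it covers only ReLU and linear bounds (no quadratic bounds, no tanh/sigmoid/arctan), and all function names are hypothetical. Each unstable neuron gets a chord as its upper bound and a lower bound of slope 0 or 1 picked per neuron (a simple adaptive rule in the spirit of the abstract's adaptive surrogate selection). The bounds are then propagated back to the input, where a linear function is minimized exactly over the ℓ∞ ball. A binary search over eps turns the bound into a certified lower bound on the minimum distortion, assuming the bound shrinks as eps grows.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def relu_relaxation(l: float, u: float) -> Tuple[float, float, float]:
    """Return (a_low, a_up, b_up) such that, for every z in [l, u],
    a_low * z <= relu(z) <= a_up * z + b_up."""
    if u <= 0:                 # always inactive: relu(z) = 0
        return 0.0, 0.0, 0.0
    if l >= 0:                 # always active: relu(z) = z
        return 1.0, 1.0, 0.0
    a_up = u / (u - l)         # chord from (l, 0) to (u, u)
    b_up = -a_up * l
    a_low = 1.0 if u >= -l else 0.0  # hypothetical adaptive lower-slope rule
    return a_low, a_up, b_up


def crown_lower_bound(W1: Matrix, b1: Vector, w2: Vector, b2: float,
                      x0: Vector, eps: float) -> float:
    """Sound lower bound of g(x) over the box ||x - x0||_inf <= eps."""
    n_in, n_hid = len(x0), len(b1)
    # 1) Interval bounds on the hidden pre-activations z = W1 x + b1.
    centers = [sum(W1[j][i] * x0[i] for i in range(n_in)) + b1[j]
               for j in range(n_hid)]
    radii = [eps * sum(abs(w) for w in row) for row in W1]
    # 2) Per neuron, use the relaxation that bounds w2_j * relu(z_j) from below:
    #    the lower line if w2_j >= 0, the upper line if w2_j < 0.
    lam: Vector = []           # effective coefficient on z_j
    const = b2
    for j in range(n_hid):
        a_low, a_up, b_up = relu_relaxation(centers[j] - radii[j],
                                            centers[j] + radii[j])
        if w2[j] >= 0:
            lam.append(w2[j] * a_low)
        else:
            lam.append(w2[j] * a_up)
            const += w2[j] * b_up
    # 3) Substitute z = W1 x + b1, giving a linear function of x.
    coef = [sum(lam[j] * W1[j][i] for j in range(n_hid)) for i in range(n_in)]
    const += sum(lam[j] * b1[j] for j in range(n_hid))
    # 4) Exact minimum of coef . x + const over the l_inf ball (dual l_1 norm).
    return (sum(coef[i] * x0[i] for i in range(n_in)) + const
            - eps * sum(abs(c) for c in coef))


def certified_radius(W1: Matrix, b1: Vector, w2: Vector, b2: float,
                     x0: Vector, eps_hi: float = 1.0, iters: int = 30) -> float:
    """Binary search for the largest eps whose lower bound stays positive."""
    lo, hi = 0.0, eps_hi
    for _ in range(iters):
        mid = (lo + hi) / 2
        if crown_lower_bound(W1, b1, w2, b2, x0, mid) > 0:
            lo = mid           # margin certified at this radius
        else:
            hi = mid
    return lo
```

For example, with `W1=[[1.0], [-1.0]]`, `b1=[0.0, 0.0]`, `w2=[1.0, -1.0]`, `b2=0.0` the network computes g(x) = relu(x) - relu(-x) = x, so at `x0=[1.0]` the true minimum distortion is 1; here every neuron stays stable for eps < 1, the bound equals 1 - eps exactly, and `certified_radius(..., eps_hi=2.0)` returns a value just below 1.0. On networks with unstable neurons the bound is looser, which is where the choice of per-neuron surrogate matters.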

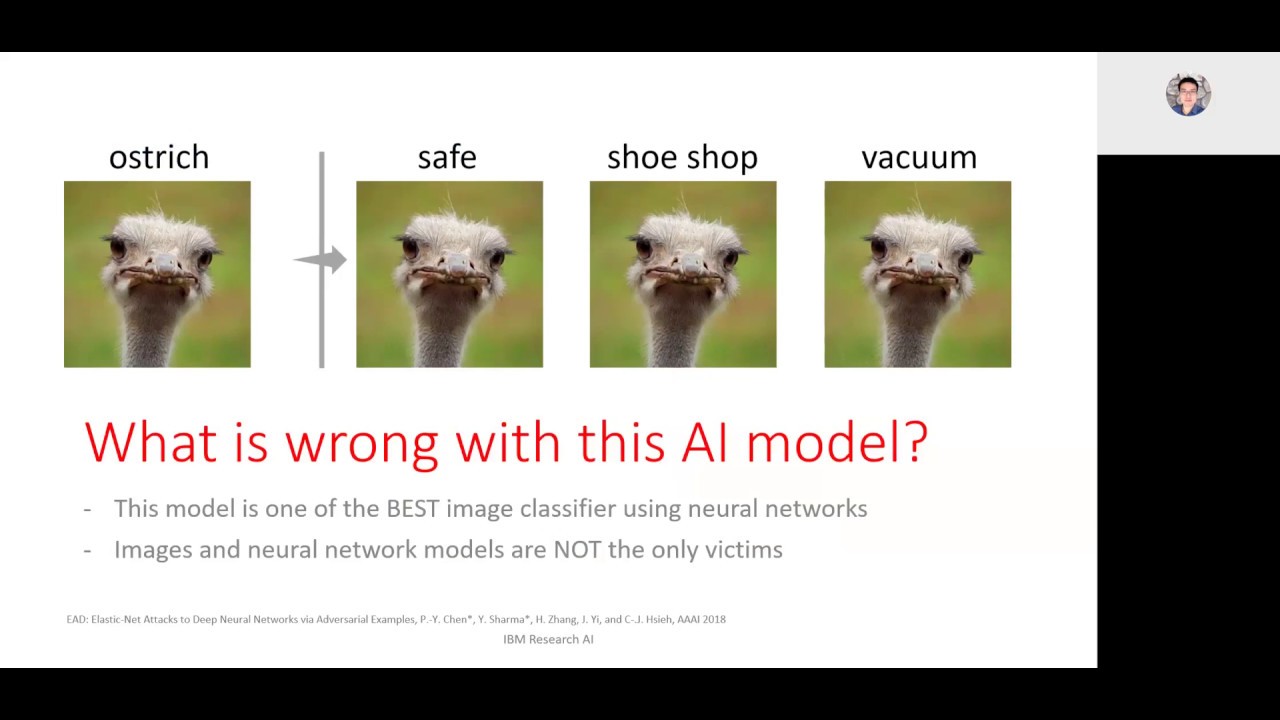

This talk will present recent progress from our research team on the adversarial robustness of AI models, including attack, defense, and certification methods. The presentation will focus on our recent publications at AAAI 2019, ICLR 2019, AAAI 2018, ACL 2018, ICLR 2018, ECCV 2018 and NeurIPS 2018. We will introduce query-efficient and practical black-box adversarial attacks (AutoZOOM and the hard-label attack), an effective defense against audio adversarial examples (temporal dependency), and efficient robustness evaluation and certification tools (CROWN and CNN-Cert).

Bio: Pin-Yu Chen is a research staff member at IBM Research AI. He is also a PI of ongoing MIT-IBM Watson AI Lab research projects. His recent research has focused on adversarial machine learning and the robustness of neural networks. His research interests include graph learning, network data analytics and their applications to data mining, machine learning, signal processing, and cybersecurity. More information can be found at www.pinyuchen.com.
